- Introduction

- Platform Overview

- Architecture Comparison

- 3.1. Storage Architecture

- 3.2. Compute Model

- 3.3. Services Layer

- Cost Structure Analysis

- 4.1. Pricing Models

- 4.2. Hidden Cost Factors

- 4.3. Cost Scaling Patterns

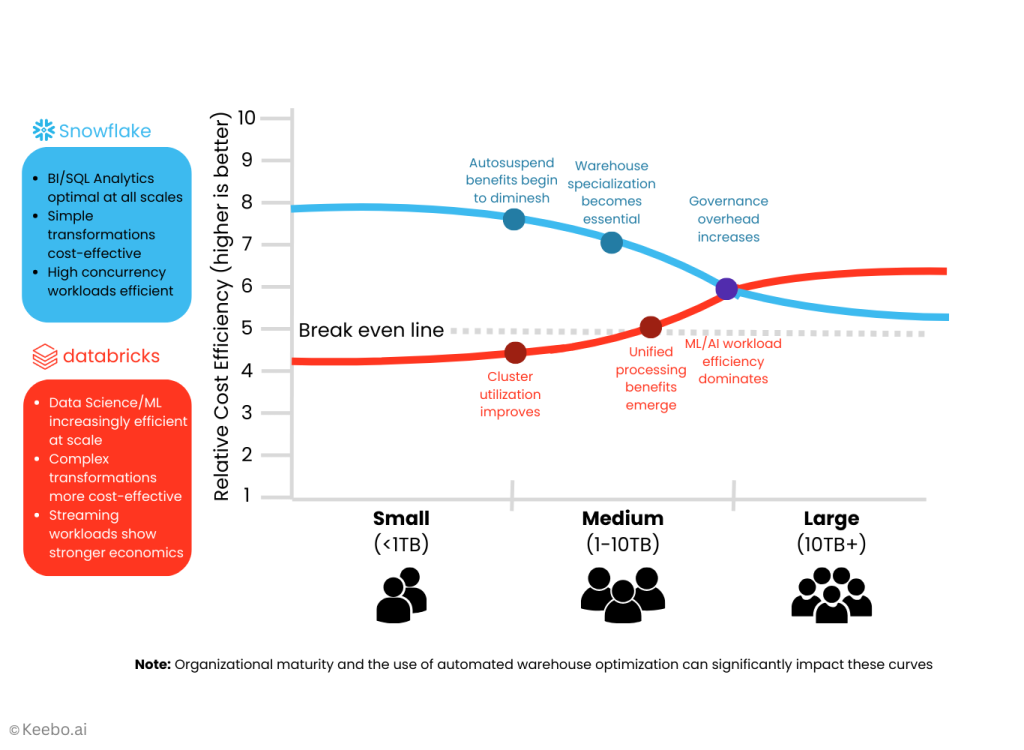

- 4.3.1. Scaling with data volume

- 4.3.2. Scaling with user counts

- 4.3.3. Scaling with query complexity

- 4.4. Real-World Cost Scenarios

- Performance Benchmarks

- 5.1. Query Performance

- 5.1.1. Data loading benchmarks

- 5.1.2. Analytical query performance

- 5.1.3. Concurrency handling

- 5.1.4. Cold start performance

- 5.2. Data Engineering Workloads

- 5.2.1. ETL/ELT performance comparison

- 5.2.2. Data transformation efficiency

- 5.2.3. Streaming data handling

- 5.3. Machine Learning/AI Workloads

- 5.3.1. Model training performance

- 5.3.2. Inference serving capabilities

- 5.3.3. LLM and GenAI support

- Cloud Integration & Ecosystem

- 6.1. Multi-Cloud Capabilities

- 6.1.1. AWS/Azure/GCP support differences

- 6.1.2. Cross-cloud data sharing

- 6.1.3. Regional availability

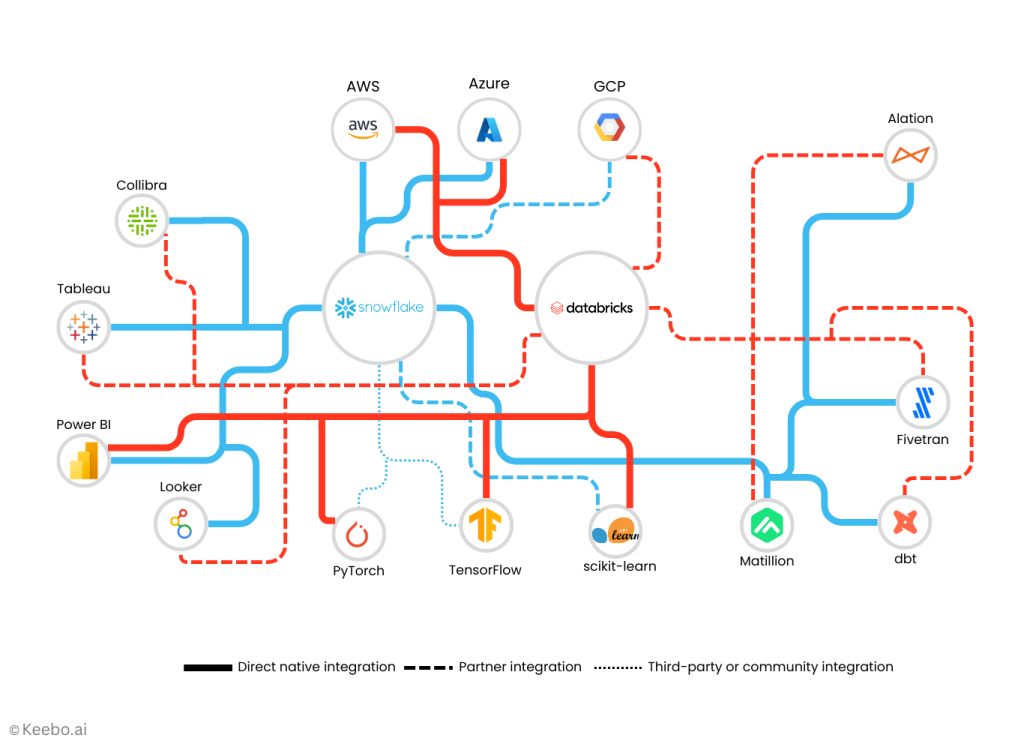

- 6.2. Tool & Partner Ecosystem

- 6.2.1. BI tool integration

- 6.2.2. Data governance tool support

- 6.2.3. Third-party extensions and marketplace

- Minimum Efficient Scale Analysis

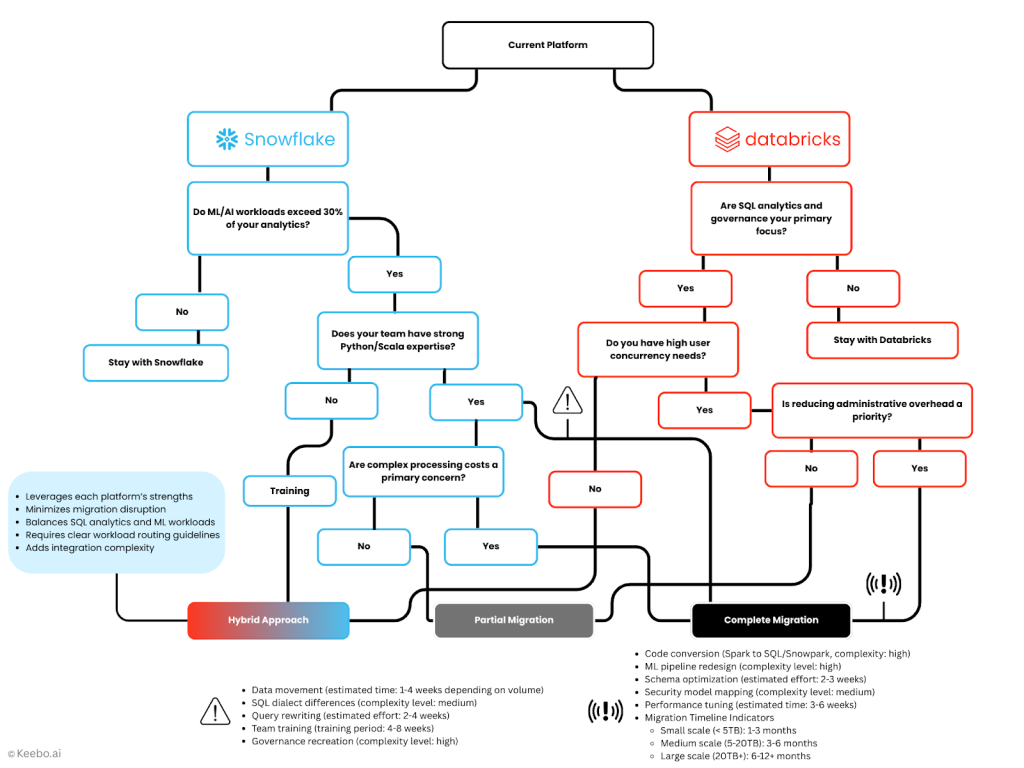

- Migration Considerations

- 8.1. From Snowflake to Databricks

- 8.1.1. Migration approach and methodology

- 8.1.2. Cost implications

- 8.1.3. Performance impact expectations

- 8.1.4. Common challenges

- 8.2. From Databricks to Snowflake

- 8.2.1. Migration strategy and approach

- 8.2.2. Cost considerations

- 8.2.3. Performance expectations

- 8.2.4. Typical obstacles

- 8.3. Hybrid Approach Considerations

- 8.3.1. Complementary use cases

- 8.3.2. Integration architecture

- 8.3.3. Cost optimization in hybrid environments

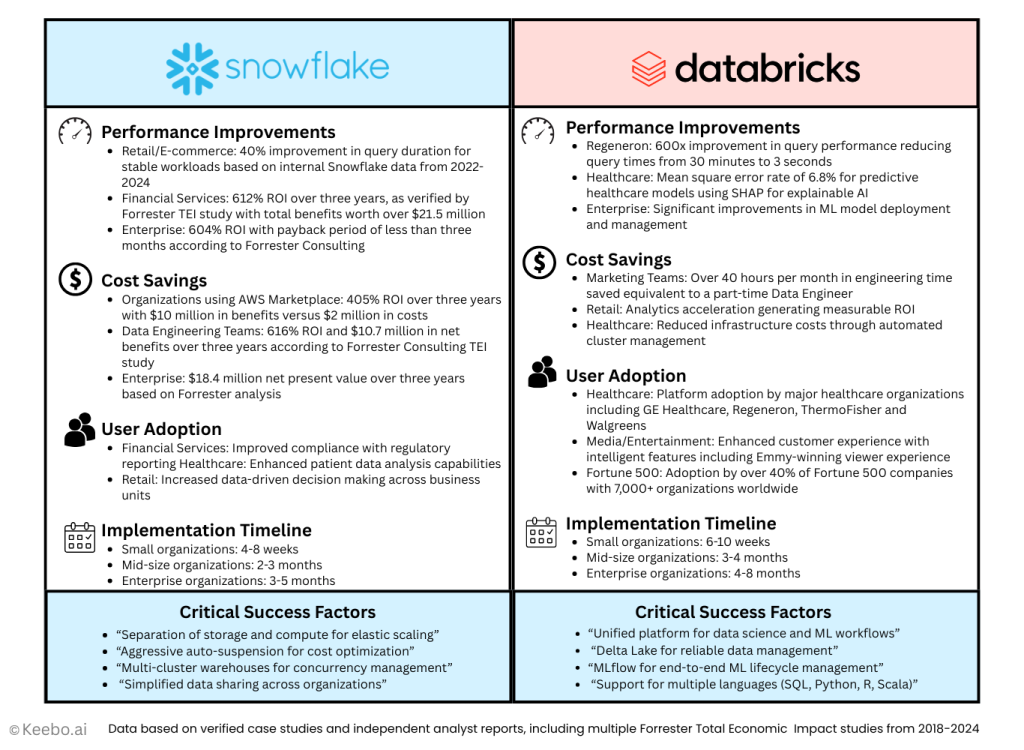

- Real-World Customer Examples

- Decision Framework

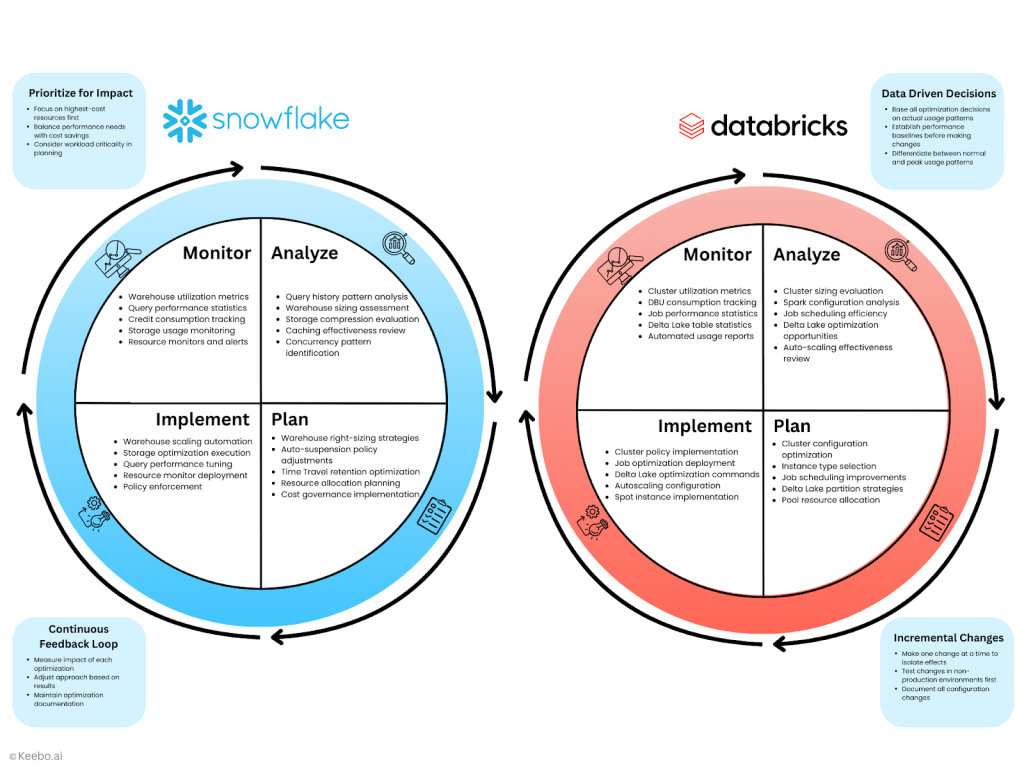

- Optimization Best Practices

- 11.1. Snowflake Cost Optimization

- 11.1.1. Warehouse sizing and auto-suspension

- 11.1.2. Storage optimization techniques

- 11.1.3. Query performance tuning

- 11.1.4. Credit monitoring and governance

- 11.2. Databricks Cost Optimization

- 11.2.1. Cluster sizing and autoscaling

- 11.2.2. Job scheduling optimization

- 11.2.3. Storage format optimization

- 11.2.4. DBU monitoring and management

- 11.3. Cross-Platform Optimization for Hybrid Implementations

- Future Outlook

- Conclusion & Recommendations

- Final Thoughts

- Additional Resources

1. Introduction

As someone who has spent the last two decades working at the intersection of AI and database systems, both in academia as a professor at the University of Michigan and in industry, I’ve had the unique opportunity to witness the evolution of data platforms from multiple perspectives. I personally know several of Databricks’ founders from our days as labmates at UC Berkeley and have tremendous respect for what they’ve built. Similarly, at Keebo, we’ve developed a deep partnership with Snowflake over the past few years, working closely with hundreds of their customers and gaining intimate knowledge of their platform’s inner workings.

This experience with both ecosystems allows me to approach a Databricks vs. Snowflake comparison with genuine appreciation for the strengths of both platforms. My goal isn’t to advocate for either solution, but to provide an objective analysis that helps you navigate this important decision based on your organization’s specific needs.

1.1. Market context and platform selection importance

In today’s data-driven business landscape, selecting the right analytics platform isn’t just a technical decision—it’s a strategic one that can significantly impact an organization’s ability to derive value from its data assets. Databricks and Snowflake have emerged as two dominant forces in the modern data stack, each offering compelling but distinctly different approaches to handling enterprise-scale analytics workloads.

As organizations increasingly migrate their data infrastructure to the cloud, the choice between these platforms represents a critical fork in the road—one that will influence everything from day-to-day operations to long-term analytics capabilities and, perhaps most importantly, overall cost efficiency. This decision has become even more consequential as both platforms have expanded their capabilities, blurring traditional boundaries between data warehousing, data lakes, and machine learning environments.

1.2. Evolution of the analytics landscape

The data analytics ecosystem has evolved dramatically over the past decade. What began as a clear division between data warehouses (optimized for structured analytics) and data lakes (designed for raw, unstructured data) has transformed into a more nuanced landscape. Snowflake pioneered the cloud data warehouse model with its innovative separation of storage and compute, while Databricks evolved from its Apache Spark roots to develop the “lakehouse” paradigm—an architecture designed to combine the best elements of both warehouses and lakes.

Both companies now position themselves as comprehensive data platforms rather than point solutions, capable of handling everything from traditional business intelligence to cutting-edge machine learning workloads. This convergence creates both opportunity and complexity for organizations trying to make the right investment decision.

1.3. Critical role of cost and performance considerations

While features and capabilities often dominate platform comparisons, the economic implications of these choices can be equally profound. The architectural differences between Snowflake and Databricks translate directly into distinctive cost models and performance characteristics that must be thoroughly understood before making a commitment.

The reality is that many organizations have experienced unexpected cost overruns with both platforms when implementation decisions weren’t adequately informed by a comprehensive understanding of their pricing mechanisms. Unlike traditional on-premises solutions with predictable licensing costs, these cloud-native platforms employ usage-based billing that can scale dramatically—sometimes in unexpected ways—as workloads grow in volume, complexity, and diversity.

Performance considerations are equally critical. While both platforms can deliver exceptional performance, they do so through different technical approaches that may favor certain workload types over others. Understanding these nuances is essential for aligning technology choices with business requirements and optimizing both cost and performance outcomes.

1.4. Guide overview and learning objectives

This guide aims to give technology leaders, architects, and decision-makers a detailed, objective comparison of Databricks and Snowflake from both cost and performance perspectives. By the end of this guide, you’ll understand:

- The fundamental architectural differences between these platforms and their implications for various workload types

- The detailed cost structures of each platform, including both obvious and hidden factors that influence total cost of ownership

- Performance characteristics across diverse analytical workload types, from batch processing to real-time analytics and machine learning

- The concept of minimum efficient scale—at what point each platform becomes cost-effective for different use cases

- Migration considerations for organizations considering switching between platforms

- Practical optimization strategies to maximize value regardless of which platform you choose

Rather than advocating for either solution, my goal is to provide the analytical framework and factual insights you need to make the right choice for your organization’s specific requirements, technical environment, and budget constraints. Let’s begin by examining the core architectures of these platforms to establish a foundation for our discussion of their cost and performance implications.

2. Platform Overview

My background in both academic research and industry implementation has given me a front-row seat to how these platforms have evolved. While I’ll dive deep into their technical differences in later sections, I want to start with a high-level overview of what makes each platform distinctive.

2.1. Snowflake: The Data Cloud Pioneer

Snowflake was founded in 2012 with a radical vision: reimagining the data warehouse for the cloud era. What distinguished Snowflake from the beginning was an architecture that completely separated storage from compute, a concept that seems obvious today but was revolutionary at the time.

2.1.1. Core architecture and key differentiators

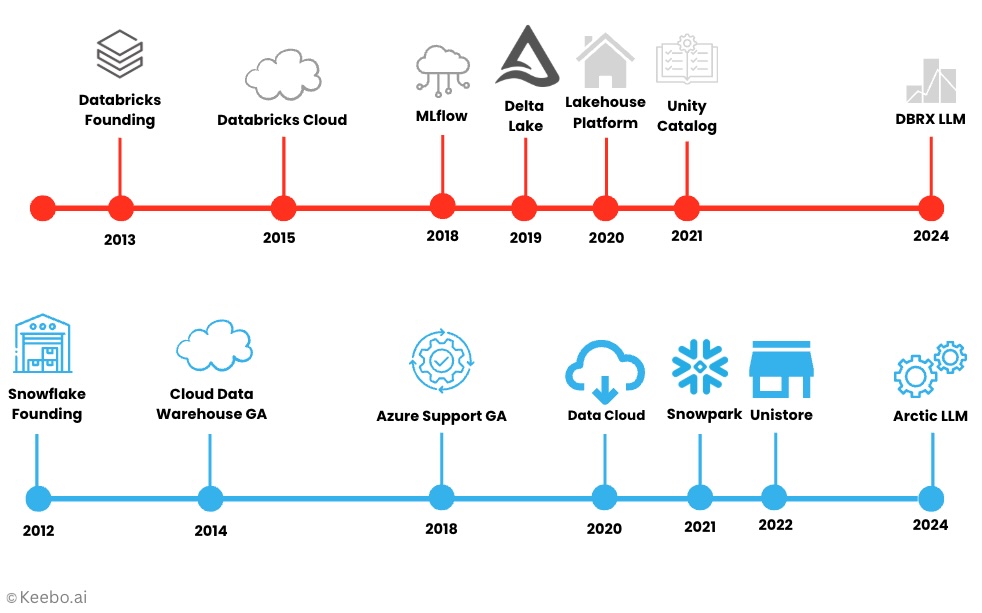

At its foundation, Snowflake’s architecture consists of three distinct layers:

- Storage layer: Data is stored in proprietary compressed columnar format in cloud object storage (S3, Azure Blob, or Google Cloud Storage)

- Compute layer: Virtual warehouses (essentially clusters) that can be scaled up, down, or paused independently

- Services layer: Handles metadata, security, query optimization, and transaction management

This separation has profound implications. Unlike traditional data warehouses where you provision capacity to handle peak workloads, Snowflake allows you to spin up resources on demand and scale them down when not needed—effectively matching your resource consumption to your actual workload.

2.1.2. Evolution from data warehouse to complete data platform

While Snowflake began as a cloud data warehouse, it has steadily expanded its capabilities. The platform now offers:

- Snowpark: Programmatic access for Python, Java, and Scala developers

- Unistore: Transactional workload support

- Data sharing: Secure, governed sharing across organizations without data movement

- Marketplace: Ecosystem for data products and applications

- Cortex AI: Native ML and generative AI capabilities

This evolution reflects Snowflake’s ambition to become the center of gravity for an organization’s entire data ecosystem—not just a warehouse for analytical queries.

2.1.3. Target use cases and ideal customer profiles

In my experience working with Snowflake customers, the platform particularly excels for:

- Organizations with primarily structured data and SQL-centric workloads

- Enterprises requiring strict data governance and security

- Teams seeking minimal operational overhead for data infrastructure

- Workloads involving cross-cloud or cross-region data sharing

- Businesses with variable analytical workload patterns

Snowflake’s strength lies in making complex data operations accessible through a familiar SQL interface, allowing data teams to focus on insight generation rather than infrastructure management.

2.2. Databricks: The Lakehouse Architecture

Databricks has a different origin story, emerging from the Apache Spark project at UC Berkeley’s AMPLab. Founded by the creators of Spark, Databricks began as a managed platform for Spark processing but has evolved into something much more comprehensive.

2.2.1. Foundational technology (Apache Spark origins)

The core of Databricks remains Apache Spark—the distributed computing framework designed for both batch and streaming workloads. Having observed Spark’s development from my days at Berkeley, I’ve watched it evolve from a research project to an enterprise-ready platform that can process enormous datasets with impressive efficiency.

Databricks built upon this foundation by:

- Creating a managed cloud service to simplify Spark deployment

- Adding collaborative notebook interfaces for data scientists and engineers

- Developing performance optimizations beyond open-source Spark

- Building enterprise features around governance, security, and reliability

2.2.2. The lakehouse paradigm explained

The term “lakehouse” reflects Databricks’ vision to combine the best aspects of data lakes and data warehouses. Traditional data lakes provide cheap storage and schema flexibility but lack performance and consistency guarantees. Data warehouses offer strong performance and consistency but with higher costs and less flexibility.

The lakehouse architecture attempts to bridge this gap through:

- Delta Lake: An open-source storage layer that brings ACID transactions to data lakes

- Unified batch and streaming: Consistent processing paradigms for both historical and real-time data

- Databricks SQL (formerly SQL Analytics): Warehouse-like performance for SQL queries against lake data

- Governance layer: Catalog and lineage tracking across the data lifecycle

This approach allows organizations to maintain a single copy of data that can serve multiple analytical needs—from BI reporting to machine learning.

| Capability | Traditional Data Lake | Traditional Data Warehouse | Lakehouse Approach |
|---|---|---|---|
| Storage Cost | Low | High | Low |
| Query Performance | Variable | Optimized | Optimized |
| Schema Flexibility | High | Low | High |
| ACID Transactions | No | Yes | Yes |
| BI Support | Limited | Excellent | Good to Excellent |
| ML/AI Support | Good | Limited | Excellent |
| Data Governance | Limited | Strong | Strong |

2.2.3. Primary use cases and ideal scenarios

Databricks typically shines in:

- Organizations with significant data science and machine learning workloads

- Environments dealing with both structured and unstructured data

- Teams with strong engineering capabilities seeking fine-grained control

- Workloads requiring both batch and streaming data processing

- Cases where Python, R, or Scala are the primary languages for data manipulation

The platform is particularly well-suited for organizations where advanced analytics and AI are central to their data strategy, rather than just traditional business intelligence.

Each platform reflects different philosophical approaches to data management, which I’ll explore in more depth when examining their architectural differences in the next section. The choice between them often hinges not just on technical capabilities but on organizational culture, existing skill sets, and strategic priorities.

3. Architecture Comparison

Understanding the architectural differences between Snowflake and Databricks provides the foundation for analyzing their cost and performance implications. While both platforms enable cloud-based data analytics at scale, they approach this challenge from fundamentally different angles—differences that stem from their distinct origins and design philosophies.

3.1. Storage Architecture

The way these platforms store and organize data represents perhaps their most fundamental difference, with cascading implications for performance, cost, and flexibility.

3.1.1. Snowflake’s micro-partitioning vs. Databricks Delta Lake

Snowflake stores data in its proprietary format using what it calls micro-partitions: contiguous units of storage that each hold roughly 50MB to 500MB of uncompressed data (the stored size is smaller, because the data is always compressed). Within each micro-partition, data is organized by column, which allows for highly efficient scanning and filtering. Snowflake automatically handles all aspects of data organization, including partitioning, clustering, and compression.

In contrast, Databricks’ Delta Lake is an open-source storage layer that organizes data in Parquet files (an open columnar format) with added transaction log capabilities. This approach gives Delta Lake ACID transaction support while maintaining compatibility with the Parquet ecosystem. Unlike Snowflake’s fully managed approach, Delta Lake gives users more explicit control over partitioning strategies, file sizes, and optimization techniques.

A key consideration: Snowflake’s approach is more hands-off and managed, while Databricks offers more granular control for teams that want to optimize their storage patterns.
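To make the “Parquet files plus a transaction log” idea concrete, here is a toy sketch of how an append-only log of add/remove actions can define a consistent table snapshot over immutable data files. This is a deliberately simplified illustration, not the actual Delta Lake protocol or API: the `Commit` class, the `snapshot` function, and the file names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Commit:
    """One atomic log entry: data files added and removed together."""
    version: int
    add: frozenset = field(default_factory=frozenset)
    remove: frozenset = field(default_factory=frozenset)


def snapshot(log, as_of=None):
    """Replay commits in version order and return the set of live files."""
    live = set()
    for commit in sorted(log, key=lambda c: c.version):
        if as_of is not None and commit.version > as_of:
            break
        live -= commit.remove
        live |= commit.add
    return live


# Hypothetical file names, for illustration only.
log = [
    Commit(0, add=frozenset({"part-000.parquet", "part-001.parquet"})),
    Commit(1, add=frozenset({"part-002.parquet"})),
    # A compaction rewrites two small files into one in a single commit.
    Commit(2, add=frozenset({"part-003.parquet"}),
           remove=frozenset({"part-000.parquet", "part-001.parquet"})),
]

current_files = snapshot(log)          # files a reader sees now
files_at_v1 = snapshot(log, as_of=1)   # files as of an older version
```

Because readers only ever see the files named by fully written commits, a half-finished write is invisible to them. The same replay also explains why older versions keep consuming storage until the removed files are physically cleaned up.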

3.1.2. Data format and organization differences

Beyond their core storage formats, these platforms differ in how they organize and manage data:

| Aspect | Snowflake | Databricks Delta Lake |
|---|---|---|
| File Format | Proprietary columnar | Open-source Parquet |
| Metadata Management | Centralized catalog | Unity Catalog / Hive Metastore |
| Partitioning | Automatic micro-partitioning | User-defined partitioning |
| File Sizing | Managed automatically | User-configurable |
| Clustering | Automatic with manual options | Manual via Z-ordering / range partitioning |
| Data Layout Optimization | Automatic + reclustering | Manual optimization commands |
| Schema Evolution | Managed | Supported through explicit commands |

The practical impact of these differences depends heavily on your team’s preferences for control versus automation. In my work with customers, I’ve noticed that data engineering teams with deep technical expertise often prefer Databricks’ approach, which allows them to optimize for specific access patterns. Organizations with smaller data teams typically favor Snowflake’s automated approach.

3.1.3. Impact on storage costs and performance

These architectural differences directly influence both storage efficiency and query performance:

Cost implications:

- Snowflake charges for actual compressed storage used

- Databricks storage costs depend on the underlying cloud storage (S3, ADLS, GCS)

- Both platforms benefit from compression, though compression ratios can vary

- Databricks may require more hands-on optimization to achieve optimal storage efficiency

- Snowflake’s Time Travel and Fail-safe features consume additional storage that must be accounted for

Performance implications:

- Snowflake’s proprietary format is highly optimized for its query engine

- Delta Lake’s Parquet format benefits from broader ecosystem optimizations

- Snowflake’s metadata layer provides excellent pruning capabilities

- Delta Lake’s data skipping and Z-ordering provide similar benefits but may require more explicit management

The efficiency of each approach ultimately depends on access patterns and workload types—a theme we’ll explore more thoroughly when discussing performance benchmarks.
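Both pruning in Snowflake and data skipping in Delta Lake rest on the same basic idea: keep per-file (or per-micro-partition) minimum and maximum values, and skip anything whose range cannot match the filter. The sketch below is a minimal illustration of that idea under my own assumptions; `FileStats` and `files_to_scan` are hypothetical names, not part of either platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    name: str
    min_date: str  # ISO dates compare correctly as plain strings
    max_date: str


def files_to_scan(stats, lo, hi):
    """Keep only files whose [min, max] range overlaps the filter [lo, hi]."""
    return [s.name for s in stats if s.max_date >= lo and s.min_date <= hi]


# Well-clustered layout: each file covers one month.
clustered = [
    FileStats("f1", "2024-01-01", "2024-01-31"),
    FileStats("f2", "2024-02-01", "2024-02-29"),
    FileStats("f3", "2024-03-01", "2024-03-31"),
]

# Poorly clustered layout: every file spans the whole quarter.
scattered = [
    FileStats("g1", "2024-01-01", "2024-03-31"),
    FileStats("g2", "2024-01-02", "2024-03-30"),
    FileStats("g3", "2024-01-03", "2024-03-29"),
]

scan_clustered = files_to_scan(clustered, "2024-02-10", "2024-02-20")
scan_scattered = files_to_scan(scattered, "2024-02-10", "2024-02-20")
```

The same query touches one file in the clustered layout and all three in the scattered one, which is why clustering and Z-ordering matter so much for scan cost on both platforms.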

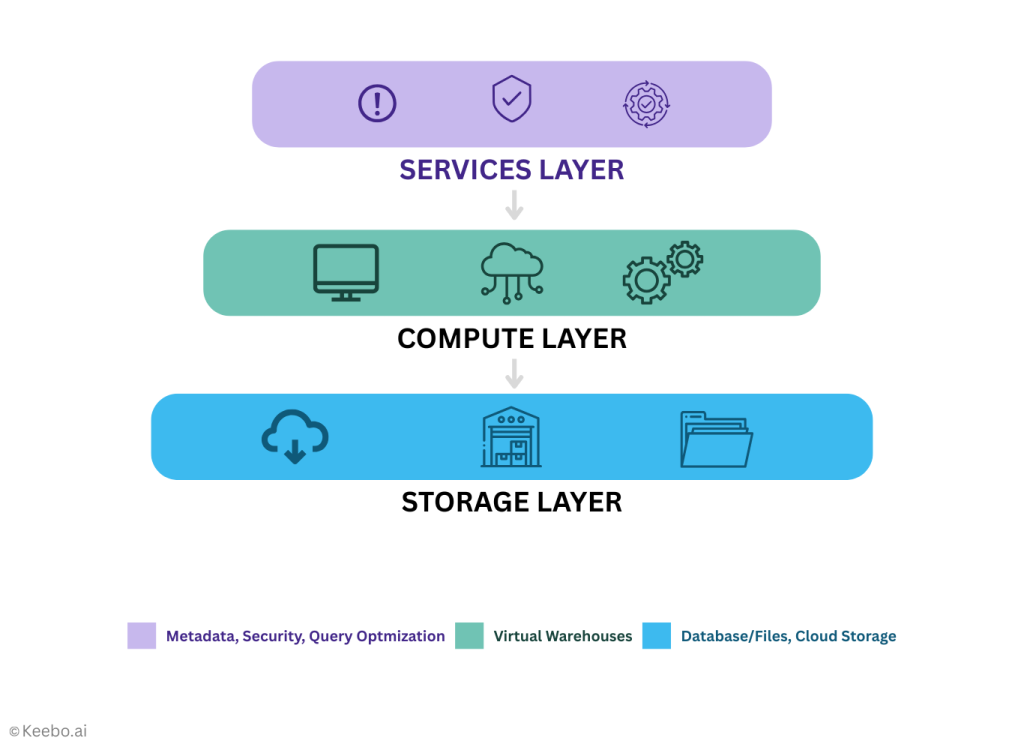

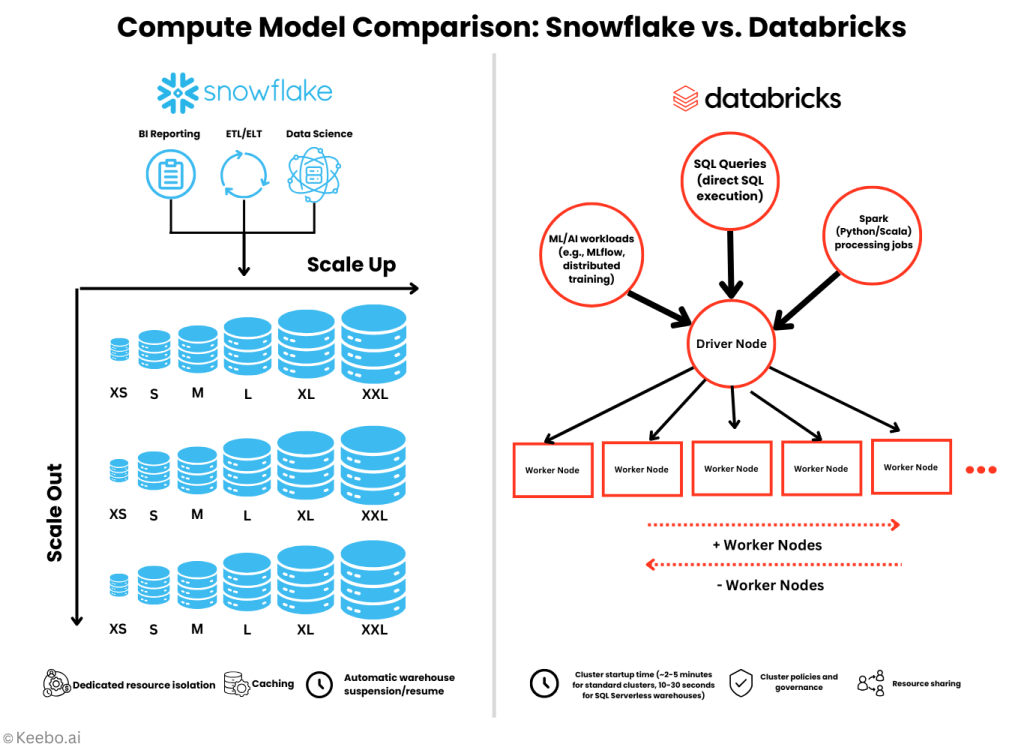

3.2. Compute Model

The way these platforms provision and manage compute resources represents another fundamental architectural difference with significant implications for how you develop, deploy, and pay for data applications.

3.2.1. Snowflake’s virtual warehouses vs. Databricks clusters

Snowflake’s compute model is built around virtual warehouses—independent compute clusters that can be sized (XS through 6XL), scaled, and suspended as needed. Each warehouse operates independently, with its own resources, cache, and workload. This isolation provides clear performance boundaries and straightforward cost attribution.

Databricks, meanwhile, uses a cluster-based approach inherited from its Spark foundations. These clusters consist of driver and worker nodes that operate as a unified computing environment. While Databricks has introduced serverless SQL warehouses that behave more like Snowflake’s virtual warehouses, its core compute model remains centered around these more general-purpose clusters.

A critical distinction is that Snowflake’s warehouses are primarily accessed through SQL, while Databricks clusters support multiple programming models (SQL, Python, Scala, R) directly within the same compute environment.

3.2.2. Elasticity and scaling behaviors

Both platforms offer elasticity, but implement it differently:

Snowflake:

- Scales out through multi-cluster warehouses (adding clusters to a warehouse) or up through resizing (XS → 6XL)

- Each size increment doubles both resources and cost

- Automatic scaling based on concurrent queries (Enterprise+ editions)

- Automatic suspension when idle (configurable timeout)

- Clear isolation between warehouse resources

Databricks:

- Scales by adding or removing worker nodes to clusters

- More granular scaling increments

- Autoscaling based on workload

- Cluster policies for governance

- Resources potentially shared across sessions

In practice, Snowflake’s model tends to provide more predictable performance for concurrent query workloads, while Databricks offers more flexibility for mixed computational patterns, especially those involving iterative processing or machine learning.
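The “each size increment doubles both resources and cost” rule has a useful consequence that is easy to check with arithmetic: if a query speeds up perfectly with each size step, a larger warehouse costs the same and simply finishes sooner. If it scales less well, the larger warehouse costs more. The sketch below assumes 1 credit per hour for XS and ignores minimum billing increments; the `speedup_per_step` parameter is a modeling assumption of mine, not a Snowflake setting.

```python
SIZES = ["XS", "S", "M", "L", "XL", "2XL", "3XL", "4XL", "5XL", "6XL"]


def credits_per_hour(size):
    """XS is taken as 1 credit/hour; each step up doubles the rate."""
    return 2 ** SIZES.index(size)


def query_credits(size, runtime_on_xs_s, speedup_per_step):
    """Credits for one query, given how much faster each size step makes it.

    speedup_per_step=2.0 models perfect scaling (runtime halves per step);
    values below 2.0 model queries that parallelize less well.
    """
    steps = SIZES.index(size)
    runtime_s = runtime_on_xs_s / (speedup_per_step ** steps)
    return credits_per_hour(size) * runtime_s / 3600


# A query that takes one hour on XS, run on a Large warehouse instead.
perfect = query_credits("L", runtime_on_xs_s=3600, speedup_per_step=2.0)
imperfect = query_credits("L", runtime_on_xs_s=3600, speedup_per_step=1.5)
```

With perfect scaling the query costs 1 credit on either size. At a 1.5x speedup per step it costs roughly 2.4 credits on Large, so the faster result comes at more than double the price.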

3.2.3. Resource isolation approaches

Resource management philosophy differs significantly between the platforms:

Snowflake enforces strong isolation between virtual warehouses—each operates with dedicated resources and cannot impact the performance of others. This approach simplifies performance tuning and troubleshooting but may result in lower overall resource utilization.

Databricks allows more shared resource utilization, especially within clusters, which can improve efficiency but introduces more complexity in performance management. The platform offers both shared clusters and single-user clusters depending on isolation requirements.

This difference has tangible implications for how organizations structure their compute environments and manage multi-tenant workloads.

3.3. Services Layer

Beyond storage and compute, both platforms provide various services that handle metadata, security, and orchestration—often referred to as the “brain” of the system.

3.3.1. Metadata handling differences

Metadata management represents a significant architectural distinction:

Snowflake employs a centralized metadata layer that maintains information about databases, schemas, tables, views, and security policies. This approach provides consistency and enables Snowflake’s governance capabilities, including its unique data sharing features.

Databricks historically relied on the Hive Metastore for metadata management but has introduced Unity Catalog as a more comprehensive governance solution. Unity Catalog provides a centralized way to manage metadata across workspaces, offering similar capabilities to Snowflake’s approach but with a different implementation strategy.

3.3.2. Security and governance comparison

Both platforms offer robust security models with some philosophical differences:

Snowflake:

- Role-based access control (RBAC) with hierarchical roles

- Object-level security and row/column-level security

- Data masking and tokenization

- Always-on encryption

- Native data classification capabilities

Databricks:

- Unity Catalog with centralized permissions

- Table access control and dynamic views

- Credential passthrough options

- Enterprise security features (SCIM, SSO)

- Audit logging and compliance reporting

Organizations with complex security requirements can achieve their objectives with either platform, though the implementation paths differ significantly.

3.3.3. Administrative overhead comparison

A final consideration is the administrative effort required to maintain each platform:

Snowflake generally requires less ongoing administration due to its fully managed nature. Most administration focuses on managing access controls, monitoring costs, and optimizing queries rather than infrastructure management.

Databricks typically demands more hands-on administration, particularly around cluster configuration, pool management, and workspace organization. While Databricks has introduced more automation over time, it still retains more of its “platform” heritage compared to Snowflake’s “as-a-service” approach.

This difference in administrative overhead can significantly impact total cost of ownership, particularly for organizations with limited dedicated data engineering resources.

In the next section, I’ll explore how these architectural differences translate into concrete cost structures and pricing models—revealing why understanding architecture is essential for effective cost optimization on either platform.

4. Cost Structure Analysis

Understanding the nuanced cost structures of both platforms is essential for making informed decisions and avoiding unexpected expenses. Having worked with organizations that use both platforms, I’ve observed that the largest cost overruns typically stem not from the platform choice itself, but from misalignment between architecture decisions and pricing models.

4.1. Pricing Models

Snowflake and Databricks employ fundamentally different approaches to pricing, reflecting their architectural differences and business philosophies.

4.1.1. Snowflake: Credit-based pricing explained

Snowflake uses a credit-based consumption model where all compute activities consume credits at rates that vary based on several factors:

- Warehouse size: Each size increment (XS, S, M, L, XL, etc.) doubles both the compute resources and the credit consumption rate

- Edition: Standard, Enterprise, Business Critical, and VPS editions have different per-credit costs

- Regional variations: Credit costs vary by cloud provider and region

- Minimum charges: Warehouses are billed per second, with a 60-second minimum each time a warehouse starts or resumes

The credit system provides a unified currency across Snowflake’s platform, with credits consumed not just by virtual warehouses but also by serverless features like Snowpipe, materialized views, and search optimization.

A key consideration is that Snowflake bills for actual runtime rather than provisioned capacity: when a warehouse is suspended, it consumes no compute credits. This model rewards efficient utilization and proper auto-suspend and auto-resume configuration.
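To see why suspension settings matter so much, it helps to work through what a single short query actually costs once the 60-second minimum and the idle time before suspension are included. The sketch below is a simplified model under stated assumptions: one query per resume cycle, and the warehouse stays up (and billed) for the full idle timeout after the query finishes. The function names are hypothetical.

```python
def billed_seconds(run_seconds, auto_suspend_seconds=0):
    """Seconds billed for one resume, run, suspend cycle.

    The warehouse keeps billing through the idle timeout after the last
    query, and every resume is charged for at least 60 seconds.
    """
    return max(60, run_seconds + auto_suspend_seconds)


def cycle_credits(credits_per_hour, run_seconds, auto_suspend_seconds=0):
    """Credits consumed by one cycle at the given hourly credit rate."""
    return credits_per_hour * billed_seconds(run_seconds, auto_suspend_seconds) / 3600


# A 5-second query on a warehouse consuming 4 credits/hour.
lazy_suspend = cycle_credits(4, run_seconds=5, auto_suspend_seconds=600)
tight_suspend = cycle_credits(4, run_seconds=5, auto_suspend_seconds=60)
```

In this model the same 5-second query is billed for 605 seconds with a 10-minute timeout and 65 seconds with a 1-minute timeout, roughly a ninefold difference. The trade-off is that a suspended warehouse loses its local cache, so very aggressive timeouts can slow down the next query.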

4.1.2. Databricks: DBU-based pricing across workload types

Databricks uses a conceptually similar but distinctly implemented consumption model based on Databricks Units (DBUs):

- Workload type: All-Purpose Compute, Jobs Compute, and SQL warehouses (Classic, Pro, and Serverless) each have different DBU rates

- Regional variation: Costs vary by cloud provider and region

- Cluster configuration: DBU consumption depends on instance types and cluster sizing

- Pricing tiers: Standard, Premium, and Enterprise tiers with different capabilities and rates

Unlike Snowflake’s uniform credit system, Databricks’ DBU rates vary significantly based on workload type. All-Purpose Compute is typically more expensive per DBU than Jobs Compute, which is intended for automated, scheduled workloads.

Additionally, for classic (non-serverless) compute, the underlying cloud instances are billed separately by the cloud provider on top of the DBU charges, which means proper cluster configuration and autoscaling are critical for cost management.
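Because a classic cluster generates two separate bills, it can help to model them side by side. The sketch below is only an illustration: every rate in it is a made-up placeholder chosen for easy arithmetic, not a published Databricks or cloud provider price, and `classic_cluster_cost` is a hypothetical helper.

```python
def classic_cluster_cost(nodes, hours, dbu_per_node_hour, usd_per_dbu,
                         instance_usd_per_hour):
    """Return (dbu_cost, instance_cost, total) for one classic cluster run."""
    dbu_cost = nodes * hours * dbu_per_node_hour * usd_per_dbu
    instance_cost = nodes * hours * instance_usd_per_hour
    return dbu_cost, instance_cost, dbu_cost + instance_cost


# Same 4-node, 2-hour workload under two placeholder DBU prices.
# The only difference is the per-DBU rate for the workload type.
all_purpose = classic_cluster_cost(4, 2, dbu_per_node_hour=1.0,
                                   usd_per_dbu=0.5, instance_usd_per_hour=0.25)
jobs = classic_cluster_cost(4, 2, dbu_per_node_hour=1.0,
                            usd_per_dbu=0.25, instance_usd_per_hour=0.25)
```

With these placeholder numbers the instance bill is identical in both cases, so moving a scheduled workload from an all-purpose cluster to a job cluster only reduces the DBU portion. Plug in your own contract rates before drawing conclusions.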

4.1.3. On-demand vs. capacity/commitment pricing options

Both platforms offer options that balance flexibility against cost:

Snowflake:

- On-demand: Pay-as-you-go with no commitments

- Capacity: Pre-purchased credits at discounted rates (typically 20-30% savings)

- Business Critical/VPS: Higher per-credit costs for advanced features

- Upfront annual commitments: Deeper discounts for longer-term contracts

Databricks:

- Pay-as-you-go: Consumption-based with no commitments

- Committed Use: Discounted rates for committed DBU consumption

- Premium/Enterprise Tiers: Higher rates but with additional capabilities

- Reserved instances: Potential savings through cloud provider reserved instances

Organizations typically optimize costs by using capacity models for predictable baseline workloads while maintaining flexibility for variable or seasonal demands.
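A quick way to reason about commitments is to find the utilization at which a prepaid block breaks even with on-demand pricing. The sketch below makes several simplifying assumptions that you should check against your own contract: unused committed credits are lost, overage is billed at the on-demand rate, and the price and discount values are placeholders.

```python
def on_demand_cost(used_credits, price_per_credit):
    """Cost of paying as you go for every credit used."""
    return used_credits * price_per_credit


def capacity_cost(used_credits, committed_credits, price_per_credit, discount):
    """Cost with a prepaid commitment plus on-demand overage (assumed)."""
    prepaid = committed_credits * price_per_credit * (1 - discount)
    overage = max(0, used_credits - committed_credits) * price_per_credit
    return prepaid + overage


PRICE = 2.0       # placeholder price per credit
DISCOUNT = 0.25   # within the 20-30% range mentioned above
COMMITTED = 1000  # credits prepaid for the period

low_use = (on_demand_cost(700, PRICE), capacity_cost(700, COMMITTED, PRICE, DISCOUNT))
high_use = (on_demand_cost(800, PRICE), capacity_cost(800, COMMITTED, PRICE, DISCOUNT))
```

Under these assumptions the break-even point is a utilization of `1 - discount`: at 70% utilization on-demand is cheaper (1,400 vs. 1,500), while at 80% the commitment wins (1,600 vs. 1,500). That is why committing only the predictable baseline tends to be the safer choice.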

4.2. Hidden Cost Factors

Beyond the obvious compute and storage charges, both platforms have less visible cost factors that can significantly impact total expense.

4.2.1. Storage costs and compression efficiencies

Snowflake:

- Charges for compressed data after applying columnar compression

- Time Travel retention (default: 1 day, configurable up to 90 days on Enterprise Edition and above) increases storage costs

- Fail-safe period (7 days, non-configurable) adds to storage footprint

- Clones initially consume minimal additional storage but diverge over time

Databricks:

- Storage costs based on underlying cloud provider (S3, ADLS, GCS)

- Delta Lake versioning consumes additional storage depending on retention policy

- No built-in equivalent to Fail-safe, though cloud providers may offer their own recovery mechanisms

- Storage consumption heavily influenced by file size, partitioning, and optimization frequency

Organizations should monitor storage costs carefully, as they typically grow continuously while compute costs fluctuate with usage.
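For a rough sense of how retention settings inflate the Snowflake storage footprint, a simple back-of-the-envelope model is often enough. The sketch below assumes that a fixed volume of data is changed or deleted each day and that every changed byte is retained for the full Time Travel window plus the 7-day Fail-safe period. Real usage depends on how updates rewrite micro-partitions, so treat this as an approximation; the function name and inputs are hypothetical.

```python
FAIL_SAFE_DAYS = 7  # fixed period for permanent tables, per the list above


def estimated_storage_tb(active_tb, daily_churn_tb, time_travel_days=1):
    """Approximate billable storage: live data plus retained old versions."""
    retained_days = time_travel_days + FAIL_SAFE_DAYS
    return active_tb + daily_churn_tb * retained_days


# 10 TB of live data with 0.5 TB changed per day (illustrative inputs).
default_retention = estimated_storage_tb(10, 0.5, time_travel_days=1)
max_retention = estimated_storage_tb(10, 0.5, time_travel_days=90)
```

With these inputs the footprint grows from about 14 TB at the default retention to about 58.5 TB at the 90-day maximum, which shows why long retention is best reserved for the tables that genuinely need it.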

4.2.2. Data transfer and egress charges

Data movement represents a frequently overlooked cost dimension:

Snowflake:

- No charges for data movement within the same region

- Cross-region transfer incurs costs

- Data sharing between Snowflake accounts in the same region is free

- External exports may trigger cloud provider egress charges

Databricks:

- Subject to cloud provider cross-region transfer fees

- Data movement between Databricks workspaces incurs charges

- External query federation may trigger additional costs

- Delta Sharing introduces additional cost considerations

Organizations with multi-region architectures should pay particular attention to these charges, as they can accumulate rapidly with large-scale data movement.

4.2.3. Serverless feature billing differences

Both platforms offer serverless capabilities that bill differently from their core compute models:

Snowflake:

- Snowpipe (data ingestion)

- Materialized Views (automated refreshes)

- Search Optimization Service

- Snowflake Streams

- External Functions

Databricks:

- Auto Loader

- Delta Live Tables

- Workflows

- Databricks SQL Serverless

- Model Serving

These serverless features often operate continuously in the background, creating a baseline cost that persists even when interactive analysis is minimal. Proper governance of these features is essential for cost control.

4.2.4. Operational overhead costs

Beyond direct platform charges, consider:

- Administrative overhead (staff time for platform management)

- Learning curve and training requirements

- Integration with existing tools and workflows

- Development time for optimizations and cost controls

- Monitoring and observability solutions

While harder to quantify, these factors contribute significantly to total cost of ownership. Organizations often underestimate these costs when focusing solely on direct platform charges.

4.3. Cost Scaling Patterns

Understanding how costs scale with different variables helps predict future expenses as usage evolves.

4.3.1. Scaling with data volume

As data volumes grow:

Snowflake:

- Storage costs scale linearly with data volume (after compression)

- Compute costs may increase sub-linearly due to pruning and caching efficiencies

- Time Travel and Fail-safe costs grow proportionally with data volume

Databricks:

- Raw storage costs scale linearly

- Compute costs for scanning data can be mitigated through optimization

- Proper partitioning becomes increasingly important at scale

The rate of storage growth relative to query patterns significantly influences the long-term cost trajectory.

4.3.2. Scaling with user counts

As user populations expand:

Snowflake:

- Costs scale primarily with concurrent query workloads

- Multi-clustering can manage concurrency efficiently

- User-based features (e.g., Snowsight) may have separate pricing

Databricks:

- SQL warehouse costs scale with concurrent users

- Workspace management adds complexity at scale

- Shared cluster models may offer economies of scale

Organizations should consider not just raw user counts but usage patterns and concurrency requirements when forecasting growth.

4.3.3. Scaling with query complexity

As analytical requirements grow more sophisticated:

Snowflake:

- Complex queries may require larger warehouses

- Materialized views can optimize repetitive complex queries

- Caching benefits diminish with highly variable query patterns

Databricks:

- Complex processing may benefit from specialized cluster configurations

- Delta Engine optimizations help mitigate complexity impact

- Photon acceleration shows particular benefits for complex workloads

Understanding your query complexity trajectory helps anticipate future compute requirements and optimize accordingly.
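For Snowflake, the trade-off between warehouse size and runtime can be worked through with simple arithmetic. The sketch below relies on the standard credit rates, which double with each warehouse size. The price per credit is a hypothetical placeholder, the size keys are shorthand labels, and the 60-second minimum charge on resume is ignored for simplicity.

```python
# Credits consumed per hour by standard warehouse size (doubles at each step).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def query_cost(size, runtime_seconds, price_per_credit=3.0):
    """Approximate cost of keeping a warehouse busy for one query.

    price_per_credit is a hypothetical figure; actual rates depend on
    edition, region, and contract.
    """
    credits = CREDITS_PER_HOUR[size] * runtime_seconds / 3600
    return credits * price_per_credit
```

A query that takes 600 seconds on a Medium warehouse and 300 seconds on a Large one costs the same in this model. Sizing up only raises cost when runtime falls by less than half, which is typical of queries that do not parallelize well.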

4.4. Real-World Cost Scenarios

To make these concepts concrete, let’s examine representative scenarios based on my experience with organizations of different sizes.

4.4.1. Small business implementation (~1TB)

For startups or small analytics teams with moderate data volumes:

Scenario: 1TB of data, 5-10 concurrent users, primarily BI workloads with some data science

Snowflake approach:

- Small/Medium warehouses for interactive queries

- Auto-suspension set to aggressive thresholds

- Standard Edition typically sufficient

- Monthly cost range: $2,000-5,000

Databricks approach:

- SQL warehouses for BI users

- Shared all-purpose clusters for data science

- Standard tier usually adequate

- Monthly cost range: $1,800-6,000

At this scale, implementation differences and team expertise often influence costs more than platform selection.

4.4.2. Mid-market deployment (~10TB)

For growing organizations with diverse analytical needs:

Scenario: 10TB of data, 20-50 users, mixed BI/ETL/data science workloads

Snowflake approach:

- Dedicated warehouses for different workload types

- Enterprise Edition for improved concurrency

- Capacity pricing becomes advantageous

- Monthly cost range: $10,000-25,000

Databricks approach:

- Job clusters for ETL workloads

- SQL warehouses for analysts

- All-purpose clusters for data scientists

- Monthly cost range: $12,000-30,000

At this scale, workload segmentation and capacity planning become critical cost factors.

4.4.3. Enterprise deployment (~100TB+)

For large organizations with sophisticated data ecosystems:

Scenario: 100TB+ data, 100+ users, complex analytical ecosystem

Snowflake approach:

- Multi-cluster warehouses for high concurrency

- Resource monitors for cost governance

- Business Critical features for sensitive workloads

- Monthly cost range: $50,000-250,000+

Databricks approach:

- Workspace and pool segmentation for governance

- Premium/Enterprise tier for advanced security

- Extensive automation and optimization

- Monthly cost range: $60,000-300,000+

At enterprise scale, sophisticated cost governance, workload optimization, and architectural decisions drive the cost structure more than base platform fees.
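One way to compare the three scenarios above is to normalize the quoted monthly ranges by data volume. This sketch uses only the figures stated in the scenarios. The enterprise tier is entered as exactly 100 TB with its stated upper bound, although the text gives both as open-ended ("100TB+", "$250,000+"), so its per-TB values are rough.

```python
# Monthly cost ranges (USD) copied from the scenarios in this section.
SCENARIOS = {
    "small": {"tb": 1, "Snowflake": (2_000, 5_000), "Databricks": (1_800, 6_000)},
    "mid_market": {"tb": 10, "Snowflake": (10_000, 25_000), "Databricks": (12_000, 30_000)},
    "enterprise": {"tb": 100, "Snowflake": (50_000, 250_000), "Databricks": (60_000, 300_000)},
}


def cost_per_tb(scenario_name, platform):
    """Return the (low, high) monthly cost per TB for one scenario."""
    scenario = SCENARIOS[scenario_name]
    low, high = scenario[platform]
    return low / scenario["tb"], high / scenario["tb"]


if __name__ == "__main__":
    for name in SCENARIOS:
        for platform in ("Snowflake", "Databricks"):
            low, high = cost_per_tb(name, platform)
            print(f"{name:<11} {platform:<10} ${low:,.0f} to ${high:,.0f} per TB")
```

On these numbers, the low end of the Snowflake range falls from $2,000 per TB at 1 TB to $500 per TB at 100 TB. The high end stays near $2,500 per TB, which matches the point that governance and optimization drive cost at enterprise scale.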

4.4.4. ML/AI workload cost considerations

For organizations focused on machine learning:

Scenario: Training and deploying models on large datasets

Snowflake considerations:

- Larger warehouses needed for feature engineering

- Snowpark for Python enables in-database ML

- External model integration may require data movement

Databricks considerations:

- Native support for distributed ML training

- MLflow for model management

- GPU clusters available for deep learning

- Delta Lake for feature stores

ML workloads often show more pronounced cost differences between platforms, with Databricks typically offering more native capabilities for complex ML pipelines.

In the next section, we’ll examine performance benchmarks across these same workload types to complement our cost analysis with performance considerations.

5. Performance Benchmarks

After examining cost structures, it’s equally important to understand performance differences between these platforms. In my work with both Databricks and Snowflake, I’ve observed that performance characteristics vary significantly depending on workload types, data volumes, and query patterns. Rather than declaring one platform universally “faster,” this section breaks down performance by specific workload categories.

5.1. Query Performance

The most direct comparison between platforms typically focuses on SQL query performance, an area where both have invested heavily but which they approach differently.

5.1.1. Data loading benchmarks

Data ingestion capabilities represent a foundational performance consideration:

Snowflake:

- COPY command provides highly parallelized loading

- Snowpipe offers continuous, serverless data ingestion

- External tables enable querying without explicit loading

- Performance scales with warehouse size

- Typically achieves 1-2 TB/hour per warehouse node (XL)

Databricks:

- Auto Loader provides incremental file ingestion

- Delta Live Tables for streaming ETL

- COPY INTO for bulk loading

- Performance scales with cluster size and configuration

- Typically achieves 1-5 TB/hour depending on configuration

Both platforms can achieve similar maximum throughput, but Databricks often requires more configuration to reach optimal performance while Snowflake provides more predictable scaling with warehouse size.
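The throughput ranges above translate directly into load-time estimates. The sketch below reads the Snowflake figure as the throughput of a single X-Large warehouse, which is an interpretation of the text, and assumes throughput holds steady for the whole load.

```python
# Throughput ranges in TB/hour, taken from the figures quoted above.
SNOWFLAKE_XL = (1.0, 2.0)
DATABRICKS = (1.0, 5.0)


def load_hours(data_tb, throughput_range):
    """Return (best_case_hours, worst_case_hours) for a bulk load."""
    slowest, fastest = throughput_range
    return data_tb / fastest, data_tb / slowest
```

For a 10 TB initial load this gives 5 to 10 hours on Snowflake and 2 to 10 hours on Databricks. The wider Databricks range reflects its greater sensitivity to configuration.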

5.1.2. Analytical query performance

For analytical workloads, performance differences emerge based on query type:

Simple aggregations and filters:

- Both platforms show excellent performance

- Snowflake’s micro-partitioning enables efficient pruning

- Databricks’ Delta Engine provides similar capabilities

- Results typically comparable with proper optimization

Complex joins and window functions:

- Snowflake’s optimizer excels with complex SQL constructs

- Databricks’ Photon engine has significantly improved SQL performance

- Snowflake typically maintains more consistent performance across query complexity levels

- Databricks may require more query optimization for complex joins

Common patterns observed:

- Snowflake often performs better “out of the box” for SQL-heavy workloads

- Databricks can achieve comparable or superior performance with tuning

- Query performance on both platforms improves dramatically with proper optimization

Performance differences typically range from negligible to 2-3x depending on the specific query pattern and optimization level.

5.1.3. Concurrency handling

A critical differentiator emerges when handling multiple simultaneous queries:

Snowflake:

- Multi-cluster warehouses handle concurrency through parallel resources

- Consistent performance as concurrency increases (with proper sizing)

- Result caching reduces redundant computation

- Clear isolation between workloads

Databricks:

- SQL warehouses scale with concurrent users

- Cluster pools support concurrency for notebook workloads

- Potential resource contention in heavily concurrent scenarios

- More complex configuration required for optimal concurrency

Organizations with high concurrency requirements typically find Snowflake’s model more straightforward to implement, while Databricks requires more careful planning but can achieve similar results with proper configuration.

5.1.4. Cold start performance

The time to begin processing from an idle state differs significantly:

Snowflake:

- Warehouses resume in 1-2 seconds

- Query execution begins almost immediately after resume

- Consistent startup performance across warehouse sizes

Databricks:

- SQL warehouses start in 10-30 seconds

- Cluster startup can take 2-5+ minutes depending on configuration

- Serverless SQL warehouses and instance pools reduce startup latency, but with some limitations

This difference is particularly relevant for interactive workloads with inconsistent usage patterns. Organizations with continuous processing tend to be less affected by these differences than those with sporadic, on-demand analytics.
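To see why sporadic workloads feel this most, consider the share of a user's wait that is startup rather than query execution. The startup ranges below come from the figures above, with "2-5+ minutes" entered as 120 to 300 seconds. The 5-second query duration used in the note afterwards is a hypothetical example.

```python
# Startup times in seconds (low, high), from the ranges quoted above.
COLD_START_SECONDS = {
    "Snowflake warehouse": (1, 2),
    "Databricks SQL warehouse": (10, 30),
    "Databricks cluster": (120, 300),
}


def cold_start_share(query_seconds, startup_seconds):
    """Fraction of the end-to-end wait that is spent on startup."""
    return startup_seconds / (startup_seconds + query_seconds)


if __name__ == "__main__":
    for name, (low, high) in COLD_START_SECONDS.items():
        print(f"{name}: {cold_start_share(5, low):.0%} to "
              f"{cold_start_share(5, high):.0%} of the wait is startup")
```

For a 5-second query, a 1-second resume accounts for about 17% of the wait, while a 30-second start accounts for about 86%. Once compute is already warm, the share drops to zero on both platforms, which is why continuous workloads are less affected.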

5.2. Data Engineering Workloads

Data transformation pipelines represent another important performance dimension.

5.2.1. ETL/ELT performance comparison

For data engineering workloads:

Snowflake:

- SQL-centric transformations show excellent performance

- Stored procedures and user-defined functions extend capabilities

- Snowpark brings programmatic transformations within the platform

- Scales linearly with warehouse size

Databricks:

- Native support for complex transformation logic in multiple languages

- Optimized for large-scale data processing

- Delta Live Tables provides declarative pipeline development

- More flexibility for complex transformation logic

The performance gap is typically narrower for simple transformations and widens for complex processing that benefits from Databricks’ Spark foundation.

5.2.2. Data transformation efficiency

Looking at resource efficiency for transformation workloads:

Snowflake:

- Highly efficient for SQL-based transformations

- Scaling is predictable with warehouse size

- Tasks and stored procedures enhance automation

- Less efficient for iterative or procedural transformations

Databricks:

- Optimized for complex, multi-stage transformations

- Photon engine improves SQL performance

- Delta Lake provides ACID guarantees for reliable pipelines

- Particularly efficient for ML feature engineering

Organizations with primarily relational transformations often find Snowflake more straightforward, while those with complex transformation logic may benefit from Databricks’ flexibility.

5.2.3. Streaming data handling

Real-time data processing capabilities differ substantially:

Snowflake:

- Snowpipe Streaming for continuous ingestion

- Snowflake Streams for CDC and incremental processing

- SQL-based processing model

- Lower throughput but simpler implementation

Databricks:

- Native Spark Streaming integration

- Structured Streaming with exactly-once guarantees

- Delta Live Tables for declarative streaming

- Higher potential throughput with more complex configuration

For organizations with significant streaming requirements, Databricks typically offers more native capabilities and higher throughput, while Snowflake provides a simpler implementation model for moderate volumes.

5.3. Machine Learning/AI Workloads

Perhaps the most significant performance differences emerge in advanced analytics workloads.

5.3.1. Model training performance

For developing machine learning models:

Snowflake:

- Snowpark for Python enables in-database ML

- Integration with scikit-learn, XGBoost, etc.

- Limited distributed training capabilities

- Best suited for moderate-scale ML use cases

Databricks:

- Native support for distributed ML frameworks

- Deep integration with popular ML libraries

- GPU support for accelerated training

- MLflow for experiment tracking and model management

Databricks has a clear advantage for large-scale ML training, particularly for complex models that benefit from distributed processing or GPU acceleration.

5.3.2. Inference serving capabilities

For deploying models to production:

Snowflake:

- Snowpark Container Services for model deployment

- UDFs for simpler models

- External integrations for more complex serving needs

- Lower latency for SQL-integrated predictions

Databricks:

- Model Serving for real-time inference

- MLflow model registry for versioning

- Feature store for consistent feature serving

- More comprehensive ML operations capabilities

The performance gap depends heavily on model complexity and serving requirements, with Databricks offering more native capabilities for sophisticated ML deployments.

5.3.3. LLM and GenAI support

For next-generation AI workloads:

Snowflake:

- Cortex AI functions for GenAI applications

- Document AI for unstructured data processing

- Snowflake Arctic models, with Cortex Search for vector and hybrid search

- Integrations with major AI providers

Databricks:

- Dolly and other foundation models

- Vector search indexes built over Delta tables

- MLflow AI Gateway for model access

- Native fine-tuning capabilities

Both platforms are rapidly evolving their GenAI capabilities, with Databricks currently offering more native support for model development and Snowflake focusing on enterprise integration and governance.

When evaluating performance, it’s essential to consider not just raw benchmark numbers but also implementation complexity, maintenance requirements, and alignment with team skills. The “fastest” platform on paper may not deliver the best real-world performance if it requires expertise your team doesn’t possess or introduces operational complexity that slows overall progress.

In my experience, organizations achieve the best results by aligning platform selection with their dominant workload types and team capabilities rather than focusing solely on maximum theoretical performance. The next section explores how these performance characteristics intersect with cloud integration capabilities and ecosystem considerations.

6. Cloud Integration & Ecosystem

The value of a data platform extends beyond its core capabilities to include how well it integrates with cloud providers, third-party tools, and the broader data ecosystem. Having worked with organizations implementing both Snowflake and Databricks across various cloud environments, I’ve observed that integration capabilities often become decisive factors in platform selection.

6.1. Multi-Cloud Capabilities

Both platforms offer multi-cloud support, but with distinct approaches and limitations that impact deployment decisions.

6.1.1. AWS/Azure/GCP support differences

Snowflake:

- Available on AWS, Azure, and GCP

- Consistent experience across cloud providers

- Independent pricing by region/cloud

- Managed service abstracts cloud provider details

- Replication across clouds supported natively

Databricks:

- Available on AWS, Azure, and GCP

- Minor interface and feature differences between clouds

- Separate workspace management across clouds

- More direct exposure to underlying cloud infrastructure

- Replication requires custom implementation

Snowflake’s approach provides higher abstraction from the underlying cloud infrastructure, which simplifies multi-cloud management but reduces access to cloud-native capabilities. Databricks maintains closer integration with each cloud’s native services but requires more cloud-specific knowledge.

6.1.2. Cross-cloud data sharing

Data movement across cloud boundaries represents a critical consideration for multi-cloud organizations:

Snowflake:

- Native data sharing across regions and clouds

- Secure data sharing without data movement

- Data Exchange and Marketplace for broader sharing

- Cross-cloud direct queries (preview)

- Consistent governance across environments

Databricks:

- Delta Sharing for external table access

- Unity Catalog for consistent governance

- Requires data movement for full cross-cloud access

- More complex to implement cross-cloud pipelines

- Leverages cloud-native transfer mechanisms

Snowflake’s data sharing capabilities represent one of its most distinctive advantages, particularly for organizations operating across multiple clouds or with external partners. Databricks has made significant progress in this area but still requires more implementation effort for similar capabilities.

6.1.3. Regional availability

Geographic distribution affects compliance requirements and data sovereignty:

Snowflake:

- 30+ regions across AWS, Azure, and GCP

- Consistent feature availability across most regions

- Private deployment options for regulated industries

- Region-to-region replication capabilities

- New regions typically available shortly after cloud provider availability

Databricks:

- Available in most major cloud regions

- Some feature limitations in newer or smaller regions

- Regional deployment requirements for regulated industries

- Manual replication between regions

- Typically follows cloud provider regional expansion

Organizations with global operations or specific regional compliance requirements should verify current availability for both platforms, as geographic coverage continues to expand rapidly.

6.2. Tool & Partner Ecosystem

Beyond cloud integration, connectivity with the broader analytics ecosystem significantly impacts implementation success and long-term flexibility.

6.2.1. BI tool integration

Connectivity with business intelligence and visualization tools:

Snowflake:

- JDBC/ODBC drivers for all major BI tools

- Native connectors for Tableau, Power BI, Looker, etc.

- Optimized performance with partner-certified connectors

- Snowsight for native visualization

- Strong partner certification program

Databricks:

- JDBC/ODBC connectivity for BI tools

- Native connectors for major platforms

- SQL warehouses (formerly SQL endpoints) for direct connections

- Databricks SQL for native visualization

- Growing but still maturing partner ecosystem

Both platforms work well with popular BI tools, though Snowflake typically offers more mature and optimized connectivity with the broadest range of visualization platforms. Databricks has made significant progress in this area, particularly for tools that support JDBC connections.

6.2.2. Data governance tool support

Integration with data catalogs and governance platforms:

Snowflake:

- Native data classification and tagging

- Object tagging and discovery

- Access history and governance reporting

- Partner integrations with Alation, Collibra, etc.

- Data lineage through partner tools

Databricks:

- Unity Catalog for governance

- Native lineage tracking

- Column-level lineage (in development)

- Partner integrations with governance platforms

- Open metadata framework support

Both platforms recognize the importance of governance in enterprise deployments and have invested heavily in this area. Snowflake tends to have more mature partner integrations, while Databricks has focused on building native capabilities through Unity Catalog.

6.2.3. Third-party extensions and marketplace

The availability of pre-built solutions and integrations:

Snowflake:

- Snowflake Marketplace with 1,000+ data products

- Native apps framework

- Partner Connect for simplified onboarding

- UDF/UDTF extensibility

- Growing ecosystem of Snowpark accelerators

Databricks:

- Partner Connect ecosystem

- Databricks Marketplace (emerging)

- Extensive library of notebooks and solutions

- MLflow model registry and sharing

- Deep integration with open-source frameworks

Snowflake has established a more comprehensive marketplace ecosystem, particularly for data products and turnkey integrations. Databricks leverages its strong connection to the open-source community for extensions and accelerators.

When evaluating ecosystem integration, consider not just current requirements but future needs as your data strategy evolves. Organizations often underestimate the importance of ecosystem connectivity until they encounter integration challenges that impede business agility.

My experience suggests that organizations with heterogeneous technology environments and complex integration requirements often find value in Snowflake’s broader partner ecosystem. Meanwhile, organizations deeply invested in open-source technologies and advanced analytics often benefit from Databricks’ tighter integration with those communities.

Integration capabilities continue to evolve rapidly for both platforms, with competitive pressure driving expansion into each other’s traditional strengths. This convergence benefits customers but also increases the importance of regularly reassessing integration capabilities as part of your platform strategy.

7. Minimum Efficient Scale Analysis

One of the most frequent questions I receive from organizations evaluating these platforms is: “At what scale does each platform become cost-effective?” This question reflects the reality that the economics of data platforms shift dramatically depending on workload size, usage patterns, and organizational maturity. Drawing from my experience with implementations across organizations of all sizes, this section explores the concept of minimum efficient scale for both platforms.

7.1. Cost-effectiveness thresholds by platform

The minimum efficient scale—the point at which average costs reach their lowest level—varies significantly between Snowflake and Databricks based on several factors.

Snowflake’s efficiency curve:

- Small-scale operations (< 1TB, lightweight queries): Snowflake’s per-second billing (with a 60-second minimum each time a warehouse resumes) and fast auto-suspend make it cost-effective even for small, sporadic workloads. The platform’s minimal administrative overhead also helps smaller teams achieve value quickly.

- Mid-scale operations (1-10TB, mixed workloads): As data volumes and query complexity increase, Snowflake’s optimization becomes more important. At this scale, organizations typically need to implement more disciplined warehouse sizing and usage patterns.

- Large-scale operations (10TB+, complex ecosystem): At larger scales, Snowflake’s costs can grow substantially if not carefully managed. However, the platform’s elasticity and multi-clustering capabilities allow well-optimized implementations to maintain cost efficiency.

Databricks’ efficiency curve:

- Small-scale operations (< 1TB, lightweight queries): Databricks typically has a higher minimum operational cost due to cluster startup times and baseline configurations. Small or sporadic workloads may face challenges achieving cost-effectiveness.

- Mid-scale operations (1-10TB, mixed workloads): As scale increases, Databricks begins to demonstrate improved economics. Organizations that fully leverage its capabilities for diverse workloads start to see better returns on investment.

- Large-scale operations (10TB+, complex ecosystem): At scale, particularly for organizations with significant data science and ML workloads, Databricks often shows strong cost-performance characteristics due to its optimization capabilities and unified platform approach.

The inflection points where each platform delivers optimal value depend heavily on workload characteristics beyond just data volume.
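The efficiency curves above can be approximated with a simple fixed-plus-variable cost model. In such a model, average cost per TB keeps falling toward the variable rate and never reaches a true minimum. The sketch therefore uses a proxy: the volume at which average cost comes within a chosen tolerance of that floor. All names and figures are hypothetical.

```python
def average_cost_per_tb(data_tb, fixed_monthly, variable_per_tb):
    """Average monthly cost per TB under cost = fixed + variable * volume."""
    return fixed_monthly / data_tb + variable_per_tb


def efficient_scale_tb(fixed_monthly, variable_per_tb, tolerance=0.10):
    """Smallest volume at which average cost is within `tolerance` of the
    variable-cost floor, i.e. where fixed / volume == tolerance * variable.
    """
    return fixed_monthly / (tolerance * variable_per_tb)
```

With a hypothetical low-overhead platform (fixed $500, variable $300 per TB) the threshold is about 17 TB. With a higher-overhead platform (fixed $3,000, variable $200 per TB) it is 150 TB. The second platform is cheaper per TB at scale but needs far more volume to amortize its baseline.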

7.2. Breakeven analysis for different workload types

Different workload types demonstrate distinct economic profiles on each platform:

Business Intelligence / SQL Analytics:

- Snowflake typically reaches cost efficiency earlier for pure SQL analytics workloads

- Predictable query patterns benefit from Snowflake’s caching and auto-suspension

- Breakeven point: Often at smaller scales (hundreds of GB to few TB)

Data Engineering / ETL:

- Mixed picture depending on transformation complexity

- Simple transformations may be more cost-effective in Snowflake

- Complex, multi-stage pipelines often more efficient in Databricks

- Breakeven point: Highly variable based on transformation complexity

Data Science / Machine Learning:

- Databricks typically reaches cost efficiency earlier for ML workloads

- Native distributed training and experiment tracking deliver significant value

- Snowflake becoming more competitive with Snowpark developments

- Breakeven point: Databricks usually more efficient at all scales for intensive ML

Streaming / Real-time Analytics:

- Databricks generally more cost-effective for high-volume streaming

- Snowflake competitive for moderate streaming with simpler processing needs

- Breakeven point: Dependent on streaming volume and processing complexity

This workload-specific analysis explains why many organizations eventually implement both platforms, using each for its areas of economic strength.
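A breakeven point between two platforms can be estimated by treating each as a fixed monthly baseline plus a variable rate per unit of work. This is a deliberately simplified sketch with hypothetical inputs. Real cost curves are rarely linear.

```python
def breakeven_volume(fixed_a, variable_a, fixed_b, variable_b):
    """Volume at which platforms A and B cost the same, assuming
    cost = fixed + variable * volume for each.

    Returns None when the two cost lines never cross at a positive volume.
    """
    if variable_a == variable_b:
        return None  # parallel lines: one platform is always cheaper
    volume = (fixed_b - fixed_a) / (variable_a - variable_b)
    return volume if volume > 0 else None
```

For example, if platform A has a $500 baseline and costs $300 per TB, and platform B has a $3,000 baseline and costs $200 per TB, the lines cross at 25 TB. Below that volume A is cheaper; above it, B is.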

7.3. Platform switching considerations

Organizations sometimes consider migrating between platforms as their needs evolve. Understanding the economic implications of switching is essential:

From Snowflake to Databricks:

- Typically motivated by growing data science and ML requirements

- Migration costs include data movement, query rewriting, and retraining

- Economic benefits often take 6-12 months to materialize

- Skills gap may require additional hiring or training

From Databricks to Snowflake:

- Usually driven by governance requirements or BI/analytics focus

- Migration involves data restructuring and ETL pipeline adjustments

- Governance improvements may deliver rapid ROI for regulated industries

- Typically easier for primarily SQL-based workloads

The costs of platform migration extend beyond the direct expenses to include opportunity costs and organizational disruption. These factors often lead organizations to adopt a hybrid approach rather than a complete platform switch.

7.4. Scaling efficiency at different organization sizes

Beyond technical workloads, organizational factors significantly influence platform economics:

Startups and small teams:

- Limited specialized expertise favors platforms with lower administrative overhead

- Resources typically constrained, making predictable costs important

- Snowflake often more accessible for teams without specialized engineering resources

- Databricks may require more technical expertise to operate efficiently

Mid-size organizations:

- Diverse workload requirements start to emerge

- Specialized roles (data engineers, scientists, analysts) develop

- Unified platforms help manage organizational complexity

- Either platform can be effective with proper implementation

Enterprise organizations:

- Complex governance and security requirements

- Multi-geography, multi-cloud considerations

- Sophisticated cost allocation and chargeback mechanisms

- Both platforms can scale to enterprise requirements with proper architecture

In my experience, organizational capability and maturity often influence platform economics more than technical workloads alone. A technically “perfect” platform for your workloads may still prove inefficient if your team lacks the expertise to optimize and manage it effectively.

When evaluating minimum efficient scale, consider both your current position and your expected trajectory. A platform that seems economically advantageous today may become less optimal as your data strategy evolves. Conversely, what appears expensive initially may deliver superior economics as your use cases mature and diversify.

Most importantly, remember that implementation quality ultimately influences cost efficiency more than platform selection. I’ve seen well-implemented Snowflake deployments outperform poorly managed Databricks environments economically—and vice versa. The principles and practices outlined in this guide can help you achieve economic efficiency regardless of which platform you select.

8. Migration Considerations

For many organizations, the question isn’t about choosing between Snowflake and Databricks for a new implementation—it’s about whether to migrate from one platform to the other or how to integrate both into their data ecosystem. Having guided numerous migration projects, I’ve found that successful transitions require careful planning around data, code, personnel, and governance.

8.1. From Snowflake to Databricks

Organizations typically consider migrating from Snowflake to Databricks when they need enhanced capabilities for data science, machine learning, or complex data processing beyond SQL analytics.

8.1.1. Migration approach and methodology

A successful migration typically follows these stages:

- Assessment: Catalog existing Snowflake objects, query patterns, and dependencies

- Architecture planning: Design the target Databricks implementation, considering both Delta Lake storage organization and compute patterns

- Data migration: Move data from Snowflake tables to Delta Lake, mapping clustering keys to partitioning or Z-ordering strategies where relevant

- Query transformation: Convert SQL queries, considering Databricks SQL dialect differences

- ETL/ELT conversion: Translate Snowflake tasks and procedures to Databricks workflows

- Testing and validation: Ensure data consistency and performance equivalence

- Cutover planning: Implement synchronization during transition period

- Training and enablement: Upskill team on Databricks concepts and tools

The effort involved varies dramatically with the size and complexity of the implementation. For simple data marts, migration might be completed in weeks; enterprise data warehouses may require 6-12 months.
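The testing and validation stage often starts with a row count and a content fingerprint for each migrated table. The sketch below works on rows already fetched into Python from each platform and is not tied to either vendor's connector. The function name and the NULL marker are assumptions.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest) for an iterable of row tuples.

    The digest ignores row order, so the same data returned in a
    different order by each platform still matches. Values are compared
    by their string form, so normalize types first (for example decimals
    and timestamps) if the platforms render them differently.
    """
    count = 0
    combined = 0
    for row in rows:
        text = "\x1f".join("<NULL>" if v is None else str(v) for v in row)
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        # Summing (rather than XOR) keeps duplicate rows from cancelling out.
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, f"{combined:064x}"
```

Comparing `table_fingerprint(source_rows)` with `table_fingerprint(target_rows)` gives a quick pass/fail signal. For very large tables, the same idea is usually pushed down into SQL aggregates on each side rather than pulling rows to a client.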

8.1.2. Cost implications

Beyond the obvious platform costs, migrations incur several expenses:

- Data transfer costs: Moving large datasets between platforms

- Professional services: Expertise often required for complex migrations

- Staff time: Internal resources dedicated to migration instead of other projects

- Dual operation: Running both platforms during transition period

- Training: Getting teams proficient on the new platform

- Refactoring: Adjusting downstream applications and BI tools

Organizations frequently underestimate these migration costs by 30-50%, particularly the hidden costs of staff time and business disruption.
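The 30-50% figure can be turned into a planning range. This sketch reads "underestimate by 30-50%" as meaning the initial estimate sits 30-50% below the eventual actual cost. That reading is an assumption; if the intended meaning is that actual cost exceeds the estimate by 30-50%, multiply by 1.3 to 1.5 instead.

```python
def adjusted_budget(initial_estimate, low=0.30, high=0.50):
    """Return the (low, high) actual cost implied by an estimate that
    falls short of the real figure by `low` to `high` (as fractions).
    """
    if not 0 <= low <= high < 1:
        raise ValueError("expected 0 <= low <= high < 1")
    return initial_estimate / (1 - low), initial_estimate / (1 - high)
```

Under this reading, an initial estimate of $100,000 implies an actual cost of roughly $143,000 to $200,000.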

8.1.3. Performance impact expectations

Performance changes after migration vary by workload:

- Data loading: Typically comparable or improved with proper configuration

- Standard analytics: Usually similar performance after optimization

- Complex analytics: Often improved, especially for algorithmic processing

- Data science: Substantially improved for Python/R workloads

- Concurrency: May require more configuration to match Snowflake’s simplicity

The performance transition isn’t uniform—some workloads improve immediately while others may require tuning to match previous performance levels.

8.1.4. Common challenges

The most frequent obstacles in Snowflake-to-Databricks migrations include:

- SQL dialect differences: Subtle variations in function behavior and SQL syntax

- UDF conversion: Translating user-defined functions to Databricks equivalents

- Governance recreation: Rebuilding security models and access controls

- Tool connectivity: Reconfiguring BI and ETL tool connections

- Performance tuning: Adjusting to different optimization approaches

- Team resistance: Overcoming familiarity bias with existing platform

Successful migrations proactively address these challenges through comprehensive planning and realistic timelines.

8.2. From Databricks to Snowflake

Conversely, organizations that migrate from Databricks to Snowflake are typically seeking simplified operations, enhanced SQL analytics, or stronger governance capabilities.

8.2.1. Migration strategy and approach

The migration process generally includes:

- Capability assessment: Identify which Databricks features are used and their Snowflake equivalents

- Schema planning: Design Snowflake database, schema, and table structures

- Data migration: Extract from Delta Lake to Snowflake, preserving table structures

- Transformation logic: Convert Spark code to Snowflake SQL/Snowpark

- Procedural logic: Translate notebooks and workflows to Snowflake tasks and procedures

- Security mapping: Recreate access controls and data protection measures

- Performance validation: Test and optimize for expected workloads

- Operational transition: Shift monitoring and administration procedures

The complexity varies significantly based on how extensively the organization uses Databricks’ programmatic capabilities beyond SQL.

8.2.2. Cost considerations

Key financial factors include:

- Credit capacity planning: Determining appropriate Snowflake capacity commitments

- Edition selection: Choosing the right Snowflake edition for required features

- Data transfer: Moving datasets to Snowflake’s storage format

- Conversion effort: Transforming Spark/Python/Scala code to Snowflake equivalents

- Skills development: Training teams on Snowflake administration and optimization

- Transition period: Operating both platforms during cutover

Organizations with significant investments in custom Spark code typically face higher migration costs than those using primarily SQL functionality.

8.2.3. Performance expectations

Performance shifts in Databricks-to-Snowflake migrations typically follow these patterns:

- SQL analytics: Often improved, especially for concurrent query workloads

- Data science workflows: May require more effort to achieve equivalent performance

- Complex transformations: Sometimes need redesign to maintain performance

- Real-time processing: Might require architectural adjustments

- Query latency: Usually improved for interactive analytics

Performance transitions are rarely uniform across all workloads, requiring careful benchmarking during migration planning.

8.2.4. Typical obstacles

Common challenges include:

- Scala/Python dependency: Heavy reliance on programmatic capabilities requiring redesign

- ML workflow integration: Recreating machine learning pipelines in Snowflake’s environment

- Custom optimization loss: Forfeiting hand-tuned Spark optimizations

- Schema complexity: Handling nested structures and schema evolution

- Streaming integration: Adapting real-time pipelines to Snowflake’s model

- Resource governance: Adjusting to different resource allocation paradigms

Organizations most successful in these migrations take a phased approach rather than attempting a “big bang” cutover.
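For the schema-complexity obstacle, one common option is to flatten nested records into plain columns before loading. Snowflake can also hold semi-structured data in VARIANT columns, so flattening is a choice rather than a requirement. The sketch below is a generic illustration with a hypothetical `flatten` helper and a made-up record, not a prescribed migration step.

```python
def flatten(record: dict, prefix: str = "", sep: str = "_") -> dict:
    """Flatten nested dicts into a single level, joining keys with sep.

    Lists are left untouched; they would still need an ARRAY or VARIANT
    column, or a separate child table.
    """
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


# Hypothetical nested record, as it might come out of a Delta table.
row = {
    "id": 1,
    "customer": {"name": "Ada", "address": {"city": "Lyon"}},
    "tags": ["new", "priority"],
}
print(flatten(row))
```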

8.3. Hybrid Approach Considerations

Rather than complete migration, many organizations opt for a hybrid approach, leveraging each platform for its strengths.

8.3.1. Complementary use cases

Common hybrid patterns include:

- Using Snowflake for enterprise data warehousing and governed analytics

- Leveraging Databricks for advanced analytics, ML, and data science

- Implementing bidirectional data flows between platforms

- Maintaining consistent metadata and governance across environments

This approach minimizes migration disruption while maximizing each platform’s value proposition.

8.3.2. Integration architecture

Effective hybrid architectures typically feature:

- Streamlined data movement between platforms

- Unified metadata management

- Consistent security models

- Clear workload routing guidance

- Optimization for cost and performance across platforms

The added complexity of managing two platforms is often offset by better workload-to-platform alignment.
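Workload routing guidance works best when it is written down as explicit rules rather than left to tribal knowledge. Here is a minimal sketch, assuming a hypothetical set of workload categories that mirror the complementary use cases described earlier. The category names and the `needs_review` fallback are my own illustrative choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str  # e.g. "bi", "reporting", "ml_training", "streaming"


# Hypothetical routing table; adjust categories to your own taxonomy.
ROUTES = {
    "bi": "snowflake",
    "reporting": "snowflake",
    "governed_analytics": "snowflake",
    "ml_training": "databricks",
    "data_science": "databricks",
    "advanced_analytics": "databricks",
}


def route(workload: Workload, default: str = "needs_review") -> str:
    """Return the target platform, or a review flag for unknown kinds."""
    return ROUTES.get(workload.kind, default)


for w in (Workload("weekly_sales_dashboard", "bi"),
          Workload("churn_model_training", "ml_training"),
          Workload("ad_hoc_graph_analysis", "graph")):
    print(f"{w.name} -> {route(w)}")
```

Sending unknown workload types to review, instead of defaulting to one platform, keeps the routing table honest as new use cases appear.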

8.3.3. Cost optimization in hybrid environments

Optimizing costs across platforms requires:

- Careful workload placement decisions

- Avoidance of redundant storage where possible

- Minimizing cross-platform data transfer

- Right-sizing resources on each platform

- Clear cost allocation and chargeback models

While a hybrid approach may increase total technology spend, it often delivers superior return on investment through better workload-to-platform alignment.
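A chargeback model can start as a simple proportional split of each platform's bill by measured usage. The sketch below assumes usage is tracked per team in some common unit (credits, DBUs, or compute hours). The team names and figures are hypothetical.

```python
def chargeback(total_cost: float, usage_by_team: dict[str, float]) -> dict[str, float]:
    """Split a platform bill across teams in proportion to their usage."""
    total_usage = sum(usage_by_team.values())
    if total_usage <= 0:
        raise ValueError("total usage must be positive")
    return {
        team: round(total_cost * usage / total_usage, 2)
        for team, usage in usage_by_team.items()
    }


# Hypothetical monthly bill and usage units, for illustration only.
bill = chargeback(10_000.0, {"finance": 600, "marketing": 300, "research": 100})
print(bill)
```

Running the same split separately for each platform keeps the allocation transparent and shows which teams are driving cross-platform spend.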

Migration decisions should ultimately be driven by business needs rather than technology preferences alone. I’ve seen organizations successfully implement all three approaches—migration to Databricks, migration to Snowflake, and hybrid architecture—with the key differentiator being how well they aligned their choice with their specific data strategy, team capabilities, and business requirements.

9. Real-World Customer Examples

While theoretical comparisons are valuable, examining how organizations actually run these platforms in production provides crucial insights. In this section, I draw on verified case studies and independent analyst reports to present real-world examples that illustrate typical implementation patterns, challenges, and outcomes for both platforms.

9.1. Analytics-focused organization case study

Industry: Retail/E-commerce

Data Volume: ~20TB

Primary Use Case: Business intelligence and customer analytics

Implementation and Challenges

A mid-sized retailer implemented Snowflake as their primary analytics platform after struggling with an on-premises data warehouse that couldn’t scale to meet holiday season demands.

Their implementation journey included:

- Migrating from an on-premises data warehouse to Snowflake

- Creating a centralized data model for reporting consistency

- Implementing advanced security for compliance requirements

- Developing self-service analytics capabilities

Key challenges they encountered:

- Initial credit consumption exceeded forecasts by ~40%

- Dashboard performance issues during peak concurrency

- Integration complexity with legacy systems

- Cost allocation across business units

Results and Learnings

After optimization, the organization achieved:

- 40% reduction in query duration for stable workloads

- Significant reduction in data pipeline failures

- Successful handling of higher query volumes during peak season

- Self-service capabilities for business users

Critical success factors included:

- Implementing virtual warehouse specialization by workload type

- Developing a robust cost governance model

- Creating a center of excellence for data modeling

- Adopting a phased migration approach

This case illustrates how organizations with primarily SQL-based analytics workloads can achieve substantial benefits with Snowflake, particularly when concurrency and reliability are priorities.

9.2. ML/AI-focused organization case study

Industry: Healthcare Technology

Data Volume: ~15TB structured, ~50TB unstructured

Primary Use Case: Patient outcome predictions and clinical decision support

Implementation and Challenges

A healthcare technology company leveraged Databricks to build ML models that predict patient outcomes using both structured EHR data and unstructured clinical notes.

Their implementation included:

- Creating a unified data lake to integrate structured and unstructured data

- Developing natural language processing pipelines for clinical text

- Building distributed training for complex prediction models

- Implementing MLOps practices for model governance

Significant challenges included:

- Complex security requirements for PHI/HIPAA compliance

- Integration with clinical systems with strict latency requirements

- Model explainability for clinical stakeholders

- Cost variability across development and production

Results and Learnings

The optimized implementation delivered:

- 600x improvement in query performance, reducing times from 30 minutes to 3 seconds

- Mean squared error of 6.8% for predictive healthcare models

- Successful deployment of models to multiple healthcare systems

- Significant estimated annual savings from improved outcomes

Key success factors included:

- Adopting cluster policies and automated pool management

- Implementing comprehensive monitoring for both performance and costs

- Developing specialized Delta Lake optimization strategies

- Creating custom MLflow integrations for clinical workflows

This example demonstrates Databricks’ strengths in complex ML workflows, particularly when unstructured data processing and model development are central requirements.

9.3. Hybrid use case study

Industry: Financial Services

Data Volume: ~80TB total

Primary Use Case: Regulatory reporting and algorithmic trading research

Implementation and Challenges

A financial services firm implemented both platforms in a complementary architecture:

- Snowflake for enterprise data warehouse and regulatory reporting

- Databricks for quantitative research and trading algorithm development

- Bidirectional data flows between platforms

- Unified security and governance layer

Their implementation challenges included:

- Maintaining data consistency across platforms

- Optimizing cross-platform data movement

- Managing distinct skill requirements for each platform

- Implementing consistent governance across environments

Results and Learnings

The hybrid approach yielded:

- 612% ROI over three years with Snowflake, as verified by a Forrester TEI study

- Substantial improvement in algorithm development cycle with Databricks

- Combined net benefits exceeding $21.5 million over three years

- Successful audit compliance across all systems

Critical success factors included:

- Implementing clear workload routing guidelines

- Developing automated synchronization between platforms

- Creating a unified metadata management strategy

- Building platform-specific centers of excellence while maintaining knowledge sharing

This case illustrates that the choice between platforms isn’t always binary—many sophisticated organizations achieve optimal results by leveraging both platforms strategically.

9.4. Implementation ROI and Metrics

Organizations implementing these platforms have documented clear ROI figures through independent analysis:

Snowflake Implementations

- Financial Services: 612% ROI over three years with total benefits worth over $21.5 million

- Organizations using AWS Marketplace: 405% ROI over three years

- Data Engineering Teams: 616% ROI and $10.7 million in net benefits over three years
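Headline ROI percentages are easier to interpret once you back out the implied costs. The sketch below assumes the usual definition, ROI = (total benefits − costs) / costs, and treats the "over" figures as approximate point values. The function names are hypothetical and the outputs are rough implied values in millions of dollars, not numbers taken from the studies themselves.

```python
def costs_from_net(roi_pct: float, net_benefits: float) -> float:
    """Implied costs when ROI and net benefits (benefits minus costs) are known."""
    return net_benefits / (roi_pct / 100)


def costs_from_total(roi_pct: float, total_benefits: float) -> float:
    """Implied costs when ROI and total (gross) benefits are known."""
    return total_benefits / (1 + roi_pct / 100)


# Data engineering figure: 616% ROI and $10.7M net benefits.
de_costs = costs_from_net(616, 10.7)
de_total = de_costs + 10.7

# Financial services figure: 612% ROI and $21.5M total benefits.
fs_costs = costs_from_total(612, 21.5)
fs_net = 21.5 - fs_costs

print(f"data engineering: costs ~${de_costs:.2f}M, total benefits ~${de_total:.2f}M")
print(f"financial services: costs ~${fs_costs:.2f}M, net benefits ~${fs_net:.2f}M")
```

The distinction between total and net benefits matters here: at ROI levels above 600%, the two differ by the full implementation cost, which is several million dollars in the financial services figure.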

Databricks Implementations

- Healthcare: 600x query performance improvements for complex genomic analysis

- Marketing Teams: Over 40 hours per month in engineering time saved

- Enterprise: Adoption by over 40% of Fortune 500 companies and by 7,000+ organizations worldwide

9.5. Implementation Timelines

Based on verified case studies, typical implementation timelines are:

Snowflake:

- Small organizations: 4-8 weeks

- Mid-size organizations: 2-3 months

- Enterprise organizations: 3-5 months

Databricks:

- Small organizations: 6-10 weeks

- Mid-size organizations: 3-4 months

- Enterprise organizations: 4-8 months

9.6. Success Patterns

Organizations that report the highest satisfaction with their platform choices typically:

- Align platform selection with their predominant workload types

- Build internal centers of excellence for platform expertise

- Implement comprehensive cost monitoring and optimization

- Take a phased approach to implementation

- Maintain realistic expectations about implementation complexity

The most important insight from these customer experiences is that implementation quality ultimately matters more than platform selection. Well-implemented Snowflake and Databricks environments both deliver excellent results when properly aligned with organizational needs and capabilities.

These case studies demonstrate that success with either platform is achievable—but requires thoughtful planning, realistic expectations, and ongoing optimization. The next section provides a decision framework to help you determine which approach best fits your specific circumstances.

10. Decision Framework

After examining architecture, costs, performance, ecosystem integration, and real-world implementations, the critical question remains: how do you determine which platform is right for your specific organization? Drawing on my experience guiding platform selection processes across diverse industries, I’ve developed a structured decision framework to help navigate this complex choice.

10.1. Use-case based decision tree

The starting point for any platform selection should be a clear-eyed assessment of your primary workload requirements. While both platforms have converged significantly, they still demonstrate distinct advantages for different use cases.

Snowflake typically proves advantageous when:

- SQL-based analytics represent 70%+ of workloads

- Business intelligence and reporting are primary use cases

- High concurrency for diverse business users is essential

- Cross-organization data sharing is a core requirement

- Administrative simplicity is highly valued

- Team skills are primarily SQL-focused

Databricks typically offers advantages when:

- Machine learning and data science are central workloads

- Complex data processing beyond SQL is common

- Your team has strong Python/Scala capabilities

- Unified processing of batch and streaming data is required

- Deep integration with open-source ecosystems is valued

- Advanced ML operations and experimentation are needed

Many organizations fall somewhere between these profiles, which explains the growing popularity of hybrid approaches.
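The two profiles above can be turned into a rough first-pass scoring exercise. This is a deliberately simple sketch: the equal weighting of criteria and the two-point margin are my own assumptions, only a subset of the listed criteria is modeled, and the result should start a conversation rather than settle it.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    sql_share: float          # fraction of workloads that are SQL analytics (0.0-1.0)
    bi_primary: bool          # BI and reporting are primary use cases
    high_concurrency: bool    # many concurrent business users
    data_sharing: bool        # cross-organization sharing is a core need
    ml_central: bool          # ML and data science are central workloads
    non_sql_processing: bool  # complex processing beyond SQL is common
    python_scala_team: bool   # strong Python/Scala capabilities
    streaming_required: bool  # unified batch and streaming is required


def recommend(p: Profile, margin: int = 2) -> str:
    """Count matched criteria per platform; require a clear margin to pick one."""
    snowflake = sum([p.sql_share >= 0.70, p.bi_primary,
                     p.high_concurrency, p.data_sharing])
    databricks = sum([p.ml_central, p.non_sql_processing,
                      p.python_scala_team, p.streaming_required])
    if snowflake - databricks >= margin:
        return "snowflake"
    if databricks - snowflake >= margin:
        return "databricks"
    return "hybrid or further evaluation"


# Hypothetical organization: SQL-heavy, BI-driven, many concurrent users.
print(recommend(Profile(0.8, True, True, False, False, False, False, False)))
```

Requiring a margin before recommending a single platform reflects the point that many organizations sit between the two profiles.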

10.2. Cost sensitivity considerations