- Snowflake Storage 101: Understanding Storage Unit Rates

- Hidden Drivers: Time-Travel & Fail-Safe Storage

- Strategy #1: Squeeze Every Byte with Compression & Smart Partitioning

- Strategy #2: Lifecycle Management & Object Tagging

- Strategy #3: Cut Bills by Leveraging AWS S3 Storage Cost with External Tables

- Strategy #4: Zero-Copy Cloning & Data Sharing

- Strategy #5: Monitor, Alert & Iterate

- Future Outlook: Operationalising Iceberg & Tiered “Platinum / Gold / Bronze” Storage

- Conclusion & Next Steps

Snowflake bills you for storage every single day—whether you query that data or not. One thing I have seen a lot is that because storage feels “cheap” compared to compute, it’s easy for micro-partitions to balloon quietly until the bill stings. In this post, I’ll walk through the mechanics of Snowflake storage unit rates, expose the hidden culprits (hi, Time Travel), and show you a few high-impact tactics—compression & smart partitioning—that can shrink your footprint before month-end close.

Snowflake Storage 101: Understanding Storage Unit Rates

At its simplest, Snowflake charges a flat storage unit rate—currently $23 per terabyte per month in AWS us-east-1 for on-demand accounts . A few nuances to keep in mind:

Capacity vs. On-Demand contracts

If you make an upfront commitment, the rates can drop by 10–30% depending on the size of your commitment (and your negotiation skills of course!).

What counts as storage?

Compressed table data, external-stage files left hanging around, Time Travel copies, and Fail-safe snapshots all accrue at the same $/TB.

Daily averaging

Snowflake snapshots storage once a day and averages the month—deleting data on the 30th won’t save you 29 days of charges.

Need the full breakdown of every line item? My previous 2025 Snowflake pricing guide walks through cost classes and regional uplifts.

Hidden Drivers: Time-Travel & Fail-Safe Storage

Snowflake’s point-in-time restore features are fantastic for “oops” moments—but they silently duplicate micro-partitions:

| Feature | Default Retention | Typical Storage Overhead |

| Time Travel | 1 day (can raise to 90) | ~3–5 % per extra day |

| Fail-safe | Fixed 7 days | ~7–10 % of table size |

According to the Snowflake architecture diagram, transient or temporary tables escape these fees though.

You can build your own dashboard in your BI tool to watch Time Travel’s share of the bill. You can also use the Storage Health functionality of Keebo’s Workload Intelligence to surface the exact dollar impact automatically and flag tables whose retention windows have crept up. See screenshot below:

Quick win: set unused dev schemas to a 1-day Time Travel retention, then run

ALTER DATABASE … SET DATA_RETENTION_TIME_IN_DAYS = 1;.

Next, I am going to share 5 effective strategies for reducing your overall storage cost with Snowflake. Depending on your business requirements, some may not be feasible for you but my hope is that you will find at least a couple that you can benefit from.

Strategy #1: Squeeze Every Byte with Compression & Smart Partitioning

Snowflake’s columnar engine already does a lot of the work for you—typical compression runs 3–4× smaller than raw data . You can push that even further with a few habits:

Use the right data types

Replace padded VARCHAR(1000) columns with trimmed VARCHAR or numeric types. A lot of the time, data teams do not want to carefully think through the optimal data type for every column when they design their database, but that’s an effort worth putting in. Not only does it reduce your storage footprint, it can also drastically improve your query performance (e.g., using INTversus VARCHAR).

Flatten JSON into VARIANT

Within the Snowflake architecture diagram the VARIANT type stores semi-structured data (JSON, Avro, Parquet) in a binary-columnar format instead of plain text:

- Parsing & typing once

When you run COPY INTO table … from a JSON stage, Snowflake tokenizes each record, converts leaf values to native types (NUMBER, STRING, BOOLEAN), and stores them in mini sub-columns. - Columnar compression kicks in

Because every sub-column now holds uniform, highly repetitive data, Snowflake’s dictionary + LZ4 compression achieves 3–5× size reduction compared with raw JSON bytes. - Query performance improves

Dot-notation paths (select data:user_id::variant …) can filter on individual attributes without scanning the entire JSON blob.

Quick demo

— Landing raw events

CREATE OR REPLACE TABLE raw_events (data VARIANT);

COPY INTO raw_events

FROM @my_stage/events/ FILE_FORMAT = (TYPE = ‘JSON’);

— Compressed size check

SELECT SYSTEM$ESTIMATE_TABLE_SIZE(‘RAW_EVENTS’);

Typical results on click-stream data: 120 GB raw → ~30 GB in VARIANT.

For maximum savings, avoid storing the same JSON in a VARCHAR column—let Snowflake do the flattening for you.

Terabyte to gigabyte conversion refresher: Snowflake (like AWS) uses binary math—1 TB = 1024 GB.

Let micro-partition pruning work

Snowflake automatically groups data into micro-partitions (50–500 MB uncompressed). During a query it scans only the partitions whose metadata range matches your predicates—this is called partition pruning.

Why “less than ~20 %” is the magic number

- If a filter touches >80 % of partitions, clustering won’t help; the engine must scan almost everything anyway.

- If a filter touches ≤20 %, you’re already getting near-optimal I/O elimination, so extra clustering may waste compute and inflate storage.

How to measure

— For a representative query

EXPLAIN USING ‘TEXT’

SELECT * FROM sales WHERE order_dt >= ‘2025-01-01’;

— Look for “partitions scanned” vs “partitions total”

For a broader view:

SELECT *

FROM TABLE( SYSTEM$CLUSTERING_INFORMATION(

‘SALES’, ‘(ORDER_DT, CUSTOMER_ID)’) );

If the CLUSTERING_DEPTH metric is under 1.5 and PERCENT_PARTITION_SELECTED stays below 20 %, skip ALTER TABLE … CLUSTER BY; let natural ordering do the job.

Archive wide history tables

Fact tables with years of history may be 98 % read-only—yet you still pay hot-storage rates plus Time-Travel and Fail-safe overhead. Shift aged partitions to cheap object storage:

- Copy old slices to Parquet in S3

COPY INTO @archive_stage/sales/<=2023/

FROM (SELECT * FROM sales WHERE order_dt < ‘2024-01-01’)

FILE_FORMAT = (TYPE = PARQUET COMPRESSION = SNAPPY);

- Create an external Iceberg/Parquet table

CREATE OR REPLACE EXTERNAL TABLE sales_archive

WITH LOCATION = ‘@archive_stage/sales/<=2023/’

FILE_FORMAT = (TYPE = PARQUET);

- Drop or truncate the hot copy

DELETE FROM sales WHERE order_dt < ‘2024-01-01’;

- Union-all in views so dashboards stay unchanged

CREATE OR REPLACE VIEW sales_all AS

SELECT * FROM sales

UNION ALL

SELECT * FROM sales_archive;

Result: cold partitions now cost ≤ $1/TB-mo (Glacier Deep Archive) instead of $23, yet analysts still query a single logical table. Amazon S3 Glacier Deep Archive is AWS’s lowest-cost object-storage class, designed for data you hardly ever need to read but must keep for years. (Terabyte to gigabyte conversion refresher: Remember that 1 TB equals 1024 GB when you forecast archive size).

Strategy #2: Lifecycle Management & Object Tagging

Snowflake never deletes a byte unless you ask it to. A simple tag-and-lifecycle loop prevents “forgotten” objects from bloating storage forever.

Why lifecycle rules matter

- Temp upload files, one-off staging schemas, and abandoned sandboxes linger at full price.

- Time-Travel and Fail-safe snapshots compound the waste if those objects stick around.

Tag data tiers up front

— Tag prod objects as “hot” (30-day retention)

ALTER TABLE finance.transactions

ADD TAG data_tier = ‘hot’;

— Tag staging schema as “cold” (7-day retention)

ALTER SCHEMA staging

SET TAG data_tier = ‘cold’;

Tags propagate to clones, so governance follows the data wherever it goes.

Automate drops & alerts

- Create a Nightly task like the following one:

CREATE TASK drop_cold_objects

WAREHOUSE = admin_wh

SCHEDULE = ‘USING CRON 0 3 * * * America/Detroit’

AS

CALL admin.drop_cold_objects();

- The stored procedure here checks whether data_tier = ‘cold’ and age > 7 days, then issues DROP.

- Alert when cold tier creeps up. Use a simple daily check against TABLE_STORAGE_METRICS to spot growth in data tagged cold. If the total exceeds your safe threshold (say > 5 TB or grows > 10 % week-over-week), send a Slack or email alert.

— Measure today’s cold-tier footprint

WITH today AS (

SELECT

SUM(active_bytes + time_travel_bytes + failsafe_bytes) AS bytes_cold

FROM SNOWFLAKE.ACCOUNT_USAGE.TABLE_STORAGE_METRICS

WHERE tag_value = ‘cold’

AND date = CURRENT_DATE() – 1 — yesterday’s snapshot

),

— Same metric seven days ago

week_ago AS (

SELECT

SUM(active_bytes + time_travel_bytes + failsafe_bytes) AS bytes_cold

FROM SNOWFLAKE.ACCOUNT_USAGE.TABLE_STORAGE_METRICS

WHERE tag_value = ‘cold’

AND date = CURRENT_DATE() – 8

)

SELECT

ROUND(today.bytes_cold / POWER(1024, 4), 2) AS tb_cold_today,

ROUND(week_ago.bytes_cold / POWER(1024, 4), 2) AS tb_cold_week_ago,

ROUND(

(today.bytes_cold – week_ago.bytes_cold)

/ NULLIF(week_ago.bytes_cold, 0)

* 100, 1) AS pct_change

FROM today, week_ago;

If pct_change > 10 or tb_cold_today > 5, raise an alert:

— Example: notify via email

CALL system$send_email(

‘storage_alerts’,

‘Cold-tier storage exceeded threshold’,

‘Cold data grew more than 10 % this week—review lifecycle rules.’);

Drop this query into a scheduled task and you’ll know the moment your cold data footprint starts to drift. The Data Health feature of Keebo’s Workload Intelligence view goes further by flagging tables that have been written to but not read in 90 days—prime candidates for archival or deletion as well tables that have not been written to or read from. Filter by schema to spot forgotten sandboxes, then drop or offload those tables to cut both compute and storage waste. See a screenshot from Keebo’s Data Health feature below:

Strategy #3: Cut Bills by Leveraging AWS S3 Storage Cost with External Tables

Snowflake compressed storage runs ≈ $23/TB-month in AWS us-east-1, so understanding **AWS S3 storage cost** tiers is critical before you off-load. Offloading archival partitions to S3 lets you downgrade costs in tiers:

| AWS tier | Typical use-case | Cost ($/GB-mo)* |

| S3 Standard | hot/active | 0.023 |

| S3 Intelligent-Tiering | auto hot/cold | 0.013 → 0.023 |

| S3 Glacier Deep Archive | compliance copies | 0.00099 |

*This is based on the published AWS price, June 2025.

Offload workflow

- Export to Parquet

COPY INTO @cold_stage/2025_q1/

FROM analytics.clickstream

FILE_FORMAT = (TYPE = PARQUET COMPRESSION = SNAPPY);

- Create an external table

CREATE OR REPLACE EXTERNAL TABLE clickstream_ext

WITH LOCATION = ‘@cold_stage/2025_q1/’

FILE_FORMAT = (TYPE = PARQUET);

- Add S3 lifecycle rules

- 0–30 days → Standard

- 30 days → Intelligent-Tiering

- 90 days → Glacier Deep Archive (this is the cheapest tier)

Queries can UNION native and external tables transparently, so analysts don’t need to know where the bytes live.

Keep queries predictable

- Partition Parquet files by date (dt=YYYY-MM-DD/part-00001.parquet) so pruning still works.

- Prefetch large scans with COPY INTO temp if latency matters.

- Track S3 request fees—cheap storage can turn pricey if you scan terabytes daily.

With lifecycle tagging guarding hot data (Strategy #2) and S3 offloading sheltering the coldest bytes, you’ll cut storage spend without sacrificing recoverability—or rewriting a single dashboard.

Strategy #4: Zero-Copy Cloning & Data Sharing

Snowflake’s zero-copy clone feature lets you fork a complete database, schema, or table in seconds—without copying a single byte. The new object merely references the source micro-partitions; storage is consumed only when you write to the clone (copy-on-write).

Why clones can still explode

- Each DML against the clone materializes new micro-partitions, so an “inactive” dev sandbox can silently grow to terabytes if testers run UPDATE/DELETE statements.

- Nested clones amplify the problem—cloning a clone duplicates any already-materialized partitions.

Best practices for dev/test sandboxes

- Track clones with tags

ALTER DATABASE myapp_dev_clone

ADD TAG env = ‘dev’, ttl_days = 14;

- A scheduled task can drop anything where ttl_days is exceeded.

- Prefer transient clones for scratch work to avoid Fail-safe fees.

CREATE TRANSIENT DATABASE scratch_clone

CLONE prod_db AT (TIMESTAMP => TO_TIMESTAMP(‘2025-05-01 00:00:00’));

- Avoid DML on large fact tables inside clones—use CREATE TABLE … AS SELECT on a filtered subset instead.

- Drop early, drop often

DROP DATABASE IF EXISTS myapp_dev_clone;

Use Data Sharing instead of cloning

When another team (or an external partner) only needs read access, use Secure Data Sharing. Shares expose the same micro-partitions in place—no extra storage, no copy-on-write risk—while keeping access control at the object level.

Strategy #5: Monitor, Alert & Iterate

Storage optimization is not a one-and-done project; it’s a feedback loop (unfortunately, like most things in life!).

Build a lightweight cost-governance dashboard

- Query STORAGE_DAILY_HISTORY for total bytes, broken down by active, Time Travel, and Fail-safe.

- Add TOP 20 objects by storage delta week-over-week to spot sudden spikes.

- Publish a time-series chart of $ per TB to your BI tool or a simple Streamlit app.

Key metrics to track

| Metric | Why it matters | Alert threshold |

| Active storage Δ 7-day | Detects uncompressed dumps or rogue imports | > 10 % |

| Time Travel % of total | Reveals retention mis-configurations | > 25 % |

| Fail-safe % of total | Flags need for transient tables | > 15 % |

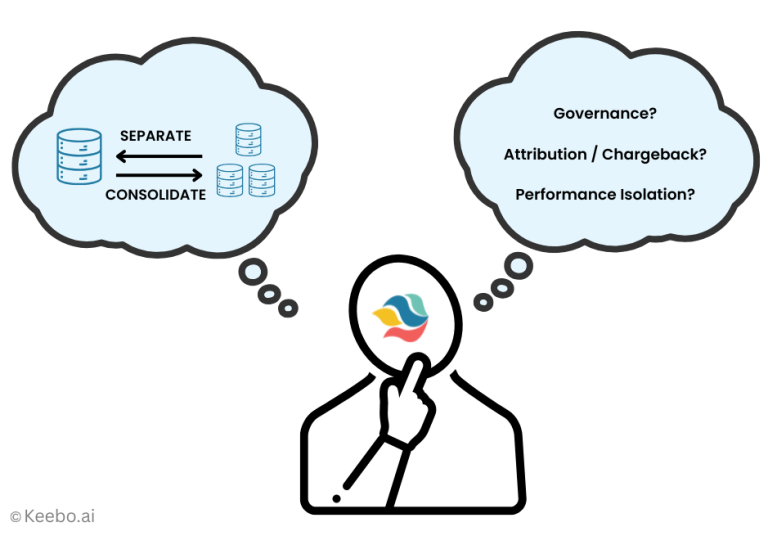

DIY Dashboards vs. Keebo Workload Intelligence

DIY dashboards work—but they require upkeep and domain expertise. My post on Lessons from Instacart and Snowflake outlines the trade-offs of buy versus build in detail, but below is a summary table.

| What you need | Keebo | Build Your Own |

|---|---|---|

| Time-to-Value | ✅ Instant visibility with pre-built, FinOps-ready dashboards | ❌ Hours to spin up a dashboard; months to harden end-to-end tooling |

| Accuracy of Attribution | ✅ Granular $ attribution by user, team, workload & query | ❌ Delayed, error-prone reports with limited context |

| Pro-active Cost Control | ✅ Real-time alerts + AI-prioritised fixes | ❌ Static thresholds; manual monitoring; delayed reaction |

| Engineering Overhead | ✅ Fully managed—zero maintenance | ❌ High ongoing cost & engineering distraction |

| Clarity on Fixes | ✅ AI shows top issues and how to resolve them | ❌ You spot the problem → now hunt for a fix yourself |

| Governance & Accountability | ✅ Built-in attribution drives ownership across teams | ❌ Hard to enforce; manual scaffolding needed |

If you’d rather skip maintenance, Keebo’s battle-tested Workload Intelligence surfaces storage anomalies, sends proactive alerts, and even recommends the right retention policy—all without writing a single line of SQL.

Pro tip: Set a weekly calendar reminder to review the dashboard—or let Workload Intelligence ping you in Slack when any metric crosses its threshold.

With continuous monitoring in place, every optimization from Strategies #1-4 stays locked in, and new issues surface before they balloon into next month’s bill.

Future Outlook: Operationalising Iceberg & Tiered “Platinum / Gold / Bronze” Storage

Snowflake’s May 2024 GA for Iceberg Tables decoupled where you store bytes from how you query them. The 2025 Summit upgrades—Catalog-Linked Databases (CLDB) and Dynamic Iceberg Tables—have pushed that decoupling even further.

Why this matters

- Cost leverage – Store 80-90 % of cold data in S3 (¢/GB) while still querying it through Snowflake’s engine.

- Vendor flexibility – A single Iceberg catalog can serve Snowflake, Databricks, Presto & Hive—no more copy-and-paste data lakes.

- Governance continuity – CLDB syncs object-storage ACLs and Iceberg metadata back into Snowflake RBAC, so security teams keep a single control plane.

What teams should do now

| Priority | Action | Pay-off |

|---|---|---|

| High | Pilot an Iceberg landing zone. Export a non-critical fact table ≥ 1 TB to Iceberg (Parquet) and create an external database via CLDB. | Validate query latency & cost; build muscle memory for lifecycle rules. |

| Medium | Update lifecycle policies. Route RAW_* tables → Gold after 30 days → Bronze (Iceberg + Glacier) after 90–180 days. | Automatic cost drops with no ETL. |

| Medium | Add Iceberg support to CI/CD. Extend db-change scripts so every new table has a heat tier tag (platinum/gold/bronze). | Keeps tiering consistent as new data lands. |

| Low (watchlist) | Preview Search Optimization & QAS on Iceberg. Private preview now; GA expected 2026. | Brings sub-second queries to Bronze data without re-ingesting. Disclaimer: I expect both Search Optimization & WAS to increase compute costs for most customers, but the latency improvements may justify that. |

Roadmap signals to watch

- Auto-tiering – Snowflake hinted at policies that move native tables to Iceberg once they age beyond an SLA. Storing only the hottest 5 – 10 % of bytes in **platinum storage** keeps premium spend under control.

- Cross-cloud catalogs – Polaris (open-source) aims to unify Iceberg metadata across AWS, Azure, GCP.

- Column-cache acceleration – Expect a vendored cache layer that keeps hottest Iceberg columns in SS-RAM for sub-second BI.

In short, the platinum / gold / bronze playbook is becoming real: keep only the fastest-needed 5-10 % in premium storage, warm the next 20 % in native Snowflake, and shove the rest into Iceberg on S3 plus Glacier Deep Archive. Start small now so you’re ready when auto-tiering goes GA.

Conclusion & Next Steps

Storage often feels cheap—until it quietly doubles your bill. Applying the five levers I covered in this guide can typically trim 20–40% of that spend:

- Right-size data types & compression

- Prune Time Travel and Fail-safe windows

- Enforce lifecycle tags & drops

- Offload archives to S3 / Iceberg

- Govern with continuous monitoring

Ready to pinpoint your biggest storage-savings wins? Spin up a free trial of Keebo Workload Intelligence—no commitment, no card required—connect Snowflake once, let the platform run its automated scan, and within 30 minutes you’ll have a prioritized, actionable savings plan, start your free Keebo trial →.

Happy optimizing!

FAQs for “Storage Cost Optimization for Snowflake — How to Reduce Your TB Spend”

1. What are Snowflake storage unit rates, and how are they calculated?

Snowflake charges storage based on the total compressed data stored, averaged daily across the month. In AWS us-east-1, on-demand rates are typically $23 per terabyte per month, with potential discounts for capacity-based commitments. Charges include table data, Time Travel, and Fail-safe storage.

2. Why do Snowflake storage costs increase unexpectedly?

Unexpected cost spikes often come from Time Travel retention, Fail-safe copies, unused staging files, or forgotten sandbox environments. These hidden storage drivers silently accumulate data over time, increasing your monthly bill without visible query activity.

3. How can compression and partitioning help reduce Snowflake storage costs?

Using the right data types, flattening JSON into VARIANT, and allowing micro-partition pruning can reduce storage up to 3–5×. Optimizing data design minimizes I/O, boosts query performance, and prevents unnecessary micro-partition growth.

4. What is Time Travel and Fail-safe storage in Snowflake?

Time Travel allows you to restore data from a past point (default 1 day, up to 90 days), while Fail-safe retains data for 7 additional days for disaster recovery. Both consume extra storage — roughly 3–10% additional space depending on retention windows.

5. How can lifecycle management reduce Snowflake storage waste?

Applying object tagging and automated lifecycle rules ensures temporary or cold data is dropped or archived on schedule. For example, tagging “cold” schemas for 7-day retention prevents forgotten sandboxes and staging tables from inflating storage bills.