On June 3rd, Snowflake used its Summit platform keynote (video) to unveil Adaptive Compute—a new warehouse type intended to eliminate manual sizing. Since then a steady flow of customers and community members has asked for my perspective. Rather than explain the feature one conversation at a time, I’m sharing my analysis here with everyone.

We currently have two concrete inputs and a set of open questions:

- Public facts: Snowflake’s keynote demo, the official announcement, and shared screenshots.

- Community observations: feedback from several customers with access to the private preview.

- Open questions: pricing and tuning details that Snowflake is expected to settle during the preview.

In this post I will explain what Adaptive Compute does, why it improves day‑to‑day operations, and why most organizations should not expect automatic cost savings in its first release.

1 What Is Adaptive Compute?

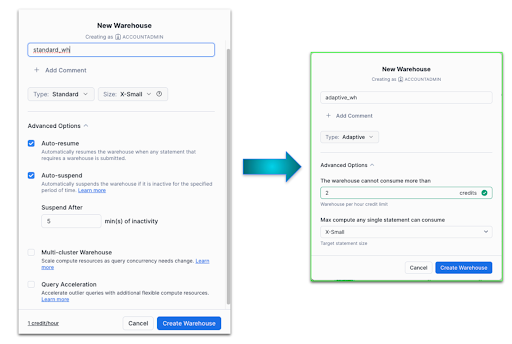

Traditional Snowflake warehouses force users to select a T‑shirt size (XS → 6XL) and, on Enterprise Edition and above, optional min/max cluster counts plus a scaling policy. Adaptive Compute collapses those choices:

- Submit a query.

- Snowflake routes it to an internal pool of warehouses, choosing a size suited to that query’s plan.

- Two limits remain: max credits per hour and max warehouse size per statement.

For full context see the keynote and announcement links above.

2 Why the User Experience Will Improve

Snowflake’s original sizing model forces administrators to translate business goals—“this report must finish in 30 seconds” or “keep our monthly spend under $X”—into low-level parameters such as warehouse size, auto-suspend timers, and multi-cluster ranges. Adaptive Compute abstracts those mechanics away. By letting the platform choose an appropriate warehouse on the fly, data teams can refocus on service-level objectives rather than infrastructure minutiae.

Seen through that lens, Adaptive Compute promises three concrete benefits:

- Operational simplicity – Engineering time shifts from capacity planning to delivering analytics; SLAs replace “T-shirt size” spreadsheets.

- Lower queue time – A platform-managed pool of warehouses reduces the chance a query waits for resources.

- Plan-aware sizing – Because the optimizer sees each query plan’s operator mix and row estimates, it can allocate just enough CPU and memory—something external tools can only approximate.

These improvements mirror what Keebo customers have experienced for several years through Keebo’s Smart Query Routing, which automatically directs each statement to the most cost‑effective warehouse while providing full visibility and override control. Snowflake’s native support is welcome because it requires no separate rule engine or bespoke orchestration scripts, but it appears to lack that visibility and the flexibility to tweak routing rules for budget‑versus‑performance trade‑offs.

3 What Will Happen to My Snowflake Spend?

3.1 Philosophical

Vendors seldom launch features that materially reduce revenue unless competitive pressure demands it. Snowflake’s consumption model remains strong; there is no incentive to cut bills by double‑digit percentages.

3.2 Anecdotal

The launch blog highlights Pfizer’s preview experience (case study). The word “cost” does not appear once in the case study. Had Pfizer achieved meaningful savings, that result would almost certainly headline the story. Reading between the lines, they likely saw no savings, and quite possibly an increase in costs.

3.3 Technical — Loss of Per‑Query Cost/Performance Control

Consider two companies running the identical query pattern:

| Customer | Business priority | Warehouse they would have chosen before Adaptive Compute |
|---|---|---|
| A | Strict latency SLA; willing to pay for speed | L (Large) |
| B | Cost‑conscious; happy if the query finishes in a few minutes | S (Small) |

Under Adaptive Compute, Snowflake, not the customer, decides the target size. Because both companies run the identical query pattern, the router sees the same plan and will pick the same size for both. To satisfy the customer that needs low latency (Customer A), that size will almost certainly be the larger warehouse. Customer B therefore loses the ability to trade speed for savings and pays the same premium rate as Customer A.

The core issue: a single optimizer must satisfy the most performance‑sensitive tenant. Everyone else inherits that decision—and its credit cost.
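To make the trade-off concrete, here is a small credit calculation for Customer B. It uses Snowflake’s hourly rates for standard (Gen 1) warehouses, where each size step doubles the rate, and per-second billing. The runtimes are hypothetical: we assume the query takes 4 minutes on S and, because it does not parallelize perfectly, only 2 minutes on L.

```python
# Hourly credit rates for standard (Gen 1) Snowflake warehouses:
# each step up the size ladder doubles the rate.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def query_credits(size: str, runtime_seconds: float) -> float:
    """Credits for one query running alone on a warehouse of `size`.

    Simplification: ignores the 60-second minimum billed on each resume
    and any idle time before auto-suspend kicks in.
    """
    return CREDITS_PER_HOUR[size] * runtime_seconds / 3600


# Hypothetical runtimes for the shared query pattern.
small = query_credits("S", 240)  # Customer B's own choice: 4 minutes on S
large = query_credits("L", 120)  # router's choice for both: 2 minutes on L

print(f"S: {small:.3f} credits, L: {large:.3f} credits, ratio {large / small:.1f}x")
```

Under these assumptions Customer B pays twice as much for a result it did not need faster. If the query scaled perfectly (4x faster on a warehouse with a 4x higher rate), the credits would be equal, so the penalty grows with how poorly a query parallelizes.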

What about Resource Monitors and the new per‑hour credit limit? They notify you after the credits are burned. Once a high‑priority burst depletes the hourly budget, remaining workload either stalls (if you configured SUSPEND) or keeps running at the higher rate (if you chose alert‑only). In practice, teams still need to run the workload—so they raise the limit and the overspend continues. Snowflake offers no middle ground such as “fallback to a cheaper size.”
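The toy simulation below compares the two behaviors described above with the missing middle ground. It is not Snowflake’s API: the hourly cap, the per-query credit costs, and the `fallback` policy (running over-budget queries on a cheaper size) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Query:
    name: str
    credits_large: float  # credits if run on the large size the router picked
    credits_small: float  # credits if run on a cheaper size (hypothetical)


def run_hour(queries: list[Query], cap: float, policy: str):
    """Simulate one hour under a credit cap.

    Returns (credits spent, names of queries that ran, names that stalled).
    policy:
      "suspend"  - stop starting queries once spend has reached the cap
      "notify"   - alert only; every query still runs at the large size
      "fallback" - hypothetical middle ground: past the cap, run on the cheaper size
    """
    spent, ran, stalled = 0.0, [], []
    for q in queries:
        # Like a resource monitor, the cap is checked against credits already burned,
        # so the query that crosses the cap still runs in full.
        if spent < cap or policy == "notify":
            spent += q.credits_large
            ran.append(q.name)
        elif policy == "fallback":
            spent += q.credits_small
            ran.append(q.name)
        else:  # "suspend": remaining work stalls
            stalled.append(q.name)
    return spent, ran, stalled


# Hypothetical hour: a high-priority burst followed by routine reports.
workload = [
    Query("burst1", 4, 2), Query("burst2", 4, 2), Query("burst3", 4, 2),
    Query("report1", 2, 1), Query("report2", 2, 1),
]

for policy in ("suspend", "notify", "fallback"):
    spent, ran, stalled = run_hour(workload, cap=10, policy=policy)
    print(f"{policy:>8}: {spent:4.1f} credits, {len(ran)} ran, {len(stalled)} stalled")
```

With a 10-credit cap, SUSPEND spends 12 credits and stalls both reports, alert-only runs all five queries for 16 credits, and the hypothetical fallback also finishes all five but for 14 credits.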

Net effect: many organizations that previously ran on smaller warehouses for non‑critical queries will see higher average warehouse sizes and, therefore, higher spend.

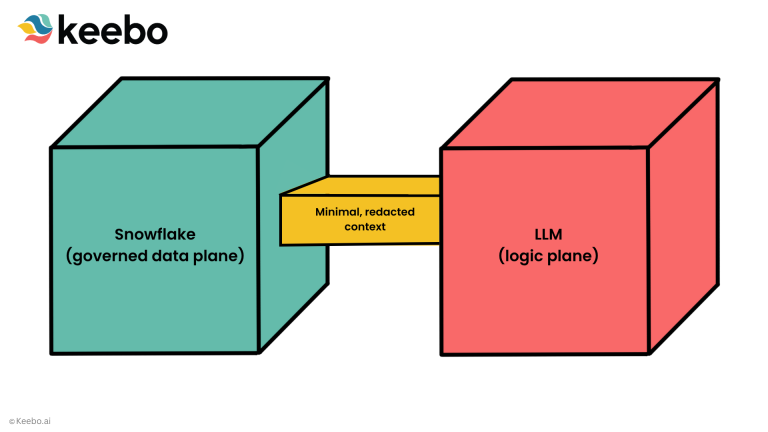

4 How Adaptive Compute Is Likely Implemented (Reverse‑Engineered)

Disclaimer: I have no access to Snowflake’s codebase, so the following is an educated guess, based on common design practice and feedback from early adopters, at how Snowflake’s architects likely implemented this functionality.

- Statement router – Every query passes through a router component that inspects plan metadata (cardinality estimates, operator types, compute profile, memory needs, etc.).

- Shared pool – The router selects from a tiered fleet of internal warehouses (e.g., XS, M, L, …).

- Execution – The chosen warehouse executes the statement; runtime metrics feed the router for future decisions. I am less sure about this part. I hope there is a learning component, but I would not be surprised if this initial version relies on static rules for simplicity.

For backward compatibility, standard warehouses are most likely reimplemented as router objects whose pool contains a single fixed warehouse size, so existing automation scripts keep working unchanged. Adaptive warehouses route to the full pool, enabling plan‑specific sizing. Either way, the implementation details matter less than the resulting functionality.
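The guessed architecture can be sketched as a router that maps plan estimates to a size tier and then picks from its pool. Everything here is hypothetical, including the `PlanEstimate` fields and the scan-size thresholds; it models the static-rules variant mentioned above, not Snowflake’s actual code.

```python
from dataclasses import dataclass

# Size ladder, smallest first (truncated at 2XL for brevity).
SIZE_LADDER = ["XS", "S", "M", "L", "XL", "2XL"]


def rank(size: str) -> int:
    return SIZE_LADDER.index(size)


@dataclass
class PlanEstimate:
    gb_scanned: float     # optimizer's estimate of data scanned, in GB
    has_large_join: bool  # e.g. a join with high cardinality estimates


@dataclass
class Router:
    pool: list[str]        # sizes this router may use
    max_size: str = "2XL"  # the per-statement size limit

    def preferred_size(self, plan: PlanEstimate) -> str:
        # Hypothetical static rules: scan volume picks a tier,
        # and a large join bumps it up one step.
        tiers = [(1, "XS"), (10, "S"), (50, "M"), (200, "L"), (1000, "XL")]
        size = next((s for limit, s in tiers if plan.gb_scanned <= limit), "2XL")
        if plan.has_large_join:
            size = SIZE_LADDER[min(rank(size) + 1, len(SIZE_LADDER) - 1)]
        return size

    def route(self, plan: PlanEstimate) -> str:
        # Clamp the preferred size to the per-statement limit.
        target = min(self.preferred_size(plan), self.max_size, key=rank)
        # Smallest pool size that fits the target, else the largest size available.
        fitting = [s for s in self.pool if rank(s) >= rank(target)]
        return min(fitting, key=rank) if fitting else max(self.pool, key=rank)


adaptive = Router(pool=SIZE_LADDER, max_size="L")  # full pool, capped at L
standard = Router(pool=["M"])                      # classic fixed-size warehouse

small_plan = PlanEstimate(gb_scanned=0.5, has_large_join=False)
big_plan = PlanEstimate(gb_scanned=300, has_large_join=True)
print(adaptive.route(small_plan), adaptive.route(big_plan))
print(standard.route(small_plan), standard.route(big_plan))
```

The adaptive router sends the small plan to XS and clamps the big plan (which would prefer 2XL) to L, while the single-size pool always returns M. That is how a standard warehouse could be expressed in the same router model.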

5 Open Questions Snowflake Still Needs to Resolve

Word on the street is that Snowflake will first open access to about 20 design-partner customers to test Adaptive Compute. I expect Snowflake to track two dimensions closely during this private preview:

- Revenue impact. Early workloads will reveal whether automatic routing cannibalizes, preserves, or slightly lifts net consumption. Snowflake’s goal will be to set price bands—or a small premium—that neutralize any revenue drop.

- Tuning the routing logic. Preview telemetry will show how often the router over- or under-sizes queries. Expect multiple iterations of cost/latency weights before GA.

In short, I suspect the preview will be less about feature completeness and more about finding a pricing model and an internal tuning curve that improve user experience without shrinking Snowflake’s top line.

6 Smart Query Routing: Centralized, Flexible Control You Can Use Today

Adaptive Compute removes knobs, but it still applies one opaque policy to every query.

Keebo’s Smart Query Routing (KQR) lets you keep the automation and decide—at rule-level granularity—how each workload trades cost for performance.

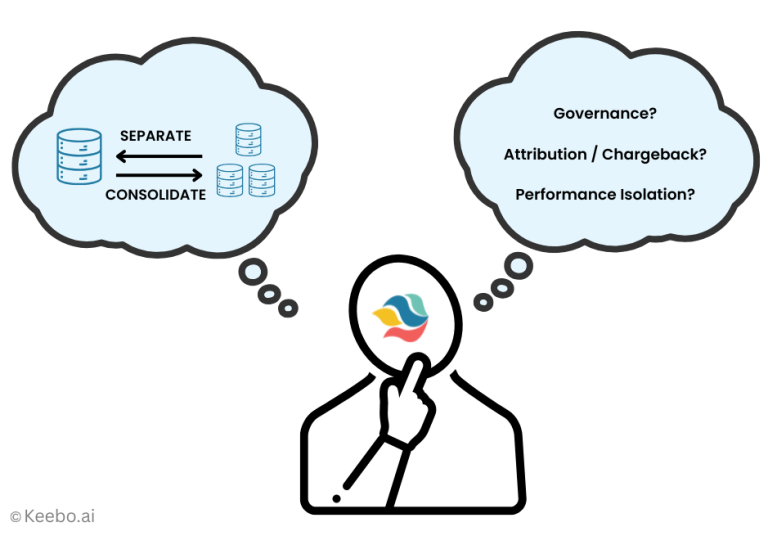

6.1 Centralized governance and why it matters

- Organization-wide policy, one click. Define rules such as

IF avg_gb_scanned > 25 THEN route_to XL

or

IF role = 'CEO_BI' THEN route_to PROD_XL.

Everyone inherits the policy automatically; you’re no longer relying on dozens of developers to “pick the right warehouse” by hand.

- Budget + SLA alignment. Ops can cap spend (“never use >M for ad-hoc BI”) while Product keeps strict SLAs for external dashboards.

- Instant visibility & edits. All routing logic lives in one console. Need to tighten a budget or loosen an SLA? Toggle a rule—not a code deploy.
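To show how first-match routing rules like these can be evaluated, here is a minimal Python sketch. It is not Keebo’s engine or API: the `Rule` type, the first-match semantics, the fallback to the query’s original warehouse, and the query attribute dictionary (whose keys mirror the names in the example rules) are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable

QueryAttrs = dict  # attributes of one query, e.g. role and avg_gb_scanned


@dataclass
class Rule:
    name: str
    condition: Callable[[QueryAttrs], bool]
    target: str  # warehouse to route to when the condition holds


# The two example policies above, encoded as predicates (hypothetical encoding).
RULES = [
    Rule("executive dashboards", lambda q: q.get("role") == "CEO_BI", "PROD_XL"),
    Rule("heavy scans", lambda q: q.get("avg_gb_scanned", 0) > 25, "XL"),
]


def route(query: QueryAttrs, rules: list[Rule]) -> str:
    """Return the target of the first matching rule, else the original warehouse."""
    for rule in rules:
        if rule.condition(query):
            return rule.target
    return query["original_warehouse"]


print(route({"role": "ANALYST", "avg_gb_scanned": 40, "original_warehouse": "BI_M"}, RULES))
print(route({"role": "CEO_BI", "avg_gb_scanned": 40, "original_warehouse": "BI_M"}, RULES))
print(route({"role": "ANALYST", "avg_gb_scanned": 2, "original_warehouse": "BI_M"}, RULES))
```

Rule order is a design choice here: placing the role rule first lets executive dashboards land on PROD_XL even when they also scan more than 25 GB. Queries that match no rule stay on their original warehouse.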

6.2 Flexibility

- Per-workload choice. Keep a mission-critical ETL job on its own fixed XL warehouse, while letting KQR steer your data science queries dynamically.

- Multi-environment support. Route some applications to Gen 2, others to Gen 1, or even to a different cloud region—without touching a single line of your application code.

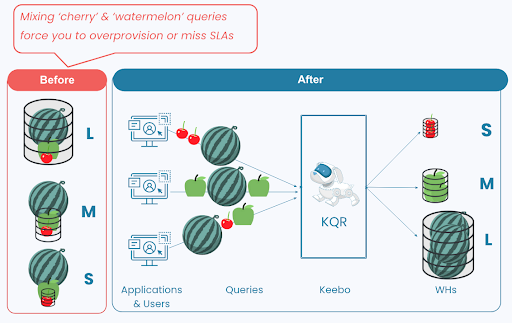

6.3 Solving the mixed-workload problem

Most warehouses run a mixed workload of cherries (tiny), apples (mid-size), and watermelons (huge):

- Running cherries on a “watermelon-class” warehouse is pure waste.

- One stray watermelon forces you to over-provision or risk missing your SLAs.

- Once a watermelon starts on an undersized warehouse, it’s too late: resizing the warehouse does not speed up a query that is already running.

KQR inspects every statement before execution and sends cherries to XS, apples to M, and watermelons to L/XL, so no query lands on the wrong warehouse. The result: lower cost and fewer SLA breaches, without touching application code.

6.4 Eliminate the need for over-provisioning

Because heavy queries are intercepted pre-execution, you no longer size warehouses for the worst-case spike. Over-provisioning becomes optional, not mandatory.

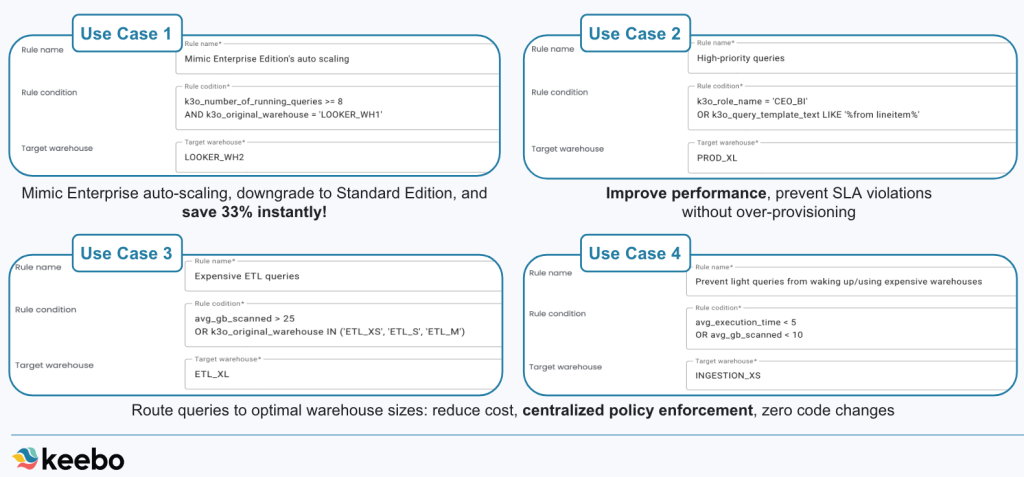

6.5 Common use cases for Smart Query Routing (Figure 4)

Below are common ways our customers use Keebo’s Smart Query Routing for selected workloads. Remember: you don’t have to send every workload through KQR; you choose which workloads go directly to Snowflake and which are routed through KQR.

Scenario 1: Mimic Enterprise auto-scaling on Standard Edition

Example rule: IF k3o_number_of_running_queries >= 8 AND k3o_original_warehouse = 'LOOKER_WH1' THEN route_to LOOKER_WH2

Business pay-off: Save 33 % instantly by downgrading from Snowflake’s Enterprise edition to Standard edition, whose credits are priced lower, without losing auto-scaling. KQR wakes up an extra warehouse only when needed and puts it back to sleep afterwards.
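Scenario 1’s rule depends on a live count of running queries per warehouse. Below is a minimal, single-threaded sketch of that bookkeeping. The `OverflowRouter` class and its `start`/`finish` hooks are hypothetical; only the warehouse names and the threshold of 8 come from the example rule, and a real system would read the running count from Snowflake rather than track it locally.

```python
from collections import Counter


class OverflowRouter:
    """Send new queries to a spill-over warehouse when the primary is busy.

    Mirrors the example rule: IF running queries on the primary >= threshold
    THEN route to the overflow warehouse. Hypothetical illustration only.
    """

    def __init__(self, primary: str, overflow: str, max_running: int = 8):
        self.primary = primary
        self.overflow = overflow
        self.max_running = max_running
        self.running = Counter()  # warehouse name -> queries currently running

    def start(self) -> str:
        """Pick a warehouse for a new query and record it as running."""
        busy = self.running[self.primary] >= self.max_running
        target = self.overflow if busy else self.primary
        self.running[target] += 1
        return target

    def finish(self, warehouse: str) -> None:
        """Record that a query on `warehouse` has completed."""
        self.running[warehouse] -= 1


router = OverflowRouter("LOOKER_WH1", "LOOKER_WH2")
placements = [router.start() for _ in range(10)]
print(placements.count("LOOKER_WH1"), placements.count("LOOKER_WH2"))
router.finish("LOOKER_WH1")
print(router.start())
```

Ten concurrent queries put eight on LOOKER_WH1 and two on LOOKER_WH2; once a slot frees up, new work returns to LOOKER_WH1. When LOOKER_WH2 stops receiving queries, its own auto-suspend setting puts it back to sleep.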

Scenario 2: Shield a customer-facing dashboard

Example rule: IF role = 'CEO_BI' OR k3o_query_template_text ILIKE '%from lineitem%' THEN route_to PROD_XL

Business pay-off: Guarantees sub-second latency for mission-critical workloads—meeting SLAs without blanket oversizing.

Scenario 3: Promote large ETL jobs only when they breach cost / runtime thresholds

Example rule: IF avg_gb_scanned > 25 AND k3o_original_warehouse IN ('ETL_XS','ETL_S','ETL_M') THEN route_to ETL_XL

Business pay-off: Heavy overnight loads get the horsepower they need only when they cross a defined scan threshold. Otherwise they stay on economical hardware—cutting ETL credits by 20-50%.

Scenario 4: Prevent lightweight queries from waking expensive production warehouses

Example rule: IF avg_execution_time < 5 AND avg_gb_scanned < 10 THEN route_to INGESTION_XS

Business pay-off: Stops “cherry” queries from waking up an XL warehouse, sharply reducing wasted compute.
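Much of the waste in Scenario 4 comes from Snowflake’s billing rule: each time a warehouse resumes, it is billed for at least 60 seconds, with per-second billing after that. The sketch below prices one hypothetical 3-second query that wakes a suspended warehouse, using the standard (Gen 1) hourly rates.

```python
# Hourly credit rates for standard (Gen 1) warehouses.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}
MIN_BILLED_SECONDS = 60  # minimum billed each time a warehouse resumes


def wake_up_cost(size: str, runtime_seconds: float) -> float:
    """Credits billed when a single query resumes a suspended warehouse.

    Ignores idle time before auto-suspend, which adds further cost.
    """
    billed_seconds = max(runtime_seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


xl = wake_up_cost("XL", 3)
xs = wake_up_cost("XS", 3)
print(f"XL: {xl:.4f} credits, XS: {xs:.4f} credits, {xl / xs:.0f}x more on XL")
```

Because both warehouses bill the same 60-second minimum, the cost gap for a tiny query equals the full 16x rate difference, before counting any idle time until auto-suspend.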

For a deeper look at Keebo’s multi‑layer cost optimization—including Autonomous Warehouse Optimization, Workload Intelligence, and Smart Query Routing—see our solution overview.

6.6 Case Study: How a Global SaaS Company Cut Snowflake Spend by 45 % Using Keebo Smart Query Routing

The Challenge

A global SaaS enterprise managing petabytes of analytics data in Snowflake struggled with cost volatility and unpredictable query performance. Different engineering teams sized their own warehouses manually—some over-provisioned for reliability, others under-sized and missed SLAs. The company needed a way to standardize warehouse governance, align performance to SLAs, and curb runaway compute spend—all without slowing developer velocity.

The Keebo Solution

The company deployed Keebo Smart Query Routing (KQR) across all production workloads. Keebo’s centralized policy engine automatically inspected every query before execution, routing heavy ETL and BI workloads to the most cost-effective warehouses in real time. Policies such as

IF avg_gb_scanned > 25 THEN route_to XL

ensured that large scans received appropriate resources, while ad-hoc and lightweight queries stayed on smaller, cheaper warehouses.

The deployment took less than two weeks and integrated seamlessly with the customer’s existing Snowflake setup—no code changes required.

The Results

- 45 % reduction in total Snowflake credit consumption within 60 days

- 35 % faster average query execution, with no SLA breaches

- Zero operational overhead for manual warehouse tuning or scaling

- Full transparency through Keebo Workload Intelligence dashboards, enabling precise chargebacks by department

Key Takeaways

By adopting Keebo KQR, the customer gained the same automation Snowflake now targets with Adaptive Compute—but with greater visibility, controllability, and predictability. Engineers refocused on analytics delivery, not warehouse babysitting, while Finance gained consistent monthly forecasting of Snowflake costs.

7 Conclusion

Adaptive Compute is a welcome step toward a more serverless Snowflake experience. It will simplify operations and improve query latency. Cost, however, is unlikely to fall, and may rise, until Snowflake introduces finer budget controls.

In the meantime, proven tools like Keebo Smart Query Routing can deliver the same automation with transparent, adjustable rules. If you’d like to see how routing can improve performance and reduce spend today, reach out for a free trial.

FAQs: Adaptive Compute in Snowflake

- What is Adaptive Compute in Snowflake and how does it differ from traditional warehouses? Adaptive Compute automatically selects warehouse sizes for queries, replacing manual sizing and scaling. Unlike traditional warehouses, it removes the need to pick T-shirt sizes and manages resources dynamically based on query plans.

- Will Adaptive Compute reduce my Snowflake costs immediately? Not necessarily. While Adaptive Compute simplifies operations and may improve efficiency, cost savings are not guaranteed in the first release. Organizations may even see higher average warehouse usage depending on workload patterns.

- How does Adaptive Compute affect performance tuning and SLA management? Adaptive Compute optimizes query placement internally, but customers lose per-query control over warehouse sizing. High-priority workloads may influence resource allocation, potentially affecting latency or cost for other queries.

- What are the key limitations of Adaptive Compute? Limitations include reduced visibility into per-query cost decisions, potential overspend during high-priority bursts, and reliance on Snowflake’s internal router without user-level control for performance-cost trade-offs.

- How does Keebo Smart Query Routing complement Adaptive Compute? Keebo’s Smart Query Routing (KQR) provides centralized, flexible, and rule-based routing, allowing organizations to optimize performance and costs while retaining full visibility and governance over workloads.