Updated on Jan 19, 2026

1. Databricks Cost Optimization Introduction

As organizations scale their data analytics and machine learning operations, Databricks has become the platform of choice for processing massive volumes of data and building sophisticated AI models. However, with this power comes a challenge that keeps data leaders awake at night: escalating cloud costs. Without effective Databricks cost optimization strategies, organizations often find themselves paying far more than necessary while leaving performance gains on the table.

The reality is most organizations waste 30-50% of their Databricks spending on idle resources, oversized clusters, and inefficient workload configurations. But it doesn’t have to be this way. By implementing proven Databricks cost optimization techniques, companies can dramatically reduce their cloud bills while actually improving performance and reliability.

This comprehensive guide explores actionable strategies that help organizations achieve substantial cost savings without compromising on the capabilities that make Databricks indispensable for modern data teams.

2. Understanding the Databricks Cost Structure

Before diving into optimization strategies, it’s essential to understand how Databricks pricing works and where costs typically accumulate. Databricks operates on a consumption-based model measured in Databricks Units (DBUs), a normalized unit of processing capability billed per hour of use at a rate that varies by compute type, pricing tier, and cloud provider.

For classic (non-serverless) compute, your bill has two parts: Databricks’ own platform charges, calculated from DBU consumption, and your cloud provider’s charges for the underlying virtual machines. With serverless compute, infrastructure costs are bundled into the DBU pricing, so there’s no separate compute bill from the cloud provider.
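To make the two billing components concrete, here is a minimal sketch of the arithmetic for a single job run. On classic compute the bill combines DBU charges with cloud VM charges; on serverless, infrastructure is folded into the DBU rate. Every rate below is a hypothetical placeholder, not a published Databricks or cloud price, and the function names are illustrative.

```python
# Minimal sketch of Databricks cost arithmetic for one job run.
# All rates are HYPOTHETICAL placeholders; use your own contract and region pricing.


def classic_run_cost(dbus_per_hour: float, dbu_rate: float,
                     vm_cost_per_hour: float, hours: float) -> float:
    """Classic compute: Databricks DBU charge plus the cloud provider's VM charge."""
    databricks_charge = dbus_per_hour * hours * dbu_rate
    cloud_charge = vm_cost_per_hour * hours
    return databricks_charge + cloud_charge


def serverless_run_cost(dbus_per_hour: float, dbu_rate: float, hours: float) -> float:
    """Serverless compute: infrastructure is bundled into the DBU rate."""
    return dbus_per_hour * hours * dbu_rate


# Hypothetical classic cluster: 8 DBU/h at $0.15/DBU plus $2.40/h of VMs, for 2.5 hours.
print(round(classic_run_cost(8, 0.15, 2.40, 2.5), 2))  # 9.0
```

In this example the VM charge ($6.00) is larger than the DBU charge ($3.00), which is why classic-compute optimizations such as spot instances target the cloud side of the bill.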

The challenge with this model is that costs can escalate quickly as workloads scale. Organizations running data workloads on Databricks now spend an average of $300,000 per year, making cost management a critical business priority.

Several factors drive Databricks compute costs higher than expected:

- Always-on compute: Clusters or SQL warehouses that remain active during idle periods

- Oversized or non-autoscaling compute: Compute that provisions more resources than workloads actually need

- Inefficient queries: Queries that scan more data than necessary because of missing or weak filters

- Suboptimal data layout: For example, poor partitioning or clustering that limits the effectiveness of targeted reads

- Lack of visibility: No clear view of which teams, projects, or jobs consume the most compute and DBUs

Understanding these cost drivers is the first step toward implementing effective Databricks cost optimization best practices that deliver measurable results.

3. Strategic Compute Resource Management: The Foundation of Cost Control

Compute resource configuration represents the single largest lever for Databricks cost optimization. How you size, configure, and manage your compute resources – such as clusters and warehouses – directly determines both performance and cost outcomes.

3.1 Right-Sizing Compute Resources for Actual Workload Needs

One of the most common mistakes organizations make is provisioning compute resources based on peak capacity rather than typical workload patterns. This “better safe than sorry” approach results in compute resources running at 20-30% utilization during normal operations, wasting 70-80% of compute spending.

Autoscaling and auto-termination allocate resources dynamically based on workload requirements, so you pay only for the compute capacity you actually use.

To right-size your compute resources effectively:

- Analyze historical usage patterns to understand actual resource consumption across different workloads

- Start small and scale up rather than starting large and hoping workloads will grow into the capacity

- Use workload-appropriate instance types: CPU-optimized for transformations, memory-optimized for large joins, and GPU-accelerated for machine learning training

- Monitor cluster utilization metrics continuously to identify opportunities for downsizing without impacting performance

The goal is to maintain cluster utilization above 75-80% during active job execution. Anything significantly below this threshold indicates wasted capacity and optimization opportunities.
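As a minimal sketch of the monitoring step, assume you have exported per-cluster CPU utilization samples (0-100) taken during active job execution. The data shape and the helper name `underutilized_clusters` are hypothetical; the threshold mirrors the 75% figure above.

```python
# Minimal sketch: flag clusters whose average utilization is below target.
# Assumes HYPOTHETICAL exported samples: {cluster_id: [cpu_percent, ...]}.
from statistics import mean

TARGET_UTILIZATION = 75.0  # lower bound of the 75-80% target


def underutilized_clusters(samples: dict[str, list[float]],
                           target: float = TARGET_UTILIZATION) -> dict[str, float]:
    """Return {cluster_id: average utilization} for clusters below the target."""
    flagged = {}
    for cluster_id, values in samples.items():
        if not values:
            continue  # no samples collected; skip rather than guess
        avg = mean(values)
        if avg < target:
            flagged[cluster_id] = round(avg, 1)
    return flagged


samples = {
    "etl-nightly": [82.0, 79.5, 85.0],
    "adhoc-analytics": [22.0, 31.0, 18.5],
}
print(underutilized_clusters(samples))  # {'adhoc-analytics': 23.8}
```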

3.2 Implementing Intelligent Autoscaling

Static cluster configurations are a relic of the past for cost-conscious organizations. Autoscaling allows compute resources to dynamically adjust their size based on real-time workload demands, starting small and expanding only when necessary before scaling back down.

When implementing autoscaling on Databricks (on any cloud provider), consider these best practices:

- Set minimum cluster sizes based on baseline workload requirements, not peak capacity

- Configure maximum sizes with enough headroom for occasional spikes without excessive over-provisioning

- Use separate cluster configurations for different job types rather than one-size-fits-all approaches

- Monitor scaling patterns to ensure compute resources aren’t thrashing (constantly scaling up and down, which adds overhead)

Organizations that implement intelligent autoscaling typically achieve 40-60% cost reductions compared to static cluster sizing, all while maintaining or improving performance characteristics.
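The practices above can be expressed as a cluster specification in the shape used by the Databricks Clusters API (`autoscale.min_workers`, `autoscale.max_workers`, `autotermination_minutes`). The runtime version, node type, and worker counts are placeholders rather than recommendations, and `check_autoscale` is a hypothetical sanity check, not a Databricks function.

```python
# Cluster spec in the shape accepted by the Databricks Clusters API.
# spark_version, node_type_id, and worker counts are PLACEHOLDERS.
etl_cluster_spec = {
    "cluster_name": "etl-autoscaling",
    "spark_version": "15.4.x-scala2.12",  # placeholder runtime version
    "node_type_id": "i3.xlarge",          # placeholder AWS node type
    "autoscale": {
        "min_workers": 2,  # sized for baseline load, not peak
        "max_workers": 8,  # headroom for occasional spikes
    },
    "autotermination_minutes": 30,
}


def check_autoscale(spec: dict) -> list[str]:
    """Hypothetical sanity check: return warnings (empty list if none)."""
    auto = spec.get("autoscale")
    if auto is None:
        # A spec with only "num_workers" is a fixed-size cluster.
        return ["fixed-size cluster: consider enabling autoscaling"]
    lo, hi = auto["min_workers"], auto["max_workers"]
    if lo > hi:
        return ["min_workers exceeds max_workers"]
    if lo == hi:
        return ["min_workers equals max_workers: cluster cannot scale"]
    return []


print(check_autoscale(etl_cluster_spec))  # []
```

Keeping one spec per job type, rather than a shared one-size-fits-all cluster, lets each workload carry its own baseline and ceiling.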

3.3 Auto-Termination: Eliminating Idle Cluster Costs

Perhaps the simplest yet most impactful Databricks cost optimization technique is configuring auto-termination for compute resources. Many organizations inadvertently leave interactive compute resources running overnight, during weekends, or after data engineers complete their work—accumulating charges for compute resources doing absolutely nothing.

Configure aggressive auto-termination policies:

- Interactive compute resources: A 15-30 minute idle timeout for development clusters and 5-20 minutes for SQL warehouses

- Scheduled jobs: Jobs should run on job compute resources that automatically terminate upon completion

- Production workloads: Even production compute resources should terminate when idle and restart when new work arrives (SQL warehouses, for example, start again on the next incoming query)

This single configuration change can eliminate 20-40% of wasted spending with zero impact on functionality or user experience.
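A policy like this is easy to audit. The sketch below checks an inventory of resources against the idle-timeout ceilings above. The timeout field names match the Databricks APIs (clusters use `autotermination_minutes`, SQL warehouses use `auto_stop_mins`, and in both a value of 0 means "never stop automatically"), but the `kind` and `name` inventory fields and the helper itself are hypothetical. Job clusters are out of scope because they terminate when the job completes.

```python
# Hypothetical audit of idle-timeout settings against the policy above.
POLICY_MAX_MINUTES = {"cluster": 30, "warehouse": 20}
TIMEOUT_FIELD = {"cluster": "autotermination_minutes", "warehouse": "auto_stop_mins"}


def idle_timeout_findings(resources: list[dict]) -> list[str]:
    """Return one finding per resource that violates the idle-timeout policy."""
    findings = []
    for r in resources:
        kind = r["kind"]
        minutes = r.get(TIMEOUT_FIELD[kind])
        limit = POLICY_MAX_MINUTES[kind]
        if minutes is None:
            findings.append(f"{r['name']}: idle timeout not set explicitly")
        elif minutes == 0:
            findings.append(f"{r['name']}: auto-stop disabled")
        elif minutes > limit:
            findings.append(f"{r['name']}: {minutes} min exceeds {limit} min policy")
    return findings


resources = [
    {"kind": "cluster", "name": "dev-sandbox", "autotermination_minutes": 120},
    {"kind": "cluster", "name": "shared-dev", "autotermination_minutes": 0},
    {"kind": "warehouse", "name": "bi-warehouse", "auto_stop_mins": 10},
]
for finding in idle_timeout_findings(resources):
    print(finding)
```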

4. Leveraging Spot Instances for Massive Cost Reductions

One of the most powerful strategies for Databricks cost optimization involves using spot or preemptible instances for cluster nodes. All major cloud providers offer unused capacity at discounts of 60-90% compared to on-demand pricing.

4.1 Understanding Spot Instance Economics

Spot instances work on a market-driven model where supply and demand determine pricing, which fluctuates based on current utilization levels. When cloud providers have excess capacity, they make it available at dramatically reduced rates. The trade-off is that these instances can be reclaimed on short notice when the provider needs capacity for on-demand customers.

For many Databricks workloads, this trade-off is entirely acceptable. When a spot worker is reclaimed, Spark reschedules the lost tasks on the remaining executors, recomputing lost data from lineage where needed, and Databricks attempts to replace the lost node, falling back to on-demand capacity if the cluster is configured to do so. For fault-tolerant workloads like batch ETL jobs, data preparation, and model training, spot instances deliver exceptional value.
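A spot discount does not translate one-to-one into a lower bill. On classic compute it applies only to the VM price, while DBU charges stay the same, and the driver typically stays on-demand. The worked example below uses hypothetical prices ($1.00/h per VM, $0.50/h DBU charge per node, a 70% spot discount) and ignores time lost to re-running interrupted tasks. The helper name is illustrative.

```python
# HYPOTHETICAL inputs: $1.00/h VM price, $0.50/h DBU charge per node, 70% spot discount.


def cluster_hourly_cost(workers: int, vm_rate: float, dbu_cost_per_node: float,
                        spot_discount: float, spot_workers: bool) -> float:
    """Hourly cost of one driver plus `workers` worker nodes."""
    driver = vm_rate + dbu_cost_per_node  # driver stays on-demand
    worker_vm = vm_rate * (1 - spot_discount) if spot_workers else vm_rate
    # The spot discount reduces only the VM price; DBU charges are unchanged.
    return driver + workers * (worker_vm + dbu_cost_per_node)


on_demand = cluster_hourly_cost(10, 1.00, 0.50, 0.70, spot_workers=False)
with_spot = cluster_hourly_cost(10, 1.00, 0.50, 0.70, spot_workers=True)
savings = 1 - with_spot / on_demand
print(f"${on_demand:.2f}/h -> ${with_spot:.2f}/h ({savings:.0%} lower)")  # $16.50/h -> $9.50/h (42% lower)
```

The overall reduction grows as the VM share of your bill grows, so the same 70% discount yields different savings on different clusters.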

4.2 Strategic Spot Instance Implementation

To maximize savings while maintaining reliability:

- Use spot instances for worker nodes while keeping driver nodes on on-demand instances to prevent job failures

- Diversify instance types: on AWS, configure clusters with fleet instance types so Databricks can choose among compatible instance types across availability zones using a capacity-optimized allocation strategy, which reduces eviction risk

- Set an appropriate maximum spot price (on AWS, expressed as a percentage of the on-demand price) based on your workload's tolerance for interruption

- Implement fallback to on-demand instances when spot capacity is unavailable

- Prefer spot capacity for dev/test environments, where interruptions have minimal business impact

Organizations implementing comprehensive spot instance strategies for optimizing Databricks workloads typically reduce compute costs by 50-70% for appropriate workload types without meaningful impact on completion times.
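On AWS, most of these practices map onto the `aws_attributes` block of a Clusters API specification: `first_on_demand` keeps the first node (the driver) on-demand, `availability: "SPOT_WITH_FALLBACK"` falls back to on-demand when spot capacity is unavailable, `zone_id: "auto"` lets Databricks pick an availability zone, and `spot_bid_price_percent` caps the spot price relative to on-demand. Azure and GCP clusters use analogous `azure_attributes` and `gcp_attributes` blocks; check the API reference for their values. The fleet node type name, runtime version, and worker counts below are assumptions, and `driver_on_demand` is a hypothetical helper.

```python
# AWS cluster spec in the shape used by the Databricks Clusters API.
# Values are illustrative, not recommendations.
spot_cluster_spec = {
    "cluster_name": "batch-etl-spot",
    "spark_version": "15.4.x-scala2.12",  # placeholder runtime version
    "node_type_id": "m-fleet.xlarge",     # assumed fleet instance type; check your workspace
    "autoscale": {"min_workers": 2, "max_workers": 10},
    "aws_attributes": {
        "first_on_demand": 1,                  # first node (the driver) on-demand
        "availability": "SPOT_WITH_FALLBACK",  # use on-demand if spot is unavailable
        "zone_id": "auto",                     # let Databricks choose the AZ
        "spot_bid_price_percent": 100,         # max spot price as % of on-demand
    },
    "autotermination_minutes": 30,
}


def driver_on_demand(spec: dict) -> bool:
    """Hypothetical helper: True if the driver is kept on an on-demand instance."""
    return spec.get("aws_attributes", {}).get("first_on_demand", 0) >= 1


print(driver_on_demand(spot_cluster_spec))  # True
```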

5. Data Organization: The Often-Overlooked Cost Optimization Lever

While most organizations focus on compute optimization, data organization and storage strategies significantly impact both query performance and overall costs. Poor data organization forces queries to scan far more data than necessary, directly increasing compute consumption and execution time.

5.1 Modern Partitioning and Clustering Strategies

Traditional partitioning approaches create static filesystem hierarchies that are difficult to change as access patterns evolve. Many teams end up with over-partitioned tables containing thousands of tiny partitions, which actually degrades performance rather than improving it.

Modern Databricks optimization techniques focus on dynamic clustering rather than static partitioning:

- Liquid clustering provides flexibility to adjust clustering keys without rewriting all data

- Z-ordering colocates related information within files, enabling efficient data skipping

- Partition only when necessary: Tables under 1TB often perform better without partitioning

When you do partition, ensure each partition contains at least 1GB of data to avoid the small file problem that creates overhead from metadata management.

5.2 File Size Optimization and Compaction

The size of your data files dramatically affects query performance and costs. Files that are too small create excessive metadata and per-file overhead, because every file must be listed, opened, and scheduled as work. Files that are too large weaken data skipping, because each file's statistics cover a wider range of values, forcing queries to read irrelevant data.
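Data skipping is the mechanism that file layout ultimately serves. Delta Lake records per-file minimum and maximum column values in its transaction log, and a query reads only files whose range could contain matching rows. The toy model below is plain Python, not Delta internals: the `FileStats` class and file names are hypothetical. It shows why a well-clustered layout (narrow ranges per file) lets a date filter skip most files, while a scattered layout forces every file to be read.

```python
# Toy model of file-level data skipping using per-file min/max statistics.
from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    min_date: str  # ISO dates compare correctly as strings
    max_date: str
    size_mb: int


def files_to_read(files: list[FileStats], start: str, end: str) -> list[FileStats]:
    """Keep files whose [min_date, max_date] range overlaps the query range [start, end]."""
    return [f for f in files if f.max_date >= start and f.min_date <= end]


# Well-clustered layout: each file covers one week of January.
clustered = [FileStats(f"part-{i}", f"2025-01-{i * 7 + 1:02d}", f"2025-01-{i * 7 + 7:02d}", 256)
             for i in range(4)]
# Poorly clustered layout: every file spans the whole period.
scattered = [FileStats(f"part-{i}", "2025-01-01", "2025-01-28", 256) for i in range(4)]

for name, layout in [("clustered", clustered), ("scattered", scattered)]:
    hit = files_to_read(layout, "2025-01-08", "2025-01-14")
    print(name, len(hit), "files,", sum(f.size_mb for f in hit), "MB")
# clustered 1 files, 256 MB
# scattered 4 files, 1024 MB
```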

Modern Databricks includes automated features that handle file optimization:

- Auto-compaction combines small files within Delta table partitions after writes complete

- Optimized writes produce better-sized files during write operations

- Regular OPTIMIZE commands compact files and apply Z-ordering (OPTIMIZE ... ZORDER BY) for frequently queried tables

Maintaining optimal file sizes (typically 16MB-1GB depending on workload) reduces both storage costs and the compute required to process queries.
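The maintenance features above are configured with SQL. The sketch below generates the statements for one table: it sets the Delta properties `delta.autoOptimize.optimizeWrite` and `delta.autoOptimize.autoCompact`, then runs either `OPTIMIZE ... ZORDER BY` or, for liquid clustering, `ALTER TABLE ... CLUSTER BY` followed by a plain `OPTIMIZE`. It refuses to combine the two because Z-ordering and liquid clustering cannot be used on the same table. The helper and the table name `main.sales.orders` are hypothetical.

```python
# Hypothetical helper that emits Delta maintenance SQL for one table.
from typing import Optional


def maintenance_statements(table: str,
                           zorder_cols: Optional[list[str]] = None,
                           cluster_by_cols: Optional[list[str]] = None) -> list[str]:
    if zorder_cols and cluster_by_cols:
        raise ValueError("Z-ordering and liquid clustering cannot be combined on one table")
    stmts = [
        f"ALTER TABLE {table} SET TBLPROPERTIES ("
        "'delta.autoOptimize.optimizeWrite' = 'true', "
        "'delta.autoOptimize.autoCompact' = 'true')"
    ]
    if cluster_by_cols:
        # Liquid clustering: declare keys, then OPTIMIZE clusters data by them.
        stmts.append(f"ALTER TABLE {table} CLUSTER BY ({', '.join(cluster_by_cols)})")
        stmts.append(f"OPTIMIZE {table}")
    elif zorder_cols:
        stmts.append(f"OPTIMIZE {table} ZORDER BY ({', '.join(zorder_cols)})")
    else:
        stmts.append(f"OPTIMIZE {table}")  # compaction only
    return stmts


for stmt in maintenance_statements("main.sales.orders", zorder_cols=["customer_id"]):
    print(stmt)
```

In a notebook, each statement can be executed with `spark.sql(stmt)`. Liquid clustering also cannot be enabled on a partitioned table, which this sketch does not check.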

5.3 Storage Management and Data Retention

Delta Lake provides time travel, allowing access to historical data versions for auditing, debugging, and error recovery. However, retaining unlimited historical versions indefinitely creates unnecessary storage costs.

Implement intelligent data retention policies:

- Configure appropriate time travel windows: Based on actual recovery requirements (typically 7-30 days)

- Run VACUUM commands regularly: To remove old file versions beyond your retention period

- Identify and archive or delete unused tables and datasets

- Implement tiered storage strategies: Move cold data to lower-cost storage classes using cloud object storage features (AWS S3 Intelligent-Tiering, Azure Blob Storage access tiers, Google Cloud Storage classes)

These practices can reduce storage costs by 40-60% while maintaining all data necessary for operational and compliance requirements.
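The time travel window and VACUUM schedule can be kept consistent from one setting. The sketch below converts a window in days into the Delta table properties `delta.deletedFileRetentionDuration` and `delta.logRetentionDuration` and a matching `VACUUM ... RETAIN n HOURS` statement. As a sketch policy, it rejects windows under Delta's 7-day default so files still needed by concurrent readers or writers are not removed, and it rejects a log retention shorter than the window, since time travel needs both the log and the data files. The helper and table name are hypothetical.

```python
# Hypothetical helper that keeps Delta retention settings and VACUUM in sync.
MIN_RETENTION_DAYS = 7  # Delta's default deleted-file retention


def retention_statements(table: str, time_travel_days: int,
                         log_retention_days: int = 30) -> list[str]:
    if time_travel_days < MIN_RETENTION_DAYS:
        raise ValueError("sketch policy: keep at least Delta's 7-day default")
    if log_retention_days < time_travel_days:
        raise ValueError("log retention shorter than the window would limit time travel")
    retain_hours = time_travel_days * 24  # VACUUM RETAIN takes hours
    return [
        f"ALTER TABLE {table} SET TBLPROPERTIES ("
        f"'delta.deletedFileRetentionDuration' = 'interval {time_travel_days} days', "
        f"'delta.logRetentionDuration' = 'interval {log_retention_days} days')",
        f"VACUUM {table} RETAIN {retain_hours} HOURS",
    ]


for stmt in retention_statements("main.sales.orders", time_travel_days=14):
    print(stmt)
```

After VACUUM runs, time travel to versions older than the retention window is no longer possible, so set the window from actual recovery requirements.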

6. Query and Workload Optimization Techniques

The way you write queries and structure data processing workloads has enormous impact on compute consumption and costs. Inefficient queries waste resources by scanning unnecessary data, performing redundant computations, and using suboptimal execution strategies.

6.1 Leveraging Adaptive Query Execution

Adaptive Query Execution (AQE), an Apache Spark feature enabled on Databricks, dynamically optimizes query plans based on runtime statistics, providing several cost-relevant benefits:

- Dynamic shuffle partition coalescing adjusts partition counts based on actual data size

- Dynamic join strategy switching converts expensive sort-merge joins to efficient broadcast joins when appropriate

- Skew join optimization automatically detects and handles data distribution imbalances

AQE is enabled by default in recent Databricks runtimes and requires no code changes, making it one of the easiest Databricks cost optimization strategies to implement.
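Because AQE is on by default, the main risk is a legacy cluster configuration that switches it off. Below is a minimal audit sketch over a cluster's `spark_conf` map (the field name used in the Clusters API). The three keys are standard Apache Spark settings; the helper `aqe_overrides` is hypothetical.

```python
# Standard Apache Spark AQE settings; a value of "false" disables the feature.
AQE_KEYS = (
    "spark.sql.adaptive.enabled",
    "spark.sql.adaptive.coalescePartitions.enabled",
    "spark.sql.adaptive.skewJoin.enabled",
)


def aqe_overrides(spark_conf: dict[str, str]) -> list[str]:
    """Return AQE keys that a cluster's spark_conf explicitly sets to false.

    Absent keys are left at the runtime default (enabled on recent runtimes).
    """
    return [key for key in AQE_KEYS if spark_conf.get(key, "").strip().lower() == "false"]


legacy_conf = {"spark.sql.adaptive.enabled": "false", "spark.sql.shuffle.partitions": "2000"}
print(aqe_overrides(legacy_conf))  # ['spark.sql.adaptive.enabled']
```

On a running cluster, `spark.conf.get("spark.sql.adaptive.enabled")` returns the effective value from a notebook.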

6.2 Query Design Best Practices

Beyond automatic optimizations, thoughtful query design significantly impacts costs:

- Apply filters early to leverage predicate pushdown and reduce data volumes before expensive operations

- Use broadcast joins for small dimension tables (under 10MB) to eliminate shuffle operations

- Minimize data shuffling by partitioning tables on commonly joined columns

- Cache intermediate results when the same data will be accessed multiple times

- Use columnar file formats like Parquet or Delta to read only required columns

Each of these optimizations reduces the amount of data processed, directly lowering compute consumption and costs.
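The toy below, in plain Python rather than Spark, illustrates two of these ideas with hypothetical data. Filtering before a join halves the rows that flow into it. A broadcast join copies the small dimension table into an in-memory hash map, so the large table is streamed past it instead of being shuffled. Spark's optimizer already pushes many filters down automatically; the sketch only shows why that reduction matters.

```python
# Toy illustration in plain Python (not Spark). Data and helper are hypothetical.

orders = [{"order_id": i, "region_id": i % 4, "year": 2024 + i % 2} for i in range(1000)]
regions = [{"region_id": r, "name": n} for r, n in enumerate(["NA", "EU", "APAC", "LATAM"])]


def broadcast_join(fact: list[dict], dim: list[dict], key: str) -> list[dict]:
    """Hash join: copy the small table into a dict and stream the large table past it."""
    lookup = {row[key]: row for row in dim}  # the "broadcast" copy of the small table
    return [{**f, **lookup[f[key]]} for f in fact if f[key] in lookup]


# Filter late: every order flows into the join, then half the rows are discarded.
late = [r for r in broadcast_join(orders, regions, "region_id") if r["year"] == 2025]

# Filter early: discard non-matching orders first, so half as many rows are joined.
early_input = [o for o in orders if o["year"] == 2025]
early = broadcast_join(early_input, regions, "region_id")

assert late == early  # same result either way
print(len(orders), "rows joined late vs", len(early_input), "rows joined early")
```

In PySpark, `pyspark.sql.functions.broadcast(df)` is the hint for requesting a broadcast join explicitly; Spark also broadcasts automatically below `spark.sql.autoBroadcastJoinThreshold`, which is 10MB by default in Apache Spark.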

6.3 Choosing the Right Compute Type and Mode

Selecting the appropriate compute for your workload type is critical for cost efficiency.

For Spark Workloads (ETL, Data Processing, ML):

- Use Job Compute for production pipelines, scheduled jobs, and automated model training. Job clusters terminate immediately upon completion and cost significantly less than All-Purpose compute, reducing costs by up to 40-50% for these workloads.

- Reserve All-Purpose Compute for interactive development, exploration, and ad-hoc analysis where clusters need to remain available.

For SQL Workloads (BI, Dashboards, Ad-Hoc Queries):

- Use Databricks SQL Warehouses for production SQL workloads. Avoid running steady-state BI and dashboard queries on All-Purpose clusters, which have materially higher effective costs for SQL.

- Serverless SQL Warehouses automatically scale capacity and minimize idle costs with short auto-stop timeouts (e.g., 5-10 min), making them ideal for sporadic or variable workloads.

- Pro SQL Warehouses (provisioned) may be more cost-effective for constant, high-volume query streams when right-sized with appropriate auto-stop settings.

This strategic separation of compute by workload type – SQL vs. Spark, and automated vs. interactive – typically delivers the largest immediate cost reduction with minimal code changes.

7. Comprehensive Monitoring and Governance

Effective Databricks cost optimization requires comprehensive visibility into how resources are consumed and clear governance policies that prevent waste.

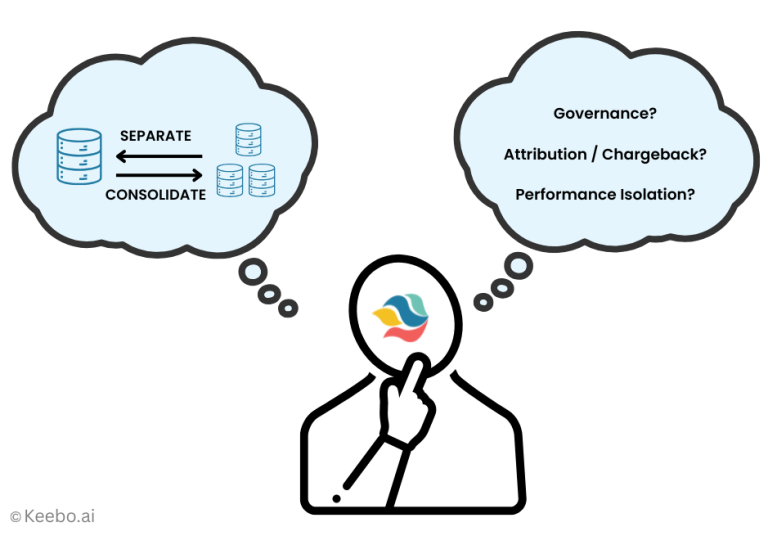

7.1 Implementing Strategic Tagging

Organizations should implement effective tagging by defining tag naming conventions that attribute usage to specific user groups and provide granular insight by role, product, or service.

Develop a comprehensive tagging strategy that tracks:

- Business unit or department for chargeback and cost allocation

- Project or application to understand ROI on specific initiatives

- Environment type (development, staging, production) to identify optimization opportunities

- Cost center for financial reporting and budget management

Consistent tagging enables detailed cost attribution, helping you identify which teams, projects, or workloads consume the most resources and where optimization efforts should focus.
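A tagging convention is only useful if it is enforced and reported on. The sketch below checks a resource's `custom_tags` map (the Clusters API field name) against a hypothetical four-tag convention mirroring the list above, and sums cost per tag value. The record shape with a precomputed `cost_usd` is a simplification: in practice the rows would come from a query over `system.billing.usage` (its `custom_tags` and `usage_quantity` columns) priced with your DBU rates. Reporting "untagged" spend explicitly is a design choice that makes gaps in the convention visible rather than silently dropping that spend.

```python
from collections import defaultdict

# Hypothetical tag convention mirroring the four dimensions listed above.
REQUIRED_TAGS = {"business_unit", "project", "environment", "cost_center"}


def missing_tags(custom_tags: dict[str, str]) -> set[str]:
    """Tags from the convention that a resource's custom_tags map lacks."""
    return REQUIRED_TAGS - set(custom_tags)


def cost_by_tag(records: list[dict], tag: str) -> dict[str, float]:
    """Sum cost per value of one tag; untagged usage is grouped explicitly."""
    totals: dict[str, float] = defaultdict(float)
    for rec in records:
        totals[rec["custom_tags"].get(tag, "untagged")] += rec["cost_usd"]
    return dict(totals)


records = [
    {"custom_tags": {"project": "churn-model"}, "cost_usd": 120.0},
    {"custom_tags": {"project": "bi-dashboards"}, "cost_usd": 80.0},
    {"custom_tags": {"project": "churn-model"}, "cost_usd": 30.0},
    {"custom_tags": {}, "cost_usd": 50.0},
]
print(cost_by_tag(records, "project"))
print(missing_tags({"project": "churn-model", "environment": "prod"}))
```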

7.2 Budget Alerts and Cost Anomaly Detection

Reactive cost management—discovering overruns after the fact—is far more expensive than proactive monitoring and alerting. Implement budget alerts at multiple levels:

- Workspace-level budgets to control overall spending

- Team or project budgets based on your tagging strategy

- Anomaly detection to identify unexpected cost spikes before they become significant

Modern cost management approaches combine historical trend analysis with machine learning to detect unusual patterns early, allowing teams to investigate and address issues before they impact budgets.
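Before reaching for machine learning, a simple statistical baseline often catches the spikes that matter. The sketch below flags a day whose cost sits more than three standard deviations above the mean of the preceding seven days. It is a swapped-in technique (a trailing-window z-score), not the ML approach described above, and the daily cost series is hypothetical.

```python
from statistics import mean, stdev


def cost_anomalies(daily_costs: list[float], window: int = 7,
                   threshold: float = 3.0) -> list[int]:
    """Indices of days whose cost exceeds the trailing-window mean by > threshold std devs."""
    flagged = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (daily_costs[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


costs = [410, 395, 420, 405, 398, 415, 402, 408, 1250, 411]
print(cost_anomalies(costs))  # [8]
```

Only upward deviations are flagged, since unexpected drops rarely threaten a budget; a perfectly flat history (zero deviation) is skipped to avoid dividing by zero.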

7.3 Regular Cost Reviews and Optimization Cycles

Databricks cost optimization isn’t a one-time project—it’s an ongoing process as workloads evolve, data volumes grow, and usage patterns change. Establish regular optimization cycles:

- Weekly reviews of cost trends and anomalies

- Monthly deep dives into resource utilization and optimization opportunities

- Quarterly strategic reviews of overall spending patterns and ROI

These regular reviews ensure optimization strategies remain effective as your Databricks environment evolves.

8. Automated Optimization: The Modern Approach

While manual optimization techniques are valuable, they require constant expertise and attention. As Databricks environments grow more complex with hundreds of workloads and dynamic usage patterns, manual optimization becomes increasingly challenging to maintain.

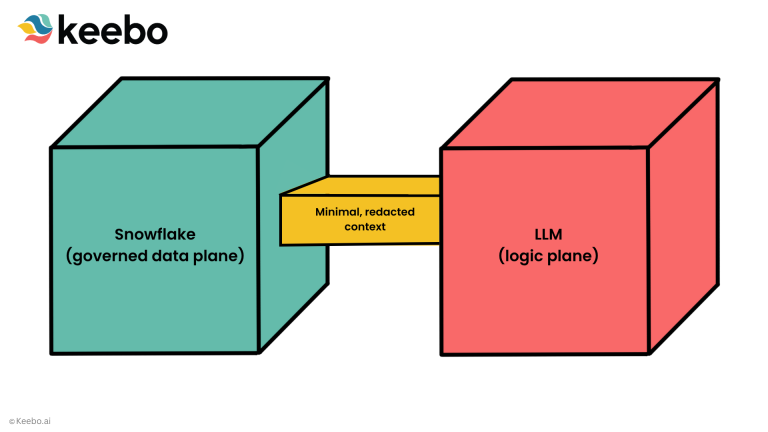

The “modern approach” to cost optimization is no longer about manually tuning Spark configurations or managing individual virtual machines. It is about leveraging Platform AI to handle the infrastructure, and Automated Optimization solutions to manage the capacity and policy decisions that remain.

This is where automated optimization platforms deliver transformative value. Keebo represents the next generation of Databricks cost optimization best practices through continuous, AI-powered optimization that adapts to changing workload patterns without manual intervention.

Unlike traditional “observe and report” tools that simply provide recommendations requiring manual implementation, Keebo actively optimizes your Databricks environment in real-time:

- Continuous monitoring of query patterns and resource utilization

- Intelligent right-sizing of warehouses and compute resources based on actual workload requirements

- Automated tuning of configurations to maximize efficiency

- Six-layer performance guarantees ensuring optimizations never compromise query performance or SLAs

Organizations using automated optimization platforms achieve approximately 27% average cost savings while maintaining guaranteed performance—all without requiring dedicated engineering resources for ongoing optimization.

For teams lacking specialized performance engineering expertise, dealing with dynamic workloads that change throughout the day, or needing to guarantee SLAs while controlling costs, automated solutions like Keebo eliminate the constant optimization burden. You can explore flexible pricing options aligned with your specific needs and savings goals.

9. Building a Cost-Conscious Culture

Technical Databricks cost optimization techniques deliver significant savings, but sustainable cost management requires cultural changes across your data organization.

9.1 Training and Enablement

Ensure your teams understand the cost implications of their decisions:

- Train engineers on Databricks pricing models and how different choices impact costs

- Share cost dashboards broadly so teams understand their resource consumption

- Celebrate optimization wins to reinforce the importance of cost-conscious development

- Provide cost estimation tools so developers can understand the financial impact of new workloads before deployment

When engineers understand how their decisions affect costs, they naturally make more efficient choices during development rather than requiring extensive optimization after the fact.

9.2 Cost Ownership and Accountability

Assign clear ownership for cost management:

- Make teams responsible for their own Databricks spending through chargeback models

- Include cost metrics in project planning and success criteria

- Review resource consumption regularly with team leads and managers

- Reward teams that successfully optimize costs while maintaining or improving performance

When cost ownership is clear and teams are accountable for their spending, optimization becomes a natural part of the development workflow rather than an afterthought.

10. The Foundation: Leveraging Platform-Based AI

The first step in any modern optimization strategy is to let Databricks manage the underlying infrastructure. By shifting workloads to Databricks Serverless and leveraging native intelligence, you eliminate the low-level manual overhead that plagued earlier data platform management.

- Infrastructure Management: Serverless Compute removes the need to select specific VM types (e.g., i3.xlarge), manage driver versions, or configure complex startup scripts. The platform handles the hardware provisioning instantly.

- Execution Optimization: Adaptive Query Execution (AQE) optimizes query plans at runtime, and Liquid Clustering (particularly when combined with predictive optimization) maintains data layout automatically, reducing the need for manual partitioning and query tuning.

11. Advanced Optimization Strategies

Beyond fundamental techniques, several advanced approaches can deliver additional cost savings for specific scenarios:

11.1 Photon Engine for Query Acceleration

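Databricks’ Photon vectorized query engine can provide 2-3x performance improvements for SQL workloads, which reduces compute time. On classic clusters Photon is billed at a higher DBU rate, so the cost benefit depends on the speedup outweighing that rate difference; on Databricks SQL warehouses, Photon is enabled by default. Photon is particularly effective for: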

- Aggregations and complex joins

- Window functions and analytical queries

- Processing semi-structured data (JSON, CSV)

For Databricks performance optimization, enabling Photon often delivers immediate improvements with no code changes required.
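Whether Photon saves money on classic compute is a break-even calculation: a faster run shrinks both VM time and DBU time, but the DBU portion is billed at a higher rate. The worked sketch below assumes a DBU multiplier of 2.0 and a bill that is half DBU charges; both are hypothetical inputs, so substitute your own rates. `runtime_engine` is the Clusters API field that selects Photon; the helper is illustrative.

```python
# Worked arithmetic under HYPOTHETICAL inputs; check your own DBU rates.

# Clusters API field that selects the Photon engine on classic compute.
photon_spec_fragment = {"runtime_engine": "PHOTON"}


def photon_cost_ratio(speedup: float, dbu_multiplier: float, dbu_share: float) -> float:
    """Cost of a Photon run relative to the same run on the standard engine.

    speedup:        how many times faster the job runs with Photon
    dbu_multiplier: Photon DBU rate / standard DBU rate (assumed value)
    dbu_share:      fraction of the standard run's cost that is DBU charges;
                    the remainder is VM cost, whose hourly rate is unchanged.
    A result below 1.0 means Photon is cheaper.
    """
    relative_hourly_rate = dbu_share * dbu_multiplier + (1 - dbu_share)
    return relative_hourly_rate / speedup


for speedup in (1.5, 2.0, 3.0):
    print(speedup, round(photon_cost_ratio(speedup, dbu_multiplier=2.0, dbu_share=0.5), 2))
```

Under these assumptions the ratios are 1.0, 0.75, and 0.5: a 1.5x speedup merely breaks even, while the 2-3x range cited above cuts cost by 25-50%.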

11.2 Strategic Use of Serverless Compute

Databricks Serverless eliminates cluster management overhead by providing instant-on compute that automatically scales to zero when idle. For sporadic workloads with unpredictable timing, serverless compute prevents paying for idle compute resources between executions.

Serverless is particularly cost-effective for:

- Intermittent data science experimentation

- Scheduled jobs with variable execution frequency

- Development and testing environments with irregular usage
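For intermittent workloads, the comparison that matters is billed time rather than hourly rate. In the sketch below, a classic cluster is billed for each run plus its full idle timeout before auto-termination, while serverless is billed only for the run itself. The $10/h classic and $15/h serverless rates are hypothetical, and the model ignores startup time, the brief serverless idle tail, and runs that reuse a still-warm cluster.

```python
# HYPOTHETICAL rates: $10/h for a classic cluster, $15/h for serverless.


def classic_cost(runs: int, run_min: float, idle_timeout_min: float,
                 rate_per_hour: float) -> float:
    """Each run keeps the cluster up for the run plus the full idle timeout."""
    return runs * (run_min + idle_timeout_min) / 60 * rate_per_hour


def serverless_cost(runs: int, run_min: float, rate_per_hour: float) -> float:
    """Serverless is billed only while work runs (startup and idle tail ignored)."""
    return runs * run_min / 60 * rate_per_hour


# Six 20-minute runs per day, 30-minute idle timeout on the classic cluster.
print(classic_cost(6, 20, 30, 10.0), serverless_cost(6, 20, 15.0))  # 50.0 30.0
```

Even at a 50% higher hourly rate, serverless wins here because the classic cluster spends more time idling than working; for long, back-to-back runs the comparison reverses.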

12. Measuring Optimization Success

Effective Databricks cost optimization requires clear metrics that demonstrate success and identify areas needing additional attention:

12.1 Key Performance Indicators

Track these essential metrics:

- Total monthly spending and trend over time

- Cost per query or cost per job to identify inefficient workloads

- Cluster utilization rates to ensure right-sizing

- Idle time percentage to measure auto-termination effectiveness

- Spot instance adoption rate and savings achieved

- Query performance metrics to ensure optimization doesn’t degrade performance
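Several of these KPIs fall out of the same usage records. The sketch below computes total cost, idle-time percentage (billed hours not spent doing work), spot adoption (share of node-hours on spot), and average cost per run per workload. The `UsageRecord` shape and its sample values are hypothetical; in practice you would assemble such records from billing system tables and cluster event data.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:  # hypothetical record shape
    workload: str
    cost_usd: float
    busy_hours: float        # hours the compute was doing work
    billed_hours: float      # hours the compute was up and billed
    spot_node_hours: float
    total_node_hours: float


def workload_kpis(records: list[UsageRecord]) -> dict:
    billed = sum(r.billed_hours for r in records)
    busy = sum(r.busy_hours for r in records)
    spot = sum(r.spot_node_hours for r in records)
    nodes = sum(r.total_node_hours for r in records)
    per_workload: dict[str, list[float]] = {}
    for r in records:
        per_workload.setdefault(r.workload, []).append(r.cost_usd)
    return {
        "total_cost": sum(r.cost_usd for r in records),
        "idle_pct": round(100 * (billed - busy) / billed, 1),
        "spot_pct": round(100 * spot / nodes, 1),
        "avg_cost_per_run": {w: sum(c) / len(c) for w, c in per_workload.items()},
    }


records = [
    UsageRecord("daily-etl", 40.0, 1.5, 2.0, 18, 20),
    UsageRecord("daily-etl", 44.0, 1.6, 2.0, 18, 20),
    UsageRecord("feature-build", 16.0, 0.5, 1.0, 0, 8),
]
print(workload_kpis(records))
```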

12.2 Cost-to-Value Ratio

Don’t optimize costs in isolation—measure the business value generated relative to spending:

- Calculate cost per business outcome (reports generated, models trained, insights delivered)

- Track user satisfaction and query performance alongside cost metrics

- Understand which workloads deliver the highest ROI to guide resource allocation

The goal isn’t minimizing absolute spending—it’s maximizing value generated per dollar spent.

Conclusion

Databricks cost optimization is no longer just about “trimming the fat” – it is a critical architectural requirement for scaling data operations sustainably.

The strategies outlined in this guide reflect a fundamental shift in how we manage cloud resources. We have moved away from the era of Infrastructure Management – where engineers spent hours selecting instance types, managing spot markets, and writing initialization scripts. Today, we are in the era of Configuration Management.

The New Optimization Formula: Effective optimization now relies on a powerful combination of technologies:

Platform AI (The Foundation): Adopting Databricks Serverless and Liquid Clustering to handle the heavy lifting of infrastructure provisioning and data layout.

Automated Optimization (The Intelligence): Leveraging solutions like Keebo to dynamically manage the capacity decisions that remain – automatically resizing SQL Warehouses and tuning idle policies in real-time.

From “Cost Cutting” to “Value Efficiency”: The goal isn’t just to lower the bill; it’s to ensure that every dollar spent delivers maximum impact. By eliminating the static configurations that force you to over-provision for peaks and pay for waste during valleys, organizations can achieve cost reductions of 40-60% while simultaneously improving query performance and user experience.

The Cost of Inaction: Every day your platform runs on rigid, unadjusted configurations is a day you are paying for capacity you don’t need. Whether you are just beginning with basic auto-termination policies or are ready to implement fully automated dynamic sizing, the most important step is to stop treating optimization as a one-time project.

The tools now exist to make cost optimization continuous, intelligent, and automatic. By integrating Platform AI with Automated Optimization, you ensure your Databricks environment evolves as fast as your business does – efficiently, reliably, and automatically.

Frequently Asked Questions (FAQs)

What is Databricks cost optimization and why does it matter?

Databricks cost optimization involves implementing strategies and techniques to reduce cloud spending on the Databricks platform while maintaining or improving performance. It matters because organizations typically waste 30-50% of their Databricks budget on idle resources, oversized compute resources, and inefficient configurations that can be eliminated through proper optimization.

What are the most effective Databricks cost optimization techniques?

The most impactful techniques include intelligent cluster autoscaling, aggressive auto-termination policies, leveraging spot instances for appropriate workloads, proper data organization with optimal file sizes, separating job compute from all-purpose compute, and implementing comprehensive tagging for cost visibility. Organizations combining these approaches typically achieve 40-60% cost reductions.

How does autoscaling help with Databricks cost optimization?

Autoscaling allows compute resources to dynamically adjust their size based on real-time workload demands, starting small and expanding only when necessary before scaling back down. This ensures you only pay for compute capacity during active processing rather than maintaining large compute resources during idle periods. Organizations implementing intelligent autoscaling typically see 40-60% cost reductions compared to static cluster configurations.

Should I use spot instances for all Databricks workloads?

Spot instances work excellently for fault-tolerant workloads like batch ETL, data preparation, and model training, offering 60-90% discounts compared to on-demand pricing. However, they’re less suitable for interactive workloads or jobs requiring guaranteed completion times. The optimal approach uses spot instances for worker nodes on appropriate workload types while keeping driver nodes on on-demand instances for reliability.

What is the difference between job compute and all-purpose compute?

Job compute is optimized for running automated, non-interactive workloads and terminates immediately upon completion, while all-purpose compute remains available for interactive queries and exploration. Job compute typically costs 40-50% less than all-purpose compute for the same resources, making it the optimal choice for production ETL jobs, scheduled analytics, and automated model training.

How does data organization impact Databricks cost optimization?

Poor data organization forces queries to scan far more data than necessary, directly increasing compute consumption. Optimal file sizes (16MB-1GB), appropriate partitioning or clustering, and regular compaction reduce the amount of data processed per query, lowering compute costs while improving performance. Organizations optimizing data layout typically see 30-40% reductions in query costs.

What role does monitoring play in Databricks cost optimization strategies?

Comprehensive monitoring provides visibility into resource consumption patterns, enabling you to identify waste and optimization opportunities. Implementing strategic tagging, budget alerts, and regular cost reviews ensures optimization strategies remain effective as workloads evolve. Without proper monitoring, you’re essentially flying blind and will miss significant savings opportunities.

Can I optimize costs without compromising performance?

Absolutely. Proper Databricks cost optimization best practices actually improve performance while reducing costs by eliminating waste, right-sizing resources, and ensuring efficient query execution. Techniques like adaptive query execution, optimal file sizes, and appropriate cluster configurations deliver better performance at lower cost compared to default configurations.

How does automated optimization differ from manual approaches?

Manual optimization requires constant monitoring and expert intervention to implement configuration changes as workloads evolve. Automated solutions continuously adjust settings in real-time, adapting to dynamic usage patterns 24/7 without human intervention while guaranteeing performance. Organizations using automated platforms like Keebo typically achieve sustained savings of approximately 27% with minimal operational overhead.

What is the ROI timeline for implementing Databricks cost optimization?

Organizations implementing comprehensive Databricks cost optimization techniques typically see measurable savings within the first month, with full ROI achieved within 1-3 months. Simple changes like auto-termination and right-sizing deliver immediate impact, while more sophisticated strategies like spot instances and data organization optimization provide sustained long-term benefits.

How do I get started with Databricks cost optimization?

Start by implementing quick wins like aggressive auto-termination policies and basic cluster right-sizing based on utilization metrics. Then implement comprehensive tagging for cost visibility, configure autoscaling, and begin using job compute for batch workloads. For sustained optimization without dedicated engineering resources, consider automated platforms like Keebo that handle continuous optimization automatically.