When teams come to us with Snowflake performance or cost issues, one of the most common culprits we find is poorly configured clustering keys. It’s not glamorous, and it’s rarely the first thing people think about when troubleshooting slow queries or high bills – but it’s often the biggest opportunity for improvement.

Here’s the typical pattern. Someone creates a table in Snowflake, loads some data, and everything works fine initially. Queries run fast enough, costs seem reasonable, and the team moves on to the next thing. Six months later, the table has grown to multiple terabytes, queries are getting slower, and compute costs are climbing. Everyone assumes this is just the natural cost of growth.

But here’s what’s actually happening: your data is poorly clustered, which means Snowflake is scanning way more data than it needs to for every query. And unlike most performance problems, this one gets exponentially worse as your table grows.

- What Clustering Actually Does

- The Default Behavior Nobody Talks About

- When Clustering Actually Matters

- How to Choose a Clustering Key

- Multi-Column Clustering Keys

- The Cost of Clustering

- How to Check Your Current Clustering

- Beyond Manual Clustering: Automated Optimization

- The Bottom Line

1. What Clustering Actually Does

When you load data into Snowflake, it automatically gets organized into micro-partitions – compressed, columnar storage units that hold between 50-500MB of uncompressed data. Snowflake maintains metadata about each micro-partition: the min/max values for each column, number of distinct values, and other statistics.

When you run a query, Snowflake’s optimizer checks this metadata to figure out which micro-partitions it can skip entirely. This is called pruning, and it’s one of the most powerful optimization techniques available in Snowflake. If your WHERE clause filters on a date column, and Snowflake can determine that certain micro-partitions contain data from completely different dates, those partitions get pruned – never read, never scanned, never charged for.

But pruning only works well when your data is properly clustered. If the values you’re filtering on are scattered randomly across hundreds or thousands of micro-partitions, Snowflake can’t prune effectively. You end up scanning most of the table even when you only need a tiny slice of it.

2. The Default Behavior Nobody Talks About

By default, Snowflake clusters your data based on insertion order. If you’re loading data chronologically – like event logs or transaction records that arrive in time sequence – this works great. Your data naturally ends up well-clustered by timestamp, and queries with date filters prune efficiently.

But most tables don’t stay this way. Maybe you’re using Snowpipe for continuous loading, which disrupts natural clustering. Maybe you’re doing updates and deletes that scatter related rows across different micro-partitions. Or maybe your data just doesn’t arrive in a useful order to begin with.

The problem is that Snowflake never changes this default behavior. It doesn’t analyze your query patterns and say “hey, you’re always filtering on customer_id, maybe we should cluster on that instead.” The automatic clustering service will maintain whatever clustering key you’ve defined, but it won’t pick one for you.

3. When Clustering Actually Matters

Not every table needs explicit clustering. If your table is under a few hundred gigabytes and queries run fast, you’re probably fine with the default behavior.

But once you get into the multi-terabyte range, clustering becomes critical. I’ve seen queries go from 20 minutes to 2 seconds – a 600x improvement – just by adding the right clustering key. The difference between scanning 300,000 micro-partitions versus 4 micro-partitions is the difference between burning hundreds of dollars and spending pennies.

The typical threshold is around 1TB, but I’ve seen clustering make a meaningful difference on tables as small as 100-200GB if the query patterns are right.

4. How to Choose a Clustering Key

This is where most people get stuck. The answer isn’t complicated, but it does require actually looking at your data. Not sure if clustering is right for your table? Use this decision framework to evaluate whether defining an explicit clustering key makes sense:

Start by analyzing your query patterns. Look at QUERY_HISTORY for the past 30-60 days and identify your most expensive or most frequent queries against the table. What columns show up in WHERE clauses constantly? Those are your candidates. This is where Workload Intelligence becomes valuable – understanding which queries are actually costing you money and which columns they filter on helps you make informed decisions about clustering rather than guessing. The best clustering keys have a few characteristics:

4.1. High Enough Cardinality

A column like status with only 3-4 values won’t enable much pruning. You need enough distinct values to meaningfully partition your data.

4.2. Low Enough Cardinality

On the flip side, a column with millions or billions of unique values (like a UUID) may sometimes work for query performance (pruning works fine), but in most cases becomes extremely expensive to maintain when data changes frequently.

4.3. Used in Selective Filters

If most queries filter on this column and typically only need a small percentage of the data, clustering will help a lot.

4.4. Relatively Stable

Clustering keys that change frequently (through updates) cost more to maintain because Snowflake has to constantly recluster the data.

For time-series data, date columns are usually excellent choices. For user-facing applications, customer_id or user_id often work well. For multi-tenant systems, tenant_id is typically a winner.

5. Multi-Column Clustering Keys

You can cluster on multiple columns, but order matters a lot. Snowflake clusters hierarchically – first by the first column, then within each group by the second column, and so on.

If you cluster on (date, customer_id), queries filtering only on date will prune well. But queries filtering only on customer_id will scan most of the table. The first column in your clustering key is by far the most important.

General rule: put lower cardinality columns first, but only if they’re actually useful for pruning. A status column with 3 values might technically be lower cardinality than a date column, but if you’re filtering by date in 90% of queries, date should come first.

6. The Cost of Clustering

Defining a clustering key isn’t free. Snowflake’s automatic clustering service will continuously reorganize your data to maintain good clustering as new data arrives or existing data changes. This consumes credits – and understanding how Snowflake pricing works is critical to making informed decisions about clustering.

For tables that are queried frequently but updated infrequently, the performance gains usually dwarf the clustering costs. But for tables with constant updates or heavy DML, the clustering overhead can add up.

You can monitor clustering costs in the AUTOMATIC_CLUSTERING_HISTORY view. Start with one or two high-value tables, measure the impact, and expand from there. This is part of a broader approach to monitoring Snowflake performance effectively.

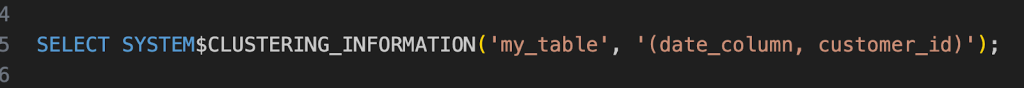

7. How to Check Your Current Clustering

Before you define a clustering key, check how well your table is currently clustered using the SYSTEM$CLUSTERING_INFORMATION function:

This returns JSON with metrics like average_depth (lower is better – under 30-50 is generally good) and average_overlaps (how many micro-partitions contain overlapping value ranges).

The nice thing about this function is you can test different clustering strategies without actually implementing them. Try different column combinations and see which ones show the best clustering metrics.

8. Beyond Manual Clustering: Automated Optimization

Getting clustering right is just one piece of the puzzle when it comes to Snowflake cost optimization. While you can manually define and monitor clustering keys, there are other optimization opportunities that require constant adjustment based on changing workload patterns.

This is where automated approaches become valuable. Warehouse optimization goes beyond just clustering – it involves dynamically adjusting warehouse sizes, managing suspension policies, and routing queries to the most efficient compute resources. These are decisions that need to happen in real-time, not quarterly during your optimization review.

For teams serious about improving query performance while reducing costs, the combination of proper clustering with intelligent automation can deliver significantly better results than either approach alone.

9. The Bottom Line

If you’re running a multi-terabyte table in Snowflake and you’ve never explicitly thought about clustering, you’re probably wasting money. The ROI on getting clustering right is often immediate: faster queries, lower compute costs, and happier users.

Start by identifying your 3-5 largest, most-queried tables. Analyze the query patterns. Run SYSTEM$CLUSTERING_INFORMATION to understand current state. Pick a clustering key that matches how the data is actually used. Monitor the impact.

Most teams I work with see 30-50% cost reductions on their biggest tables just from proper clustering. Some see 10x improvements or better. That’s not optimization at the margins – that’s fundamentally changing how efficiently your data warehouse operates.

Want to see how your current Snowflake setup stacks up? Schedule a free assessment to understand where you’re leaving money on the table.