1) Executive summary

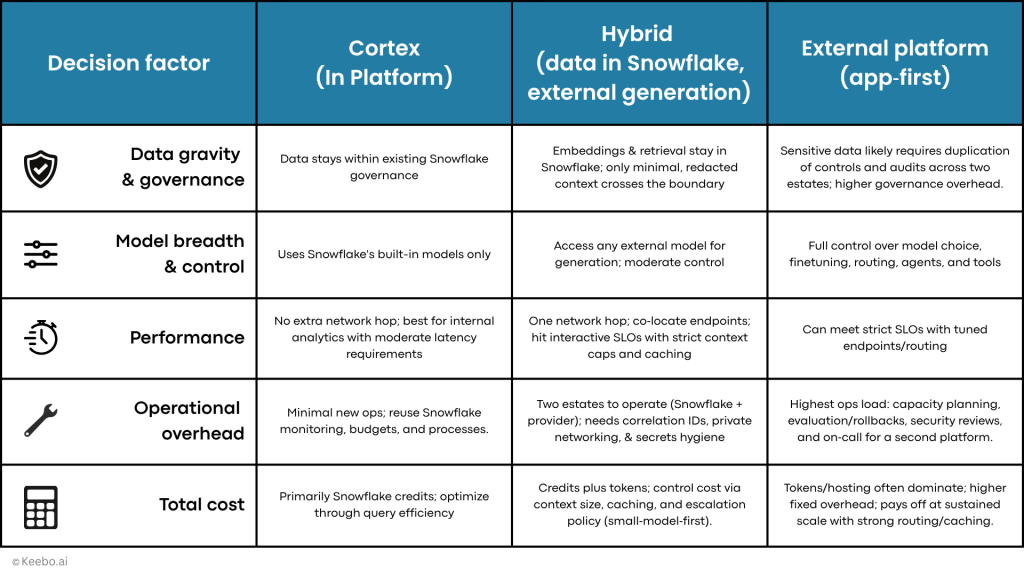

Most Snowflake customers exploring large language models (LLMs) face a practical fork in the road: Should we start with Snowflake Cortex, adopt a hybrid pattern, or build on an external LLM platform? The best answer depends on five variables you can evaluate quickly: data gravity & governance, model breadth & control, performance envelope, operational tolerance, and total cost of ownership.

- Cortex works best when your highest priorities are keeping data resident in Snowflake, applying consistent RBAC/masking, minimizing egress, and getting to an internal prototype fast (e.g., summarization/classification on governed tables, LLM‑assisted SQL, or internal knowledge bots). This path often simplifies model monitoring and data observability because it reuses telemetry and controls you already operate within Snowflake.

- A hybrid approach makes sense when you want to keep retrieval and embeddings in Snowflake while calling an external model for generation. You retain model flexibility while limiting data movement—but you’ll accept added plumbing for caching, retries, and cross‑system observability, plus a greater need for upstream query optimization to shape prompts from SQL results.

- External platforms are worth considering when your use case needs specialized models, advanced tool‑use/agentic workflows, or ultra‑low latency for customer‑facing apps. If you are simultaneously deciding Databricks vs Snowflake at the platform layer, that choice will strongly influence your LLM architecture as well.

Cost lens (for Snowflake shops): Compare options on unit economics: context size, tokens in/out, cache hit rate, concurrency, and end‑to‑end latency. Within Snowflake, map those to Snowflake pricing concepts (credits for serverless features and compute, plus any egress); with external models, add token‑based fees, finetuning and storage charges, and managed endpoint costs. For a pragmatic overview of credit math and budgeting traps, see our guide on Snowflake Pricing, Explained: A Comprehensive 2025 Guide to Costs & Savings.

Quality & safety: Establish a light but repeatable evaluation loop early. Track grounded‑answer rate, summary fidelity, and, where relevant, SQL correctness—then tie those to operational signals you already monitor (warehouse load, p50/p95 latency, error budgets). This is where your existing model monitoring and data observability practices reduce risk as adoption grows.

Rule of thumb:

If your data and controls live in Snowflake, start with Cortex. Shift to hybrid when you need model flexibility without wholesale data movement. Go external when specialization or application scale makes the economics or capabilities compelling. Reassess quarterly; models, token prices, and Snowflake features are evolving.

2) Why LLMs with Snowflake now?

If you already operate your core analytics on Snowflake, the case for LLMs is less about novelty and more about meeting people where they work, maintaining governed access to data, and controlling cost as usage scales.

1) Existing workflows. Analysts and engineers spend their day in SQL, dashboards, and notebooks tied to Snowflake. LLMs augment that work in practical ways: natural language querying, summarization of result sets, classification and tagging pipelines, and assisted authoring of SQL. For an overview of these workflows and caveats around performance/correctness, see Snowflake Copilot: How the LLM‑Powered Assistant Can Improve SQL Query Efficiency & Performance (with Examples).

2) Governance requirements. You’ve likely invested years in Snowflake security: RBAC, masking, row‑level policies, audit trails. Moving raw or sensitive data to another system for LLM processing introduces new compliance obligations and observability gaps. Cortex is attractive because it keeps LLM capabilities close to governed data. When external models are compelling, a hybrid RAG pattern (embeddings and retrieval in Snowflake; minimal, redacted context to an external model) reduces egress and keeps lineage/telemetry consolidated. This aligns naturally with your data observability program and smooths incident response.

3) Economics are the new bottleneck.

Capability is abundant; predictability isn’t. As adoption grows, the compound effect of context size, prompt composition, and concurrency dominates the bill. On the Snowflake side, that maps to Snowflake pricing (credits for serverless features and compute, plus any network egress). On the model side, it’s tokens in/out, finetuning and storage, and managed endpoint fees. The most honest KPI is $ per successful task, not $ per token. For credit guardrails and budgeting tips, see our guide on Snowflake Pricing, Explained: A Comprehensive 2025 Guide to Costs & Savings.

4) External model trade-offs.

External platforms provide frontier models, domain‑specialized options, and rich agent frameworks. They excel when you need customization or are building user‑facing applications with strict latency SLOs. The trade‑offs: more systems to secure and observe, added network complexity, and a higher coordination burden across teams. If your organization is also weighing Databricks vs Snowflake, that platform decision often drives your LLM architecture as much as model choice.

5) Maintaining control while scaling.

The teams that scale LLM usage successfully standardize just enough controls early:

- Maintain a small, representative offline evaluation set for each use case, and run it any time prompts/templates or model versions change.

- Capture operational KPIs from day one: p50/p95 latency, cache hit rate, query retries, and a budget envelope tied to credits/tokens.

- Wire error budgets and alerting into your existing observability stack. For a clear primer on why observability goes beyond dashboards, see Observability vs. Monitoring in Snowflake: Understanding the Difference.
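The controls above can be sketched as a small harness that turns one evaluation run into a success rate plus p50/p95 latency. This is a minimal illustration, not a prescribed implementation: `EvalResult` and `summarize_eval` are hypothetical names, and what counts as "success" (grounded answer, correct SQL, faithful summary) is left to each use case.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class EvalResult:
    case_id: str
    success: bool       # e.g. answer was grounded, or generated SQL was correct
    latency_ms: float   # end-to-end latency observed for this case


def summarize_eval(results: list[EvalResult]) -> dict:
    """Reduce one evaluation run to the KPIs tracked over time."""
    if len(results) < 2:
        raise ValueError("need at least two results to compute percentiles")
    latencies = [r.latency_ms for r in results]
    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = quantiles(latencies, n=100, method="inclusive")
    return {
        "success_rate": sum(r.success for r in results) / len(results),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
    }
```

Re-running the same function whenever a prompt template or model version changes keeps the numbers comparable from one run to the next.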

6) Data location drives architecture.

Design patterns that minimize data movement: keep retrieval close to source tables, redact aggressively, and cache stable answers. These patterns shorten feedback loops, cut cost, and reduce risk while preserving most of the benefits of model flexibility. They also build directly on practices Snowflake teams already use to run production analytics: model monitoring, data observability, and Snowflake security.

3) What is Snowflake Cortex?

Cortex provides LLM and AI capabilities within Snowflake’s existing governance framework. Instead of moving information into a separate application stack, you invoke language and semantic functions where your access controls, masking policies, lineage, and budgets already apply. That proximity doesn’t magically solve quality or cost—but it reduces surface area and lets you reuse the operational habits you’ve built for the warehouse.

3.1 The building blocks (and when to use each)

Cortex isn’t a single tool; it’s a small set of primitives you can compose. The most common are LLM functions, embeddings and semantic search, Cortex Search/Knowledge Extensions, Cortex Analyst, Document AI, and Copilot. The pattern to keep in mind is simple: bring questions to the data; keep sensitive rows and columns under existing controls.

LLM functions handle text generation, summarization, translation, extraction, and classification directly in SQL or Python. These are the fastest path for adding language understanding to existing queries or pipelines because they run where the data already lives. They’re useful for templated summaries, entity extraction, or enriching records before you write them back to tables.
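As a minimal sketch of what "directly in SQL" can look like, the Python helper below builds a statement that summarizes a text column with `SNOWFLAKE.CORTEX.SUMMARIZE`. The helper itself and the table and column names (`support_tickets`, `ticket_id`, `body`) are hypothetical, and function availability varies by region and account, so check the current Snowflake documentation before relying on it.

```python
import re

# Plain, unquoted identifiers only; qualified names would need extra handling.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def summarize_sql(table: str, id_col: str, text_col: str, limit: int = 100) -> str:
    """Build a SELECT that summarizes one text column per row, in place."""
    for name in (table, id_col, text_col):
        # Reject anything that is not a simple identifier to avoid SQL injection.
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    return (
        f"SELECT {id_col}, SNOWFLAKE.CORTEX.SUMMARIZE({text_col}) AS summary\n"
        f"FROM {table}\n"
        f"LIMIT {int(limit)}"
    )


print(summarize_sql("support_tickets", "ticket_id", "body", limit=10))
```

Because the statement runs against a table or view, whatever masking and row policies apply to that object also apply to the text the function sees.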

Embeddings and semantic search enable RAG and semantic lookup over internal content. By generating and storing embeddings in Snowflake, you keep indexing, freshness, and access rules close to source tables. This is typically how you ground answers in enterprise text while avoiding broad data egress.
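To make the idea of semantic lookup concrete, here is a pure-Python sketch of the ranking step: score stored chunk embeddings against a query embedding by cosine similarity and keep the top k. It is illustrative only. In practice the vectors would be produced by an embedding model and the ranking would run in-platform; `top_k` and the tiny two-dimensional vectors are assumptions for the example.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec: list[float], rows: list[tuple[str, list[float]]], k: int = 3) -> list[str]:
    """Return the ids of the k chunks most similar to the query, best first."""
    scored = [(cosine(query_vec, vec), chunk_id) for chunk_id, vec in rows]
    scored.sort(reverse=True)
    return [chunk_id for _, chunk_id in scored[:k]]


rows = [("a", [1.0, 0.0]), ("b", [0.0, 1.0]), ("c", [1.0, 1.0])]
print(top_k([1.0, 0.0], rows, k=2))  # prints ['a', 'c']
```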

Cortex Search and Knowledge Extensions provide managed indexes over curated content that teams can share and reuse. They apply the same grounding idea as above, but packaged so that search becomes a defined, governed asset.

Cortex Analyst translates natural language questions into SQL queries. This can be a cleaner path for business‑facing experiences, provided you keep the underlying datasets and access policies tight.

Document AI extracts structured data from unstructured documents like invoices, statements, and forms. The advantage is consolidating extraction, validation, and storage under the same governance and audit you already run.

Finally, Copilot targets developer and analyst productivity. It helps author and explain SQL within Snowflake, and it’s a low‑friction way to put LLM assistance where people already work. For a practical walk‑through of that workflow and the guardrails that matter, see Snowflake Copilot: How the LLM‑Powered Assistant Can Improve SQL Query Efficiency & Performance (with Examples).

These features share a common approach: data remains in Snowflake with controlled access through views or indexes.

3.2 How Cortex is priced (at a glance)

Because Cortex operates inside Snowflake, usage is metered the same way you’re used to: consumption rolls up to credits under your account and edition. In practice, costs show up in a few familiar places:

- Retrieval and preparation (e.g., building views, joining, filtering, pre‑aggregating) consume warehouse compute.

- Embeddings and search involve compute for index builds and refresh, plus ongoing query costs when you perform semantic lookup.

- LLM functions and related services add consumption based on how often and how heavily you invoke them.

- Cloud‑services activity (e.g., index maintenance) appears when you set tighter freshness targets or update large corpora.

This means optimizing upstream SQL to minimize prompt size and managing LLM usage like other Snowflake workloads: tag queries by project, monitor consumption against budgets, and look at cost per successful task rather than any single line item. (If you want a deeper refresher on credit math and guardrails, see our pricing guide for Snowflake.)

3.3 Governance and day‑2 operations

Cortex’s main advantage is that it inherits your Snowflake controls. Roles, masking, row‑level policies, and audit don’t need to be re‑implemented in a new stack; you apply them at the view or table that feeds a prompt or search. That design choice reduces the blast radius of mistakes and shortens incident response because you can trace everything with the tools you already use.

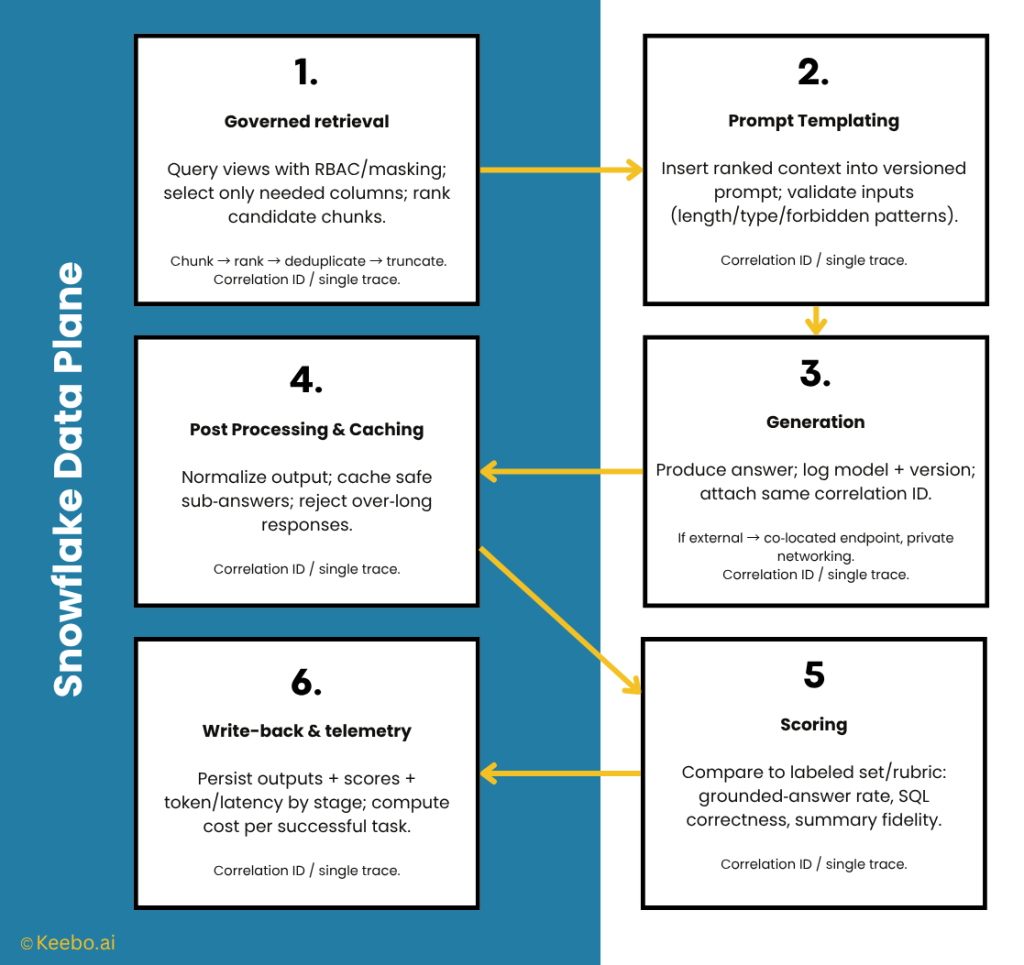

Operationally, treat a Cortex‑powered pipeline like any other production job: version prompt templates and retrieval logic within your CI/CD and source control; attach query tags so ownership and budgets are clear; and propagate a correlation ID from the moment a request is made through retrieval and generation so you can separate retrieval latency from generation latency and tie quality signals to cost. If you later adopt a hybrid pattern for specific capabilities, that same trace will stitch the external call back to the Snowflake query that created its context—no new observability religion required.
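One way to sketch the tagging and tracing described above: generate a correlation ID per request, embed it in a JSON query tag set through Snowflake's `QUERY_TAG` session parameter, and time each stage separately. The tag fields (`project`, `stage`, `correlation_id`) and the helper names are assumptions for illustration, not a required schema.

```python
import json
import time
import uuid
from contextlib import contextmanager


def new_correlation_id() -> str:
    """One opaque ID per request, carried through retrieval and generation."""
    return uuid.uuid4().hex


def query_tag_sql(project: str, stage: str, correlation_id: str) -> str:
    """Build the statement that tags subsequent queries in this session."""
    tag = json.dumps(
        {"project": project, "stage": stage, "correlation_id": correlation_id},
        sort_keys=True,
    )
    escaped = tag.replace("'", "''")  # escape quotes for the SQL string literal
    return f"ALTER SESSION SET QUERY_TAG = '{escaped}'"


@contextmanager
def timed(stage: str, timings: dict):
    """Record wall-clock milliseconds for one stage under its name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = (time.perf_counter() - start) * 1000.0


cid = new_correlation_id()
timings: dict = {}
with timed("retrieval", timings):
    statement = query_tag_sql("kb-bot", "retrieval", cid)  # then run retrieval
with timed("generation", timings):
    pass  # call the model here, passing cid along in request metadata
```

Logging `cid` together with `timings` gives one record per request in which retrieval and generation latency can be read side by side.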

3.4 Where Cortex fits—and when to reach for hybrid/external

Cortex suits internal use cases requiring data to remain within Snowflake. Common wins include summarizing or classifying records, extracting entities or facts into structured columns, grounding answers in curated corpora, and improving developer/analyst throughput with SQL assistance. You’ll move fast because you aren’t introducing a second platform to secure and observe.

Consider hybrid approaches for specialized models, agent capabilities, or when external models demonstrate clear improvements. Even then, keep embeddings and retrieval in Snowflake and send only a minimal, redacted context across the boundary. If your product truly demands deep orchestration, frequent model swaps, and A/B control, adopt an external platform deliberately—but keep Snowflake as the data plane so lineage, access, and cost attribution remain intact. While you can implement basic A/B routing at the application layer, statistically rigorous A/B testing with built-in evaluation gates remains a strength of external platforms.

Start where your data and controls live, and add complexity only when your evaluation set proves you need it.

4) What do “other LLMs” mean in practice?

When Snowflake is your system of record, “other LLMs” simply means running generation and agent logic outside the Snowflake governance and billing boundary. You still keep retrieval—and the sensitive rows and columns it touches—inside Snowflake. Let’s examine the three main options—managed APIs, cloud ML platforms, and self-hosted open-source models—and understand how each affects your risk profile and Snowflake integration.

4.1 Managed LLM APIs (general‑purpose endpoints)

A managed API is the lightest way to add external model capability. You call an HTTPS endpoint with a prompt and receive a completion; the provider handles capacity, upgrades, and availability. For Snowflake customers, the appeal is obvious: you can prototype quickly and access frontier features without standing up infrastructure.

That convenience comes with responsibilities. Because prompts and retrieved context leave Snowflake, you must apply the same disciplines you use for any cross‑boundary data flow: redact PII before it departs, restrict secrets to least privilege, and log every hop so data observability spans both sides. Cost control also becomes a two‑currency exercise (credits in Snowflake; tokens with the provider). Teams that succeed here treat the external API as a generation service rather than a second data platform: embeddings and retrieval stay in Snowflake; only a minimal, ranked, truncated context crosses the boundary. If you later decide you need more knobs—finetuning, routing, or built‑in evaluations—you can step up to a platform without rewriting your Snowflake side.

4.2 Cloud ML platforms (hosted/finetuned models with orchestration)

Cloud ML platforms collect many capabilities you might otherwise build yourself: multiple base models (including open‑source), finetuning pipelines, prompt versioning, A/B testing, evaluation suites, vector stores, and agent/tool frameworks. They’re attractive when your application is the product and you expect frequent model swaps, complex tool use, or release cadence measured in days, not quarters.

Implementing advanced agentic workflows—where an LLM reasons, calls tools, and iterates—typically requires an orchestration layer. Frameworks like LangChain or LlamaIndex manage state and error handling for these multi-step processes, adding development and maintenance complexity beyond single-shot prompts.

For Snowflake customers, the key is maintaining clear boundaries. Snowflake remains the data plane for views, masking, and retrieval, while the platform handles prompt orchestration and evaluation. That architecture gives you platform conveniences without duplicating sources of truth. It also keeps model monitoring coherent: retrieval cost and freshness (Snowflake) can be analyzed alongside generation latency and token spend (platform) using a single correlation ID. One caution: platforms make it easy to mirror data “temporarily.” Resist that. If retrieval truly must run outside Snowflake for a specific feature, mirror only small, curated artifacts (such as embeddings or distilled corpora) and keep a clear freshness contract.

4.3 Self‑hosted open‑source LLMs (maximum control, maximum responsibility)

Running models yourself—on Kubernetes, managed inference servers, or specialized GPU fleets—offers the most control over data locality, logging, and customization. You pick the base model, decide what is retained, and set hard limits on what crosses your boundary. Common models include Meta Llama 3, Mistral 7B, Mixtral 8×7B, Falcon 40B, Gemma 7B, and Qwen2‑7B.

What you gain in privacy and control, you pay for in ops: capacity planning, upgrades, safety and bias reviews, model monitoring, and 24×7 incident response. This path usually makes sense when regulatory posture demands it or when you already have the SRE/ML‑infra depth to run inference as a first‑class service. Even then, the Snowflake‑centric best practice holds: keep embeddings and retrieval in Snowflake; ship only the redacted, ranked context to your endpoint; and write outputs back to Snowflake for lineage and audit. And always double‑check each model’s license terms with legal/security before production use.

4.4 Interoperability with Snowflake: how to connect safely

Regardless of which external option you pick, the integration contract back to Snowflake should look the same. Start with narrow views that project only the fields you truly need; attach tags and masking/row policies so sensitive data is controlled at the source. Build context by chunking, ranking, and truncating, then redact identifiers. Co‑locate the endpoint in the same region as your Snowflake account where possible, and prefer private networking over public egress. To keep data observability intact, stamp a correlation ID on the retrieval query and propagate it through the prompt and the model response; that single trace lets you separate retrieval latency from generation latency, compute cost per successful task, and reconstruct incidents without guesswork. Finally, cache intentionally: safe, stable sub‑answers get TTLs tied to Snowflake freshness signals; volatile facts do not.
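The context-building step (rank, deduplicate, truncate, redact) can be sketched as follows. This is a simplified illustration under stated assumptions: the two regular expressions are placeholder redaction rules rather than a complete PII policy, and `estimate_tokens` counts whitespace-separated words, which only approximates a real tokenizer.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_DIGITS = re.compile(r"\b\d{6,}\b")  # stand-in for account or order numbers


def redact(text: str) -> str:
    """Replace obvious identifiers before text crosses the boundary."""
    return LONG_DIGITS.sub("[ID]", EMAIL.sub("[EMAIL]", text))


def estimate_tokens(text: str) -> int:
    """Crude token estimate; swap in the provider's tokenizer for real budgets."""
    return len(text.split())


def build_context(scored_chunks: list[tuple[float, str]], max_tokens: int) -> list[str]:
    """Pick the highest-ranked, non-duplicate chunks that fit the token budget."""
    seen: set[str] = set()
    picked: list[str] = []
    used = 0
    for _, text in sorted(scored_chunks, key=lambda c: c[0], reverse=True):
        key = " ".join(text.lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # near-identical chunk already considered
        seen.add(key)
        clean = redact(text)
        cost = estimate_tokens(clean)
        if used + cost > max_tokens:
            continue  # skip chunks that would exceed the budget
        picked.append(clean)
        used += cost
    return picked
```

Only the returned list would be sent to the external endpoint; the unredacted chunks never leave the retrieval side.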

4.5 Choosing among the options (a quick, practical read)

Think in this order: data gravity → capability → ops tolerance → economics. If sensitive data must remain in Snowflake and your first use case is internal (summaries, classification, LLM‑assisted SQL), start in‑platform and earn the right to add complexity later. If a specific frontier feature materially improves outcomes, adopt Hybrid by keeping retrieval in Snowflake and sending a minimal context to the external model. If you are building a user‑facing product that needs orchestration, finetunes, or agents—and you have the team to run it—treat a cloud ML platform or self‑hosted endpoint as your logic plane while Snowflake remains the data plane. In every case, your yardstick shouldn’t change: define success for the task, log a single trace, and watch cost per successful task so architecture follows evidence rather than fashion.

5) Pricing structure & cost modeling (Cortex vs. other LLMs)

Pricing only looks complicated if you stare at rate cards. For Snowflake customers, the cleaner view is a two‑plane bill: inside Snowflake you pay in credits; outside Snowflake you pay in tokens (and sometimes hosting). The decision you’re making isn’t “which price is lower,” it’s where each dollar actually buys a successful task—a grounded answer, a correct SQL suggestion, a classified document. If you evaluate everything through cost per successful task, the architecture trade‑offs become much easier to compare.

Cortex usage accrues like any other workload in your account: retrieval queries, vector search, index builds/refresh, and LLM functions meter credits under your edition and contract. Hybrid or external setups add the token side of the ledger (input and output tokens, plus any reserved capacity if you’re hosting models). Network egress exists, but in well‑designed Snowflake‑centric pipelines it should be a rounding error because you only send minimal, redacted context across the boundary. For a refresher on credit math and practical guardrails, see our guide on Snowflake Pricing, Explained: A Comprehensive 2025 Guide to Costs & Savings.

5.1 The lens that keeps you honest: cost per successful task

Start by defining success in the language of the use case—SQL correctness for generation, grounded‑answer rate for RAG, summary fidelity for summarization. Then compute:

Cost per successful task = (Snowflake credits × price/credit + external tokens × price/token + any hosting) ÷ # of successful tasks

This number does three useful things. First, it forces you to account for retrieval shape (since wide queries inflate credits and tokens). Second, it highlights where optimizations matter most (retrieval vs. inference vs. network). Third, it lets you compare Cortex, Hybrid, and External apples‑to‑apples without arguing about line items.
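The formula translates directly into a few lines of Python. The figures in the usage example are placeholders chosen for easy arithmetic, not real Snowflake or provider prices.

```python
def cost_per_successful_task(
    credits: float,
    price_per_credit: float,
    tokens: float,
    price_per_token: float,
    hosting: float,
    successful_tasks: int,
) -> float:
    """(credits x price/credit + tokens x price/token + hosting) / successes."""
    if successful_tasks <= 0:
        raise ValueError("successful_tasks must be positive")
    total = credits * price_per_credit + tokens * price_per_token + hosting
    return total / successful_tasks


# Illustrative inputs only: 10 credits, 2M external tokens, a flat hosting fee,
# and 800 tasks that passed the success definition for the use case.
example = cost_per_successful_task(
    credits=10,
    price_per_credit=3.0,
    tokens=2_000_000,
    price_per_token=0.000002,
    hosting=6.0,
    successful_tasks=800,
)
print(round(example, 4))
```

Dividing by successful tasks rather than total requests is the point: a cheaper option that fails more often can come out more expensive on this measure.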

5.2 What you pay for inside Snowflake (and why)

Inside Snowflake, spend usually flows through four doors:

- Retrieval and preparation. Warehouses execute the views that power prompts: filtering, joining, pre‑aggregating. This is classic query optimization territory—project fewer columns, trim rows aggressively, and pre‑aggregate so your context starts small.

- Embeddings and vector search. You’ll pay to generate embeddings, build indexes, and refresh them as content changes, plus the query cost when you perform semantic lookup. Index cadence (hourly vs. nightly) is the quiet cost lever here.

- LLM functions. When you call Cortex functions for generation, summarization, translation, extraction, or classification, consumption scales with how often you call them and how big the requests are (which circles back to retrieval shape).

- Cloud‑services activity. Some managed features (for example, search/knowledge indexes) perform background work to maintain freshness. Faster targets and higher churn mean more credits.

Two implications follow. First, the cheapest tokens are the ones you never send, so fix upstream SQL before you tinker with prompts. Second, treat LLM usage like any other Snowflake workload: tag queries by project, watch credit consumption against budgets, and attribute costs so teams see their own impact.

5.3 What you pay for outside Snowflake (and why)

In Hybrid or External patterns, you add the token side of the ledger and, sometimes, a hosting bill:

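- Tokens in and out. Providers charge for context and for completions. Long inputs and verbose outputs push this number up directly; sampling settings such as temperature do not change the per‑token price, but they can affect spend indirectly when they lengthen outputs or trigger retries.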

- Reserved or self‑hosted capacity. If you rent an inference endpoint or operate your own GPUs, you pay even when traffic is low. That can be efficient at steady, high volume, but wasteful for spiky loads without autoscaling.

- Finetuning and storage. Where supported, you’ll see charges for training runs and for storing adapters/checkpoints. Worth it only if they reduce tokens or errors enough to move cost per successful task.

- Observability overhead. Whether bundled or separate, collecting model monitoring signals (latency, failures, token counts, refusals) is part of the true cost because it prevents surprises.

A design note: the easiest way to keep this side predictable is to treat the external stack as a logic plane. Keep embeddings and retrieval in Snowflake; send only a small, redacted packet to the model; write outputs and feedback back to Snowflake for lineage and allocation.

5.4 Making the comparison fair

Compare options using these metrics over a representative time period:

- Requests/day per use case

- Average context tokens after truncation

- Average output tokens per answer

- Cache hit rate (for safe, repeatable answers)

- Concurrency at peak (drives retries and tail latency)

Hold retrieval constant while you swap generation options. If you change prompt templates or ranking rules, re‑run the whole set—even small changes to context size will invalidate yesterday’s numbers. This discipline is why you instrument correlation IDs end‑to‑end: it gives you a single trace that ties cost, latency, and quality to the exact retrieval and prompt that produced an answer. For a quick primer on picking operational signals that actually help in incidents, see Observability vs. Monitoring in Snowflake: Understanding the Difference.
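A small sketch of how those inputs combine into a token-side estimate, so that two generation options can be compared with retrieval held constant. It assumes cache hits consume no external tokens and that the provider prices input and output per 1,000 tokens; `Workload`, `daily_token_cost`, and the prices shown are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    requests_per_day: int
    avg_context_tokens: int   # measured after truncation
    avg_output_tokens: int
    cache_hit_rate: float     # fraction of requests served from cache, 0..1


def daily_token_cost(w: Workload, price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Estimated daily external token spend for one use case."""
    if not 0.0 <= w.cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    billable_requests = w.requests_per_day * (1.0 - w.cache_hit_rate)
    cost_in = billable_requests * w.avg_context_tokens / 1000 * price_in_per_1k
    cost_out = billable_requests * w.avg_output_tokens / 1000 * price_out_per_1k
    return cost_in + cost_out


# Same workload, two hypothetical price points for the generation model.
w = Workload(requests_per_day=10_000, avg_context_tokens=1_000,
             avg_output_tokens=200, cache_hit_rate=0.25)
option_a = daily_token_cost(w, price_in_per_1k=0.001, price_out_per_1k=0.002)
option_b = daily_token_cost(w, price_in_per_1k=0.003, price_out_per_1k=0.006)
```

Peak concurrency is not modeled here; it mainly shows up as retries and tail latency, which are better measured than estimated.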

5.5 Cost profiles by architecture (what tends to dominate)

Cortex‑first (all‑in Snowflake). Expect retrieval, indexing, and LLM function calls to be the main cost drivers. You keep governance and attribution simple, and you usually win on time‑to‑first‑value for internal analytics or LLM‑assisted SQL. Savings come from upstream query optimization (fewer columns/rows) and index cadence (batch, don’t re‑embed per write).

Hybrid RAG (retrieval in Snowflake, generation external). Spend splits between Snowflake retrieval and external tokens, with a thin network slice if you’re co‑located. You adopt this when a specific model feature materially improves outcomes. Cost levers are context size, caching, and escalation rules (small/fast model first; escalate only on low confidence).
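The escalation rule mentioned above (small/fast model first; escalate only on low confidence) might look like the sketch below. It assumes each model is wrapped in a callable that returns an answer plus a confidence score between 0 and 1. Many APIs do not return such a score, so in practice it would come from your own heuristic or evaluator; the threshold of 0.7 is arbitrary.

```python
from typing import Callable, Tuple

# A model wrapper takes a prompt and returns (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def answer_with_escalation(
    prompt: str,
    small: Model,
    large: Model,
    min_confidence: float = 0.7,
) -> Tuple[str, str]:
    """Try the small model first; call the large one only on low confidence."""
    answer, confidence = small(prompt)
    if confidence >= min_confidence:
        return answer, "small"
    answer, _ = large(prompt)
    return answer, "large"


# Stub models standing in for real endpoints.
def small_stub(prompt: str) -> Tuple[str, float]:
    return ("short answer", 0.9 if len(prompt) < 20 else 0.4)


def large_stub(prompt: str) -> Tuple[str, float]:
    return ("detailed answer", 0.95)


print(answer_with_escalation("What is RBAC?", small_stub, large_stub))
```

Logging which route served each request alongside the correlation ID shows how often escalation actually happens and what it costs.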

Externalized platform (app‑first). Here the inference and hosting costs dominate, and you may also pay for features like evaluation suites or agents. It’s the right fit when the application is the product and needs weekly model swaps or complex tool use. Keep a tight boundary so you don’t duplicate retrieval and vector stores outside Snowflake “just in case.”

5.6 Guardrails that prevent runaway bills

Guardrails are boring—and that’s why they work. Cap context windows by route so an accidental long input can’t explode spend. Cache only what’s safe to reuse and tie TTLs to Snowflake freshness signals. Co‑locate external endpoints with your Snowflake region and prefer private networking to reduce both latency and risk. Use query tags to attribute Snowflake credits by project; set resource monitors with alerts well before cutoffs so someone can intervene. On the model side, track tokens, retries, and timeouts; alert on deviations from baselines. Track p95 latency by stage, context sizes, and cache rates to identify cost drivers.
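Two of these guardrails, per-route context caps and TTL-based caching, are simple enough to sketch. The route names, cap values, and default are hypothetical, and the TTL is passed in as a plain number; deriving it from a Snowflake freshness signal is left out of the example.

```python
import time

# Hypothetical per-route caps on context size, in tokens.
CONTEXT_CAPS = {"summarize": 2000, "chat": 4000}
DEFAULT_CAP = 1000


def cap_context(route: str, tokens: list) -> list:
    """Truncate a token list to the cap configured for this route."""
    return tokens[: CONTEXT_CAPS.get(route, DEFAULT_CAP)]


class TTLCache:
    """Minimal in-memory cache whose entries expire after a per-entry TTL."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._items = {}

    def put(self, key, value, ttl_seconds: float) -> None:
        self._items[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired: drop it and report a miss
            return None
        return value
```

Volatile facts simply never get a `put`; only answers that are safe to reuse for the whole TTL belong in the cache.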

5.7 Pitfalls to avoid (and what to do instead)

- Unbounded context. More text rarely means better answers; it always means more tokens and slower p95 latency. Use chunk → rank → truncate and deduplicate near‑identical chunks.

- Per‑write re‑embedding. Refreshing indexes synchronously is a silent tax. Batch and schedule to match business freshness.

- Split‑brain retrieval. Duplicating a second vector store outside Snowflake doubles indexing cost and fragments debugging. Keep retrieval in Snowflake; send redacted context out only when needed.

- Hidden caches. Provider caches can make proofs‑of‑concept look cheap and production expensive. Make cache policy explicit and log hits alongside the correlation ID.

- No single trace. Without correlation IDs spanning Snowflake and the model call, you’re guessing where time and money went.

5.8 Putting it together

Focus on cost per successful task rather than rate comparisons. Inside Snowflake, that means shaping retrieval so prompts are small by design and scheduling index refresh on business‑appropriate cadences. Outside Snowflake, it means treating the model layer as a logic plane with strict context caps, explicit caching, and routing that prefers small/fast models until the evaluation set proves you need more. Keep the accounting simple—credits inside, tokens outside, one trace across—and the architecture choice will follow the evidence rather than the fashion of the week.

6) Architecture patterns: in‑platform, hybrid, and external

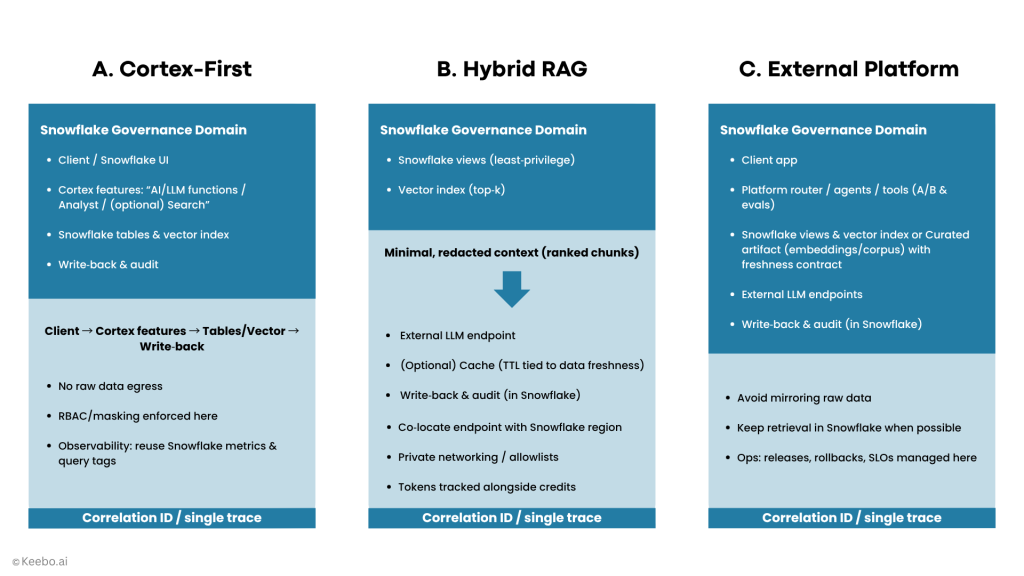

With Snowflake as your system of record, architecture choices are mostly about where the logic runs and how much crosses the governance boundary. In practice you’ll pick from three patterns: keep everything in‑platform with Cortex, run hybrid RAG with retrieval in Snowflake and generation outside, or build on an externalized platform when the application itself is the product.

6.1 Pattern A — Cortex‑first (all‑in Snowflake)

Cortex‑first is the most conservative path: retrieval, semantic search, and generation happen inside Snowflake’s RBAC, masking, and audit. Teams tend to start here because it minimizes moving parts and shortens the path from idea to value. The developer experience is familiar: analysts and engineers call LLM functions from SQL or Python, build embeddings and managed search over curated corpora, and land results back in tables with lineage intact.

Focus on data preparation. You shape narrow views that expose only the fields a prompt truly needs; you add masking/row policies so sensitive columns are safe by default; and you keep contexts small by design so Snowflake credit consumption remains predictable. If your use case needs grounding, you generate embeddings in‑platform and schedule index refresh on a sensible cadence (hourly or nightly rather than per write). Because everything stays in one estate, ownership and budgets are easy to attribute, and data observability uses the dashboards you already trust.

Cortex‑first is a great fit for internal analytics—summaries and classifications over governed tables, entity extraction into structured columns, knowledge bots over curated documents—and for LLM‑assisted SQL when the priority is developer/analyst throughput rather than novel application behavior. The moment it stops being enough is also clear: you need a specialized model or fine-tuning, advanced tool/agent behavior, native A/B testing capabilities, or a feature not yet available natively that measurably improves outcomes.

6.2 Pattern B — Hybrid RAG (retrieval in Snowflake, generation external)

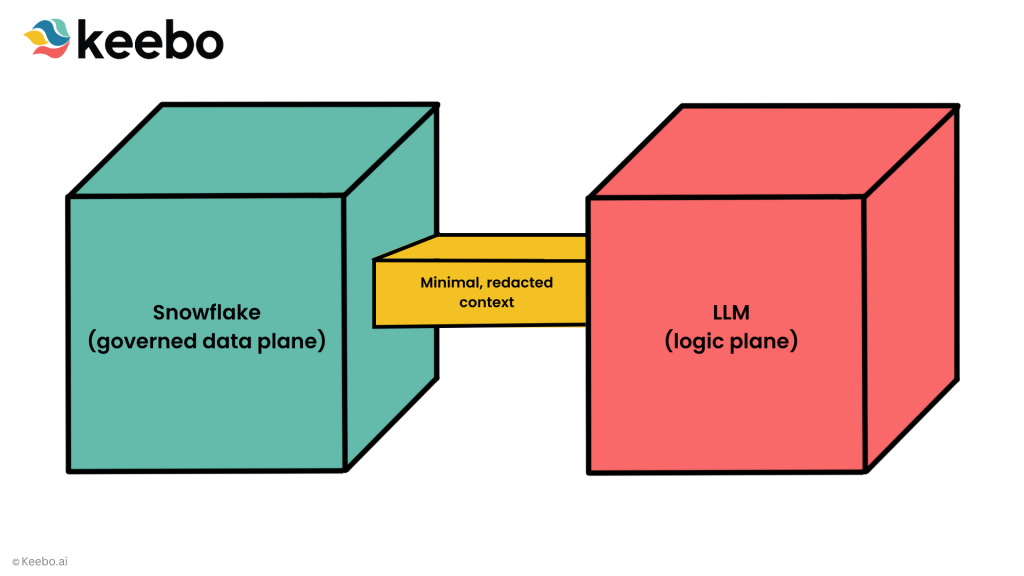

Hybrid splits the world into a governed data plane (Snowflake) and a logic plane (an external model). You keep embeddings, indexes, and retrieval close to source tables; you assemble a minimal, redacted context; and you send only that across the boundary for generation. The payoff is model flexibility without duplicating sources of truth.

The day‑to‑day discipline looks like this: retrieval queries produce ranked, deduplicated chunks that respect a strict token budget; a correlation ID is stamped on those queries and carried through prompt metadata so you can separate retrieval latency from inference latency; and safe sub‑answers (policy clauses, product facts) are cached with TTLs tied to Snowflake freshness signals. Security and privacy are explicit rather than assumed: service principals hold the keys, endpoints are co‑located with your Snowflake region, and the provider is configured not to retain or train on your data unless you’ve approved it.

Use hybrid approaches for frontier reasoning, multilingual capabilities, or immediate agent requirements. It’s also a comfortable middle ground for interactive, user‑facing experiences that need lower p95 latency or specialized tool use, as long as you keep contexts tight. If you find yourself running a growing web of prompts, tools, and A/B flows with weekly changes, you’re likely feeling the pull of a platform, at which point it’s time to evaluate the externalized pattern.

6.3 Pattern C — Externalized platform (app‑first, platform‑rich)

In this pattern, you treat the model layer as a product surface of its own. An external ML platform (or a self‑hosted stack) handles model selection, routing, finetunes, tool/agent orchestration, A/B testing, and evaluation gates. Snowflake remains the data backbone: retrieval can stay in Snowflake for governance and lineage, or, if a feature truly requires it, a small, curated artifact (embeddings or distilled corpora) is mirrored with a clear freshness contract.

You’ll know this pattern is warranted when the application itself demands rapid iteration—weekly model swaps, frequent prompt changes, complex tools, and a need to roll back safely. The operational burden is real: SRE/ML‑ops capacity, incident response, and a second set of access controls and audits. The way to keep that complexity in check is the same boundary discipline you’ve used all along: avoid mirroring raw data “just in case,” propagate a single correlation ID from client to retrieval to model to write‑back, and keep model monitoring side‑by‑side with Snowflake metrics so quality, latency, and cost read as one trace rather than two disjointed systems.

6.4 Choosing the simplest pattern that works

A practical selection sequence is to ask four questions in order. Where must the data live? If sensitive data can’t leave Snowflake, start Cortex‑first and treat Hybrid as an exception for narrow cases. What capability do you actually need? If a specific external model measurably improves outcomes on your evaluation set, introduce Hybrid with the smallest possible surface (one endpoint, one route) before you consider a broader platform. What interaction model do users expect? Batch or internal analytics tolerate modest latency; interactive, customer‑facing surfaces often justify Hybrid once caching and truncation can no longer hit the SLO. What ops capacity do you have? Lean teams stay Cortex‑first longer; mature ML/SRE teams can carry Hybrid or an external platform, but should still keep retrieval in Snowflake to avoid sprawl.

When you apply those questions consistently, architecture stops being a philosophical debate and becomes a short exercise in constraints. The simplest design that satisfies governance and quality today is the right one; add complexity only when your metrics force your hand.

6.5 A staged rollout that avoids re‑architecture

The incremental path works because it earns each step. Stage 1 proves value in place: one Cortex‑first use case with clear success criteria, narrow views, masked columns, templated prompts, and a tiny offline evaluation set. Stage 2 adds grounding: embeddings and managed search over curated corpora with an index refresh cadence that matches business freshness. Stage 3 introduces Hybrid selectively: route a small fraction of requests to an external model where your evaluation set shows a real gain; keep retrieval in Snowflake and carry correlation IDs across the boundary. Stage 4 adopts a platform only if the application demands orchestration and A/B discipline you can’t get otherwise. The key is that each stage reuses the same observability and budgeting you set up at the start, so success remains comparable as pieces change.

6.6 Operating the patterns day‑to‑day

Regardless of which pattern you run, keep the operational invariants steady so your teams can ship without surprises. Every request should carry a single correlation ID from retrieval to generation and back; every project should have query tags, resource monitors, and a cost envelope that triggers alerts before cutoffs; and every change to prompts, ranking rules, or model versions should run through the same evaluation set before promotion. If you want a concise refresher on choosing signals that actually help during incidents, see Observability vs. Monitoring in Snowflake: Understanding the Difference.

Taken together, these habits make the patterns interchangeable parts. Start in‑platform, move to Hybrid where evidence warrants, and adopt a platform only when the application truly demands it—while keeping Snowflake the place where governance, lineage, and cost attribution never go out of date.

7) Governance, privacy, and security

When Snowflake is your system of record, governance isn’t an add‑on—it’s the frame that determines every other choice. The safest posture is to keep Snowflake as the governed data plane and treat LLMs—Cortex or external—as the logic plane. That boundary gives you a simple rule: data may be read under existing policies; only minimal, redacted context crosses the line; and every hop is logged and traceable. Here’s how to implement these principles without creating a compliance nightmare:

7.1 Draw the boundary first

Start by writing down what lives on each side. On the data side, inside Snowflake, you already have RBAC, masking, row‑level policies, tags, lineage, and audit. That is the only place raw or sensitive fields should appear. On the logic side (Cortex interfaces, a managed API, or a platform), you run prompts, generation, routing, and (if hybrid) agent logic. Treat cross‑boundary calls as exceptional: explicit, least‑privilege, and accompanied by a correlation ID. With the boundary written down, any exception to it becomes visible and reviewable instead of implicit.

7.2 Put controls in the data, not in the prompt

Governance is sturdier when it’s enforced at the view that feeds your prompts, not as a last‑mile redaction script. Tag sensitive columns; attach masking and row‑access policies that apply automatically when a service role reads those views; and design the views to project only the fields you truly need. If a column shouldn’t appear in an answer, it shouldn’t be available to the retrieval query in the first place. Doing this in Snowflake means the same protections that keep analysts safe also protect prompts.

7.3 Minimize and sanitize before anything leaves Snowflake

Language models get more expensive and riskier the more you send them. Assemble context with chunk → rank → truncate, deduplicate near‑matches, and strip nonessential metadata. Replace obvious identifiers with placeholders (<customer_email>, <account_id>) so the model has the signals it needs without the secrets it doesn’t. If you later adopt Hybrid, this discipline is what keeps external prompts small, cheap, and non‑sensitive by default.
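As an illustration of the placeholder step, here is a minimal sketch in Python. The regular expressions are assumptions made for the example: the email pattern is deliberately simple, and the `ACC-` account format is hypothetical, so substitute the identifier formats your own data actually uses.

```python
import re

# Assumed patterns for illustration only; real identifier formats will differ.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
ACCOUNT_RE = re.compile(r"\bACC-\d{6,}\b")  # hypothetical account-ID format


def redact(text: str) -> str:
    """Replace obvious identifiers with stable placeholders."""
    text = EMAIL_RE.sub("<customer_email>", text)
    text = ACCOUNT_RE.sub("<account_id>", text)
    return text


snippet = "Contact jane.doe@example.com about account ACC-0012345."
print(redact(snippet))
```

Pattern-based redaction like this is a backstop, not a substitute for masking policies on the views that feed the prompt.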

7.4 Identity, secrets, and network posture

Production traffic should flow through service principals with the narrowest rights necessary. Keep keys in a secrets manager; never embed them in SQL, configs, or prompt templates. For any external endpoint, prefer private connectivity (e.g., VPC/PrivateLink‑style paths), co‑locate it in the same region as your Snowflake account, and operate on allowlists rather than broad egress. Finally, set providers to not retain or train on your data unless you have explicit contractual approval and a documented reason.

7.5 Observability and audit: one trace or it didn’t happen

Security without visibility is guesswork. Generate a correlation ID at the edge, include it in Snowflake query tags for retrieval, carry it inside prompt metadata, and log it with the model response and any write‑backs. Now you can separate retrieval latency from generation latency, tie quality signals to cost, and reconstruct incidents without hunting across systems. For a concise primer on which signals actually matter during incidents, see Observability vs. Monitoring in Snowflake: Understanding the Difference.
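One way to picture the single trace is sketched below in Python. It only builds strings and dictionaries; it does not connect to Snowflake. `QUERY_TAG` is a real Snowflake session parameter, but the JSON layout of the tag, the `llm_pilot` project name, and the `trace_record` helper are assumptions made for this example.

```python
import json
import re
import uuid


def new_correlation_id() -> str:
    """Generate an opaque ID at the edge of the request."""
    return uuid.uuid4().hex


def query_tag_statement(correlation_id: str, project: str) -> str:
    """Build an ALTER SESSION statement that tags subsequent retrieval queries."""
    # Restrict values to a safe character set so the tag needs no SQL escaping.
    for value in (correlation_id, project):
        if not re.fullmatch(r"[A-Za-z0-9_-]+", value):
            raise ValueError(f"unsafe tag value: {value!r}")
    tag = json.dumps({"correlation_id": correlation_id, "project": project}, sort_keys=True)
    return f"ALTER SESSION SET QUERY_TAG = '{tag}'"


def trace_record(correlation_id: str, stage: str, latency_ms: int) -> dict:
    """Hypothetical log row; every stage logs the same correlation ID."""
    return {"correlation_id": correlation_id, "stage": stage, "latency_ms": latency_ms}


cid = new_correlation_id()
print(query_tag_statement(cid, "llm_pilot"))

# Latencies here are made-up numbers to show how the stages separate.
trace = [trace_record(cid, "retrieval", 120), trace_record(cid, "generation", 840)]
by_stage = {row["stage"]: row["latency_ms"] for row in trace}
print(by_stage)
```

Because both rows share one ID, retrieval and generation latency can be joined later without guessing which request they belonged to.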

7.6 Model governance that scales with change

Treat prompts and models like code. Version prompt templates, retrieval SQL, and model parameters; review them like pull requests; and require a small offline evaluation set to pass before promotion. Record which template and model version produced each answer (via the correlation ID), along with context size and any safety filter hits. These habits keep velocity high without sacrificing traceability as models and prompts evolve.

7.7 Incident response, without drama

When something goes wrong—unexpected content, a privacy concern, a runaway bill—the same boundary saves you. Rotate or revoke the service identity involved, disable affected routes, and purge caches that may contain sensitive text. Use the correlation ID to walk the path from retrieval to prompt to model to output; that will tell you whether to tighten a view, a policy, a prompt, or an endpoint. Close the loop by adding a test to your evaluation set that would have caught the issue and by adjusting guardrails (context caps, redaction rules, or network allowlists) so the class of incident can’t recur quietly.

Bottom line: Keep sensitive data where Snowflake already protects it. Send out only what’s necessary, scrubbed and small. Make every request traceable end‑to‑end so cost, latency, and quality read as one story. With that discipline, you can adopt Cortex, go Hybrid for specific capabilities, or even run an external platform—without rewriting your governance playbook each time.

8) Benchmarking LLM options: A practical evaluation framework

Benchmarking only helps if it looks like production. For Snowflake customers that means governed retrieval inside Snowflake, templated prompts with explicit parameters, and one trace that follows every request from query to answer. The goal isn’t to crown a universal “best” model; it’s to decide what works for your data, under your controls, at a cost you can live with. We’ll cover the key metrics to track, how to build trustworthy evaluations, and ways to present results that drive decisions rather than debates.

8.1 What to measure (and why it maps to production)

Start with task quality, defined the way you’ll judge success in the real system: SQL correctness for query generation, grounded‑answer rate for RAG, and summary fidelity for summarization. Add latency—both end‑to‑end and by stage (retrieval vs. generation)—because p95 is what users feel and what drives retries. Fold in cost per successful task so you’re comparing dollars for outcomes, not dollars for tokens or credits. Finally, track reliability signals (timeouts, refusal rates, cache hits) and basic safety checks (no PII leaks in prompts/outputs). If a metric won’t influence a go/no‑go decision, don’t measure it.

8.2 Build an evaluation set that mirrors reality

An evaluation is only as good as its inputs. Sample real prompts and questions from your teams (with PII safely masked), and pair each with the retrieval view that will power the production prompt. Balance easy, typical, and edge cases; otherwise you’ll overfit to the happy path and be surprised in week two. Give each item a clear expected outcome: ground‑truth SQL and result shape for query generation, or a rubric and acceptable variants for RAG/summarization. You can keep the whole set in Snowflake tables so it’s easy to version and re‑run when prompts or models change.

8.3 Control the variables when you run

Most “benchmark drama” comes from hidden differences. Fix the retrieval path first: use the exact views, filters, and ranking logic you’ll ship. Template prompts and pass model parameters (temperature, top‑p, max tokens) explicitly. Cap context length and prefer chunk → rank → truncate over “stuff everything.” Decide how you’ll treat caching before you start—either warm caches deliberately and log hit rate, or disable caching for apples‑to‑apples runs. Then change one dimension at a time: if you’re comparing Cortex to an external model, keep retrieval constant and swap only the generation step; if you’re testing prompt changes, keep the model constant.

8.4 The metrics to publish (and how to compute them)

Once the run completes, compute the small set of numbers stakeholders actually need.

- Quality:

  - Grounded-answer rate: (Number of answers where all claims can be traced to a retrieved context snippet) / (Total number of answers). For scalability, consider using an LLM-as-a-judge (e.g., GPT-4, Claude 3) to automate this evaluation.

  - Hallucination rate: The complement of the grounded-answer rate; the proportion of answers containing unsupported claims.

  - SQL correctness: (Number of generated SQL queries that execute without error AND return a result set matching the gold standard’s schema and values) / (Total number of queries).

- Latency: p50/p95 end‑to‑end and by stage; note the concurrency during the run so results are interpretable.

- Cost per successful task:

  - Inside Snowflake: (credits consumed × price/credit) ÷ successful tasks.

  - Outside Snowflake (if hybrid/external): (tokens × price/token + any hosting) ÷ successful tasks.

  - Total: the sum of the above.

- Stability: Variance across N re‑runs for the same prompts; high variance usually signals temperature/decoding or retrieval instability.
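The quality and latency numbers above can be computed from a list of run records, as in the Python sketch below. The record fields (`grounded`, `latency_ms`) and the sample values are invented for the example, and the nearest-rank percentile is one common convention among several; pick whichever your monitoring tools already use.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Made-up run records; in practice these come from the logged trace.
runs = [
    {"grounded": True, "latency_ms": 820},
    {"grounded": True, "latency_ms": 910},
    {"grounded": False, "latency_ms": 1450},
    {"grounded": True, "latency_ms": 760},
    {"grounded": True, "latency_ms": 2300},
]

grounded_rate = sum(r["grounded"] for r in runs) / len(runs)
hallucination_rate = 1 - grounded_rate  # complement of the grounded-answer rate

latencies = [r["latency_ms"] for r in runs]
p50 = percentile(latencies, 50)
p95 = percentile(latencies, 95)

print(round(grounded_rate, 3), round(hallucination_rate, 3), p50, p95)
```

With only five samples, p95 is simply the slowest run; a real evaluation set needs enough items for tail percentiles to mean something.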

8.5 Wire it into Snowflake so it scales

Keep the harness where your teams already work. Store the evaluation set in tables with columns for prompt ID, retrieval view/version, prompt template/version, model/version, and expected output/rubric. When you execute a run, stamp a correlation ID on the retrieval queries (via query tags), carry it in prompt metadata, and log it with the model response and any write‑backs. That one ID lets you separate retrieval and generation latency, attribute cost accurately, and reconstruct incidents without hunting. For a concise refresher on choosing signals that help during incidents, see Observability vs. Monitoring in Snowflake.

8.6 Report like a decision‑maker, not a lab report

A good benchmark readout fits on one page. Show distributions, not just single numbers—p50 and p95 for latency; interquartile ranges for quality scores. Compare results by task type; a model that wins at summarization may lose at SQL generation. Annotate the setup: the exact prompt template, model parameters, retrieval views, and cache policy used. Then say the quiet part out loud: if Model X is cheaper but fails more often on edge cases, recommend a routing policy (small/fast first; escalate on low confidence) rather than pretending there’s a single winner.
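The "small/fast first; escalate on low confidence" policy can be sketched in a few lines of Python. Everything here is hypothetical: the two stub models, the idea that a model returns a confidence score alongside its answer, and the 0.7 threshold. Real providers may not expose a confidence value, in which case a judge model or heuristic would have to supply one.

```python
from typing import Callable, Tuple

# A model is any callable that returns (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def route(prompt: str, small: Model, large: Model, threshold: float = 0.7):
    """Try the small model first; escalate when its confidence is below threshold."""
    answer, confidence = small(prompt)
    if confidence >= threshold:
        return answer, "small"
    answer, _ = large(prompt)
    return answer, "large"


def small_model(prompt: str):
    # Stub: pretends to be unsure about longer prompts.
    confidence = 0.9 if len(prompt.split()) <= 5 else 0.4
    return f"small:{prompt}", confidence


def large_model(prompt: str):
    # Stub: always answers confidently.
    return f"large:{prompt}", 0.95


print(route("total sales last week", small_model, large_model))
print(route("compare churn drivers across regions and explain anomalies", small_model, large_model))
```

Logging which route served each request lets the readout report cost and quality per route rather than per model in isolation.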

8.7 Pitfalls that make benchmarks useless

Benchmarks fail when they don’t look like production. Ad‑hoc retrieval, unlabeled prompts, or changing multiple variables at once will produce numbers that are easy to argue about and impossible to trust. Unbounded context will flatter large models and punish latency. Hidden provider caches will make pilots look cheap and production expensive. The antidote is the same discipline you’ll use in the real system: governed retrieval, context caps, explicit cache policy, and a single trace that ties quality and cost together.

8.8 The takeaway you can act on this quarter

If you run your evaluation where your data and controls live, the decision writes itself. Governed views shape small, relevant contexts; templated prompts keep runs comparable; a correlation ID gives you one story for latency, quality, and spend; and cost per successful task turns rate cards into actionable trade‑offs. With that harness in place, you can compare Cortex, Hybrid, and external options without guesswork—and repeat the exercise every quarter as models, features, and prices evolve.

9) Comparing approaches: Pros, cons, and best-fit scenarios

By this point the shape of the decision should feel familiar: keep Snowflake as the governed data plane, and decide where the LLM logic runs. Here’s an opinionated comparison that brings together everything we’ve covered. Rather than repeating laundry lists, each subsection explains how the approach behaves in day‑to‑day work, what you gain and give up, and when it’s the right choice for teams already invested in Snowflake.

9.1 How to read the trade‑offs

All three approaches—Cortex, Hybrid, and Externalized platform—can succeed. The differences show up along four axes:

- Data gravity & governance. How much sensitive data must remain inside Snowflake’s RBAC/masking/audit?

- Capability needs. Do you require specialized models, agent/tool workflows, or rapid model swaps?

- Performance envelope. What p95 latency and throughput do users expect, and how bursty is traffic?

- Operational tolerance. How much SRE/ML‑ops complexity can your team own without slowing delivery?

If you evaluate each option against those four constraints—and keep cost per successful task as your unit metric—you’ll avoid most second‑guessing later.

9.2 Cortex (all‑in‑platform)

What it feels like in practice.

Cortex keeps retrieval, semantic search, and generation within Snowflake’s security boundary. Analysts invoke language functions from SQL or Python, embeddings and managed search sit next to your tables, and results land back in Snowflake with lineage intact. You reuse the same data observability and budget practices you already rely on, so operationally it feels like adding a new capability to an existing platform, not introducing a new platform.

Strengths you can bank on.

Governance continuity is the headline benefit: masking, row‑level policies, and audit apply where prompts are assembled. Because prompts are built from narrow views you control, it’s easier to keep context small, protect PII, and attribute spend with query tags, which is key for Snowflake pricing discipline. For internal analytics and LLM‑assisted SQL, this proximity shortens feedback loops and reduces integration risk.

Where you’ll hit limits.

Cortex exposes fewer knobs than a full ML platform. If your roadmap requires bleeding‑edge models, complex tool/agent flows, or features that aren’t yet native, you may stall. The fix is architectural, not political: introduce Hybrid for the specific use cases that need more capability, while keeping embeddings and retrieval in Snowflake so governance and lineage remain intact.

Best fit.

Internal analytics (summarization, classification, extraction), knowledge over curated corpora, and developer/analyst productivity with queries shaped by existing query optimization habits. Choose this when data residency is non‑negotiable and your first wins should be quick, safe, and attributable.

9.3 Hybrid RAG (retrieval in Snowflake, generation external)

What it feels like in practice.

Hybrid splits responsibilities cleanly: Snowflake remains the governed data plane—views, embeddings, indexes, ranking—while an external endpoint handles generation. The only thing crossing the boundary is a minimal, redacted packet of context. Day‑to‑day, you’ll mind both sides: Snowflake for retrieval cost/freshness, the model provider for tokens and latency. A single correlation ID ties them together for model monitoring and incident response.

Strengths you can bank on.

You get model flexibility—frontier models, long‑context variants, domain‑tuned options—without duplicating sources of truth. Because retrieval is still SQL, upstream query optimization and deduplication directly reduce tokens, tail latency, and risk. It’s also a reversible choice: you can route only the slices that demonstrably benefit from external generation and leave everything else in Cortex.

Where you’ll hit limits.

You now operate two estates. Secrets, private networking, rate‑limits, and retries need first‑class attention, and p95 variance can creep in with network hops. The fix is discipline: co‑locate endpoints with your Snowflake region, cap context length per route, cache stable sub‑answers with TTLs tied to Snowflake freshness, and propagate correlation IDs so you can see where time and money go.

Best fit.

Interactive or user‑facing surfaces that improve measurably with a specific model capability; multilingual or domain‑specialized tasks; or transitional phases where Cortex provides most value but select routes need external lift. Choose this when capability or latency gains are real and you can keep the external surface area small.

9.4 Externalized platform (app‑first, platform‑rich)

What it feels like in practice.

Here the model layer is a product surface of its own. You use a cloud ML platform (or a self‑hosted stack) for model selection, routing, finetunes, tool/agent orchestration, A/B testing, and evaluation gates. Snowflake stays the data backbone; retrieval may remain in Snowflake or, where truly necessary, a curated artifact (embeddings, distilled corpora) is mirrored with a clear freshness contract.

Strengths you can bank on.

Maximum control: rapid model swaps, agent frameworks, evaluation at the center of release hygiene, and the ability to shape complex tool workflows. If your application is the product and ships weekly, these knobs matter. Done well, you still keep Snowflake security and lineage as your foundation by anchoring retrieval in Snowflake.

Where you’ll hit limits.

Complexity and cost migrate from rate cards to people and process. You now need SRE/ML‑ops depth, a second set of access controls and audits, and playbooks for incident response and rollback. The common failure modes are data sprawl (mirroring raw tables “temporarily”) and fragmented observability. The countermeasure is the same boundary discipline: minimal data leaves Snowflake, everything is tagged, and one trace spans the full request.

Best fit.

Customer‑facing applications that demand orchestration, frequent experimentation, and tight SLOs—where the product experience depends on capabilities a platform makes easier to manage than ad‑hoc scripts or SQL‑only flows.

9.5 Choose deliberately (and keep the bar steady)

A simple, reliable sequence works for most Snowflake customers:

- Start where your data and controls live—Cortex for internal, governed use cases.

- Introduce Hybrid only when evidence demands it—a specific task improves materially on an external model and the cost per successful task stays within your envelope.

- Adopt an external platform when the app is the product—and only after you’ve proven you can keep retrieval and lineage anchored in Snowflake.

Across all three, hold the invariants steady: governed retrieval in Snowflake, minimal and logged cross‑boundary context, a single correlation ID for data observability, and disciplined context management. If those habits are in place, your team can change models, prompts, and even architectures without changing the way you measure success—or the way you sleep at night.

10) Implementation playbooks and decision framework

Two playbooks cover almost every Snowflake‑centric LLM initiative: a Cortex‑first path for internal, governed use cases and a Hybrid RAG path when a specific external model materially improves outcomes. Both assume that Snowflake remains your data plane—the place where masking, row‑level policies, lineage, and budgets already work—and that LLMs (whether Cortex or external) are the logic plane. What changes between the playbooks is where generation happens and how you control the cross‑boundary hop.

10.1 Playbook A — Cortex‑first (a fast, governed path to value)

Start by framing the smallest slice that proves value. Pick one outcome you can score unambiguously—“SQL is correct,” “answer is grounded in evidence,” or “document is classified to the right bucket.” Write that definition down. You’ll use it to measure cost per successful task and to decide whether to expand.

Next, shape the data where it lives. Build narrow views that expose only the columns a prompt truly needs, and attach masking and row‑level policies so sensitive fields can’t leak by accident. If the use case requires grounding, generate embeddings in‑platform and create indexes over a curated corpus; schedule refresh at a cadence tied to business freshness rather than per write. This step keeps query optimization and Snowflake security in the foreground and prevents token bloat before it starts.

With the data surface nailed down, implement the language step with Cortex functions. Treat prompt templates like code: version them, parameterize them, and validate inputs before invocation (length, type, forbidden patterns). Keep contexts deliberately small: chunk → rank → truncate and deduplicate near‑matches rather than stuffing entire documents. Land outputs—and any human feedback—back in Snowflake tables so lineage remains intact.

Operationalize from day one. Stamp a correlation ID on the retrieval query (via query tags) and propagate it through the generation step and write‑back so you can separate retrieval latency from generation latency and attribute spend cleanly. Track p50/p95, cache hits, retries, and the evaluation result for each attempt. Set a modest credit budget for the pilot and alert before you hit thresholds; treat this like any other Snowflake workload rather than a special project.

Roll out in increments. Let a small group of users exercise the flow for a week, then read the trace rather than anecdotes: is grounded‑answer rate or SQL correctness stable, is p95 within tolerance, and is cost per successful task inside your envelope? If the numbers hold, widen the audience; if not, iterate on views, ranking, and truncation before you touch prompts or model settings. The point of Cortex‑first is to get reliable wins without adding a second platform to govern.

10.2 Playbook B — Hybrid RAG (retrieval in Snowflake, generation external)

Choose Hybrid for a narrow, evidence‑based reason: a particular external model provides capabilities you need now (frontier reasoning, multilingual strength, tool use) and measurably improves results on your evaluation set. Keep the external surface as small as possible—one endpoint for one route—until you have proof it should grow.

The data plane stays the same: embeddings, indexes, and retrieval run in Snowflake, and views still project only the necessary fields with masking and row policies enforced. Assemble a minimal, redacted context (ranked and capped to a strict token budget) and send only that across the boundary. Co‑locate the endpoint in the same region as your Snowflake account and prefer private connectivity; configure the provider not to retain or train on your data unless you have explicit approval.

Tracing is non‑negotiable. Generate a correlation ID in Snowflake, carry it in prompt metadata, and log it with the model response and any post‑processing so data observability reads as one story. Cache only what is safe to reuse (for example, policy boilerplate or immutable definitions) and tie TTLs to freshness signals in Snowflake. Handle failure like you handle any distributed system: implement exponential backoff, make writes idempotent, and watch p95; it’s usually tail latency—more than average cost—that triggers retries and surprises.
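The failure-handling advice can be sketched as follows in Python. This is a generic pattern, not a provider API: `call_with_backoff`, `write_back`, the retry limits, and the in-memory `store` dictionary (standing in for a Snowflake table keyed by correlation ID) are all assumptions for the example.

```python
import random
import time


def call_with_backoff(fn, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Retry fn on TimeoutError with exponential backoff plus jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except TimeoutError:
            if attempt == max_attempts - 1:
                raise  # out of attempts; surface the failure
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)


def write_back(store: dict, correlation_id: str, answer: str) -> bool:
    """Idempotent write keyed by correlation ID; a repeated write is a no-op."""
    if correlation_id in store:
        return False
    store[correlation_id] = answer
    return True


# Simulated endpoint that times out twice before succeeding.
attempts = {"count": 0}


def flaky_call():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TimeoutError("simulated timeout")
    return "generated answer"


waits = []  # record delays instead of actually sleeping in this demo
answer = call_with_backoff(flaky_call, sleep=waits.append)
store = {}
print(write_back(store, "req-1", answer), write_back(store, "req-1", answer))
```

Keying the write on the correlation ID means a retried request that eventually succeeds twice still lands exactly one row.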

Prove the lift before you scale. Run your evaluation set through the hybrid route and compare to Cortex‑first with the same retrieval, templates, and context caps. Expand only if quality rises materially and cost per successful task stays in range. If results are mixed, tighten retrieval and truncation first; sending less usually helps more than switching to a bigger model.

10.3 A decision checklist you can run in one meeting

Use this checklist to prevent over‑engineering and force your architecture to follow evidence:

- Data gravity. Must the relevant data remain inside Snowflake’s RBAC/masking boundary? If yes, start Cortex‑first and treat cross‑boundary calls as exceptions.

- Capability gap. Does a specific external model move the needle on your evaluation set? If yes, try Hybrid for that one route; if not, optimize retrieval and prompts first.

- Interaction model. Are users interacting live (strict p95) or consuming batch/internal outputs? Live experiences justify Hybrid sooner; batch/internal usually don’t.

- Ops tolerance. Do you have the SRE/ML‑ops capacity to run and observe another estate? If not, stay in‑platform until you do—or until evidence makes the trade‑off undeniable.

- Unit economics. Can you compute cost per successful task with a single trace? If you can’t measure it, you can’t control it—pause until that harness exists.

- Exit and rollback. What would make you revert a change? Define rollback triggers (quality drops, p95 spikes, budget breaches) before you flip traffic.

Run this list before you commit engineering time; it keeps governance, performance, and cost aligned with what you can actually support.

10.4 When to escalate—and when to revert

Escalate from Cortex‑first to Hybrid when your labeled evaluation set shows a sustained, material improvement that users will notice and budgets can absorb. Escalate from Hybrid to an externalized platform only when the application itself needs orchestration, frequent model swaps, or agents you can’t responsibly operate ad‑hoc. Just as important, give yourself permission to revert: if p95 blows past SLOs, cache hit rate collapses, or cost per successful task creeps up over two consecutive reviews, route traffic back to the simpler path while you fix retrieval, truncation, or caching. Simplicity is not a step backward; it’s how you preserve momentum while you keep risk and spend where you want them.
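The revert conditions can be written down as a small check that runs at each review, as in the Python sketch below. The field names, the thresholds, and the reading of "creeps up over two consecutive reviews" as two successive increases (which needs three data points) are assumptions for the example; set them to match your own SLOs and review cadence.

```python
def should_revert(reviews, p95_slo_ms, min_cache_hit_rate):
    """Return True when the latest reviews breach any rollback trigger.

    reviews: list of dicts, oldest first, each with the keys
    'p95_ms', 'cache_hit_rate', and 'cost_per_success'.
    """
    latest = reviews[-1]
    if latest["p95_ms"] > p95_slo_ms:
        return True  # p95 blew past the SLO
    if latest["cache_hit_rate"] < min_cache_hit_rate:
        return True  # cache hit rate collapsed
    if len(reviews) >= 3:
        first, second, third = (r["cost_per_success"] for r in reviews[-3:])
        if first < second < third:
            return True  # cost rose in two consecutive reviews
    return False


# Illustrative numbers only.
history = [
    {"p95_ms": 1800, "cache_hit_rate": 0.42, "cost_per_success": 0.11},
    {"p95_ms": 1750, "cache_hit_rate": 0.40, "cost_per_success": 0.13},
    {"p95_ms": 1900, "cache_hit_rate": 0.41, "cost_per_success": 0.16},
]
print(should_revert(history, p95_slo_ms=2500, min_cache_hit_rate=0.2))
```

Agreeing on these triggers before traffic moves keeps the revert decision mechanical rather than a negotiation.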

11) FinOps for LLM workloads on Snowflake (govern costs without slowing down)

FinOps for LLMs isn’t a month‑end spreadsheet; it’s a design discipline. With Snowflake as your system of record, the cleanest way to keep spend predictable is to think in two planes—credits inside Snowflake and tokens/hosting outside—and to evaluate everything through one steady yardstick: cost per successful task. When you hold that metric constant and keep retrieval close to governed data, architecture choices and optimizations become straightforward.

11.1 The unit metric and the two‑plane bill

Start by defining success in the language of the use case—SQL that executes with the expected schema and values, an answer that’s grounded in retrieved evidence, or a document that’s placed in the correct class. Then compute:

Cost per successful task = (Snowflake credits × price/credit + external tokens × price/token + any hosting) ÷ # of successful tasks

This framing prevents rate‑card whiplash. Whether you call Cortex functions or an external endpoint, the number rewards smaller contexts, fewer retries, and better routing—because those changes increase successes while reducing both credits and tokens. If you need a refresher on how credit consumption works and where budgets/alerts fit, see our guide on Snowflake Pricing, Explained: A Comprehensive 2025 Guide to Costs & Savings.
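As a worked example of the formula, the Python sketch below plugs in round numbers. All prices and volumes are illustrative placeholders, not real rate-card values.

```python
def cost_per_successful_task(credits, price_per_credit, tokens, price_per_token,
                             hosting, successful_tasks):
    """(credits x price/credit + tokens x price/token + hosting) / successful tasks."""
    if successful_tasks <= 0:
        raise ValueError("need at least one successful task to compute unit cost")
    total = credits * price_per_credit + tokens * price_per_token + hosting
    return total / successful_tasks


# Illustrative inputs: 12 credits at 3.00 each, 2M external tokens,
# 10.00 of hosting, and 400 tasks that passed the evaluation rubric.
unit_cost = cost_per_successful_task(
    credits=12,
    price_per_credit=3.00,
    tokens=2_000_000,
    price_per_token=0.000002,
    hosting=10.00,
    successful_tasks=400,
)
print(round(unit_cost, 4))
```

Here the total spend is 36 + 4 + 10 = 50, so the unit cost is 50 ÷ 400 = 0.125 per successful task. Note that failed tasks still add to the numerator, which is why fewer retries and better routing lower the result.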

11.2 Shape retrieval where the data lives

Most runaway LLM bills begin as ordinary SQL: a wide SELECT, an under‑filtered JOIN, or an intermediate table that’s larger than the prompt ever needs. Fixing this at the source is cheaper than any downstream trick. Build narrow views that project only the fields a prompt will include. Push filters and pre‑aggregations down so contexts start small. If you ground answers in enterprise text, generate embeddings in‑platform and schedule index refresh on a cadence tied to business freshness (hourly or nightly), not on every write. These are familiar query optimization habits; in LLM pipelines they also reduce tokens and p95 latency, which directly improves cost per success.

11.3 Manage the cross‑boundary hop (if you go hybrid)

Hybrid adds a second currency to the ledger, so be deliberate about what crosses the boundary. Assemble context with chunk → rank → truncate, redact obvious identifiers, and co‑locate the endpoint in the same region as your Snowflake account. Cache only what’s safe to reuse (policy boilerplate, immutable definitions), and tie TTLs to freshness signals in Snowflake. Handle failure like any distributed system: back off exponentially on timeouts, make write‑backs idempotent, and watch tail latency—the p95 that triggers retries is usually the real budget killer. Treat the external stack as a logic plane; keep embeddings and retrieval in Snowflake so governance, lineage, and spend attribution remain simple.
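One way to tie cache lifetimes to freshness is sketched below in Python. The `FreshnessCache` class is hypothetical, and `source_refreshed_at` stands in for whatever freshness signal you read from Snowflake (for example, the timestamp of the last index refresh). An entry is served only if it is within its TTL and was stored after the source last changed.

```python
import time


class FreshnessCache:
    """In-memory cache whose entries expire by TTL or when the source is newer."""

    def __init__(self, ttl_seconds, clock=time.time):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (value, stored_at)

    def put(self, key, value):
        self._entries[key] = (value, self._clock())

    def get(self, key, source_refreshed_at):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        expired = self._clock() - stored_at > self._ttl
        stale = source_refreshed_at > stored_at
        if expired or stale:
            del self._entries[key]  # drop so the next call recomputes
            return None
        return value


# A fake clock keeps the demo deterministic.
now = [1000.0]
cache = FreshnessCache(ttl_seconds=3600, clock=lambda: now[0])
cache.put("policy:returns", "Returns accepted within 30 days.")

print(cache.get("policy:returns", source_refreshed_at=900.0))   # source older: hit
print(cache.get("policy:returns", source_refreshed_at=1500.0))  # source newer: miss
```

Logging hits and misses alongside the correlation ID makes the cache hit rate part of the same trace as latency and cost.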

11.4 Make cost observable and attributable on day one

Cost control works only when you can see where time and money went. Stamp a correlation ID on the retrieval query (via query tags), carry it through prompt metadata, and log it with the model response and any write‑backs. Now one trace tells a coherent story: p50/p95 for retrieval versus generation, cache hits, token counts (if hybrid), and the pass/fail outcome against your evaluation rubric. Attribute Snowflake credits by project using query tags and resource monitors with alerts before cutoffs; on the model side, track tokens, retries, and timeouts with the same project labels. For a concise primer on signals that actually help during incidents, see Observability vs. Monitoring in Snowflake: Understanding the Difference.

11.5 Forecasting and change management that people trust

Keep forecasting lightweight but live. A small sheet with five inputs—requests per day, average context tokens, average output tokens, cache hit rate, and peak concurrency—multiplied by your credit and token rates yields a monthly envelope that you can sanity‑check at a glance. When any input changes materially (a new team, a longer context cap, a lower cache hit rate), re‑run the envelope. Gate promotions with an offline evaluation set and block releases that reduce grounded‑answer rate/SQL correctness or increase cost per successful task beyond thresholds. Publishing a monthly “bill of work” by use case (not by team) keeps stakeholders focused on the levers that matter: retrieval shape, caching, routing, and—only when the data proves it—model choice.
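A possible shape for that small sheet is sketched below in Python. The arithmetic is an assumption, not a prescribed formula: it treats cache hits as free on both planes, prices input and output tokens separately, and uses a hypothetical `credits_per_request` figure for the Snowflake side. Peak concurrency is left out of the multiplication because it affects sizing and tail latency more than the monthly total; check it separately against your warehouse and endpoint limits.

```python
def monthly_envelope(requests_per_day, avg_context_tokens, avg_output_tokens,
                     cache_hit_rate, price_per_input_token, price_per_output_token,
                     credits_per_request, price_per_credit, days=30):
    """Rough monthly spend estimate; assumes cached requests cost nothing."""
    billable_per_day = requests_per_day * (1 - cache_hit_rate)
    token_cost_per_request = (avg_context_tokens * price_per_input_token
                              + avg_output_tokens * price_per_output_token)
    tokens_monthly = billable_per_day * token_cost_per_request * days
    credits_monthly = billable_per_day * credits_per_request * price_per_credit * days
    return {
        "tokens": tokens_monthly,
        "credits": credits_monthly,
        "total": tokens_monthly + credits_monthly,
    }


# Illustrative inputs only; none of these are real prices.
envelope = monthly_envelope(
    requests_per_day=5000,
    avg_context_tokens=1500,
    avg_output_tokens=300,
    cache_hit_rate=0.3,
    price_per_input_token=0.000003,
    price_per_output_token=0.000015,
    credits_per_request=0.0002,
    price_per_credit=3.0,
)
print({name: round(value, 2) for name, value in envelope.items()})
```

With these inputs, 3,500 billable requests per day cost about 0.009 each in tokens, or roughly 945 per month, plus about 63 in credits. Re-running the function with a lower cache hit rate or a longer context cap shows immediately how far the envelope moves.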

11.6 The common failure modes—and the durable habit

The same pitfalls show up again and again: unbounded context windows that quietly inflate tokens; per‑write re‑embedding or index refresh in hot paths; hidden provider caches that make pilots look cheap and production expensive; duplicated vector stores that fragment retrieval and double indexing costs; and missing correlation IDs that turn debugging into guesswork. The antidote is a single, durable habit: shape upstream, cap what can grow, and trace every request end‑to‑end. Do that, and you can scale usage without losing control of the bill—or your ability to explain it.

Bottom line: FinOps for LLMs on Snowflake is won where prompts begin, not where they end. Keep retrieval governed and lean, make cross‑boundary calls minimal and logged, and measure outcomes with cost per successful task. The result is speed with guardrails—not a slower process, but a safer one.

12) Common pitfalls and how to avoid them

Most LLM projects don’t fail because a model can’t reason—they fail because everyday engineering habits don’t carry over to language‑driven workloads. When Snowflake is your system of record, the biggest risks cluster around retrieval shape, context discipline, and fragmented observability. The antidotes are boring by design: narrow the data surface before you prompt, cap what can grow, and keep one trace from query to answer.

12.1 Unbounded context windows

Packing every “possibly relevant” paragraph into a prompt feels safe but quietly inflates tokens, slows p95, and often hurts answer quality. Treat context as a scarce resource: chunk → rank → deduplicate → truncate. If quality drops after truncation, fix retrieval and ranking before you consider a larger model.
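
The chunk → rank → deduplicate → truncate pipeline can be sketched as four small functions. This is a toy version under stated assumptions: word counts stand in for tokens, and the ranker simply counts query-term overlap, where a real system would use embeddings or a search score. All function names are illustrative.

```python
def chunk(text, max_words=50):
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def rank(chunks, query):
    """Order chunks by a toy relevance score: occurrences of query terms."""
    terms = set(query.lower().split())

    def score(c):
        return sum(1 for w in c.lower().split() if w in terms)

    return sorted(chunks, key=score, reverse=True)


def deduplicate(chunks):
    """Drop chunks that repeat after case and whitespace normalization."""
    seen, out = set(), []
    for c in chunks:
        key = " ".join(c.lower().split())
        if key not in seen:
            seen.add(key)
            out.append(c)
    return out


def truncate(chunks, token_budget):
    """Keep whole chunks, in rank order, until the budget is spent."""
    kept, used = [], 0
    for c in chunks:
        cost = len(c.split())  # words as a stand-in for tokens
        if used + cost > token_budget:
            break
        kept.append(c)
        used += cost
    return kept


def build_context(docs, query, token_budget, max_words=50):
    chunks = [c for d in docs for c in chunk(d, max_words)]
    return truncate(deduplicate(rank(chunks, query)), token_budget)
```

Ranking before truncation matters: the budget then cuts the least relevant material first, so a tighter cap degrades quality gradually instead of arbitrarily.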

12.2 Wide queries that bloat prompts

Most runaway bills start as ordinary SQL—a wide SELECT, an under‑filtered JOIN, or an intermediate result far larger than the prompt needs. Curate narrow views that project only essential fields, push predicates down, and pre‑aggregate where possible. Upstream query optimization reduces tokens and latency more reliably than downstream prompt tweaks.

12.3 Synchronous re‑embedding and per‑write index refresh

Refreshing embeddings or indexes on every write feels “fresh” but drains credits and creates brittle hot paths. Move to an hourly or nightly cadence aligned with business freshness, batch updates, and monitor lag explicitly. Retrieval speed and cost usually improve immediately.

12.4 Shadow vector stores and split‑brain retrieval

Standing up a second vector store outside Snowflake “just in case” doubles indexing/storage costs and fragments relevance tuning. If Snowflake is your governed data plane, keep retrieval in Snowflake and send only a minimal, redacted context to any external model.

12.5 Hidden caches that distort tests

Provider‑side caches can make pilots look cheap and production bills surprising. Make cache behavior explicit: decide what’s safe to cache, set TTLs tied to Snowflake freshness signals, and log cache hits alongside request IDs. If a benchmark seems too good to be true, you probably measured a warm cache, not the real system.
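
One way to make cache behavior explicit is to own the cache and log every lookup with its request ID. The class below is a minimal in-memory sketch: the `TTLCache` name, the injectable clock, and the fixed TTL are assumptions for illustration. In practice the TTL would be derived from whatever freshness signal your Snowflake tables expose.

```python
import time


class TTLCache:
    """In-memory cache that records a hit or miss for every request."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}   # key -> (stored_at, value)
        self.log = []      # (request_id, key, "hit" or "miss")

    def get_or_compute(self, request_id, key, compute):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.log.append((request_id, key, "hit"))
            return entry[1]
        # Expired or absent: recompute and restart the TTL window.
        value = compute()
        self._store[key] = (now, value)
        self.log.append((request_id, key, "miss"))
        return value

    def hit_rate(self):
        if not self.log:
            return 0.0
        hits = sum(1 for _, _, outcome in self.log if outcome == "hit")
        return hits / len(self.log)
```

Benchmarks run against a fresh instance measure the cold path; comparing that with the logged hit rate in production shows how much of a pilot's apparent cheapness came from a warm cache.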

12.6 No single trace from query to answer

When retrieval runs in Snowflake and generation happens elsewhere, missing correlation IDs turn debugging into guesswork. Generate a correlation ID at the edge, stamp it into query tags for retrieval, propagate it in prompt metadata, and log it with model responses and write‑backs. With one trace, you can separate retrieval from inference latency, attribute cost accurately, and diagnose failures quickly. For a concise primer on picking signals that matter, see Observability vs. Monitoring in Snowflake.

12.7 Over‑rotating to bigger models

Upgrading to a larger model is tempting, but if retrieval is noisy or duplicative you’ll just pay more to process the same clutter. Establish routing: small/fast model first, escalate only on low‑confidence cases or tasks that truly require advanced capabilities. Improve ranking and truncation before you reach for more parameters.
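
The routing rule can be expressed as a short function. This sketch assumes each model is a callable that returns an answer together with a confidence score between 0 and 1; how that score is produced (a verifier, a self-rating, a heuristic) is left open, and the 0.7 threshold is an arbitrary placeholder.

```python
from typing import Callable, Dict, Tuple

# Assumed interface: a model takes a prompt and returns (answer, confidence).
Model = Callable[[str], Tuple[str, float]]


def route(prompt: str, small: Model, large: Model,
          min_confidence: float = 0.7) -> Dict[str, object]:
    """Try the small model first; escalate only when confidence is low."""
    answer, confidence = small(prompt)
    if confidence >= min_confidence:
        return {"answer": answer, "model": "small", "escalated": False}
    answer, _ = large(prompt)
    return {"answer": answer, "model": "large", "escalated": True}
```

Logging the `escalated` flag alongside the request's correlation ID gives you an escalation rate per use case, which is the number to watch before deciding that a bigger default model is justified.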

12.8 Treating redaction as a last‑mile fix

PII leaks happen when masking is an afterthought. Enforce masking and row‑level policies at the view that feeds prompts so sensitive fields are protected by default. Then apply last‑mile redaction (e.g., <customer_email>) as an additional guard, not the only one.
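
A last-mile guard of that kind might look like the sketch below. It covers email addresses only, with a deliberately simple pattern that will miss unusual formats; it is meant as a second layer behind view-level masking, not a replacement for it. The function name and regular expression are illustrative.

```python
import re

# Simplified email pattern for illustration; not a full RFC 5322 matcher.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str, placeholder: str = "<customer_email>") -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub(placeholder, text)
```

Apply it to the assembled context immediately before the cross-boundary call, so that anything the upstream policies missed is still caught at the edge.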

12.9 Vague benchmarks and moving goalposts

Benchmarks that don’t mirror production retrieval, change multiple variables at once, or skip labeled outcomes produce numbers no one trusts. Keep a representative evaluation set in Snowflake tables, version prompt templates and model parameters, cap context length, and change one dimension per run. Promote only when quality improves (e.g., grounded‑answer rate, SQL correctness) and cost per successful task stays in range.

12.10 FinOps as an afterthought

If budgets live only in a spreadsheet, engineering won’t see problems until month‑end. Attribute Snowflake credits with project‑level query tags and resource monitors; on the model side, track tokens, retries, and timeouts with the same labels. Alert before cutoffs so teams can adjust retrieval or caching without outages.

12.11 Failing to close the feedback loop

Launching without a plan to capture user feedback leaves you blind to what needs improvement. You’ll lack the data to systematically enhance retrieval, prompts, or model choice.

The Antidote: Instrument your UI for thumbs up/down and log these signals in Snowflake using the correlation ID. Use this data to identify failure patterns, enrich your evaluation set, and create datasets for fine-tuning.

Practical takeaway: Most pitfalls are the absence of ordinary discipline. Narrow the views that feed prompts, cap context and deduplicate aggressively, refresh indexes on a sensible cadence, keep retrieval in Snowflake, and make every request traceable end‑to‑end. Do those five things and you’ll reduce risk, improve responsiveness, and keep spend where you expect it—without arguing about models every week.

13) Looking ahead: changes to watch

Strategy for large language models is not a one‑time decision; it’s a posture you revisit. If Snowflake is your data backbone, the safest way to “future‑proof” is to keep a few invariants steady (governed retrieval in Snowflake, minimal and logged cross‑boundary calls, one trace from query to answer, and a cost‑per‑success yardstick) while you swap parts behind those guardrails. The shifts below are the ones worth monitoring, along with how to respond to each without re‑architecting every quarter.

13.1 Model velocity will keep rising—design for routing, not for a “forever model”

The external LLM ecosystem moves fast: new base models, instruction‑tuned variants, and narrow domain models arrive monthly. Rather than betting the farm on a single winner, assume model choice will expand and make routing your default. Let small, fast models handle routine prompts and escalate to larger models only when confidence is low or a capability truly requires it. Because retrieval remains in Snowflake, this swap doesn’t change masking, row policies, lineage, or your data observability story; it only swaps the generation hop. That’s the point: capability can change without touching governance.

13.2 Bigger context windows won’t replace discipline

Longer contexts and better compression techniques will tempt teams to “just include more text.” Resist it. The economics of tokens and the cognitive load on models don’t vanish with a bigger window. The pattern that survives is still chunk → rank → deduplicate → truncate, paired with consistent formatting. Use the extra headroom for true edge cases, not as a default. Your reward is predictable p95 latency, lower token spend, and fewer regressions when prompts or models change.

13.3 Native Snowflake capabilities will deepen—keep the boundary clear

Expect Cortex to keep expanding: easier embeddings, more powerful search over proprietary corpora, and continued improvements to LLM‑assisted SQL and analyst experiences. Welcome those gains, but hold the line on architecture: Snowflake is your governed data plane; LLMs are your logic plane. That clarity lets you adopt new in‑platform features where they fit while keeping the Hybrid path open for specialized models or agent behaviors. When the boundary is explicit, “new features” doesn’t mean “new platform sprawl.”

13.4 Governance expectations are tightening, not loosening

Boards and regulators are moving in one direction: more scrutiny on data classes, Snowflake security controls, prompt/response retention, and cross‑region flows. If you enforce masking and row‑level policies at the views that feed prompts, and keep cross‑boundary calls minimal, redacted, and logged, you’ll meet most new requirements with configuration, not rewrites. Treat secrets, service identities, and private networking as table stakes rather than upgrades. The benefit is practical: incident response gets faster when “where data can go” was already decided.

13.5 Prices will change—your unit metric shouldn’t

Credit rates, token prices, packaging, and quotas will evolve, sometimes in your favor and sometimes not. Don’t chase every rate‑card tweak with an architectural swing. Keep measuring cost per successful task with the same trace you use for quality. When the bill moves, that number will tell you whether the lever is query optimization (trim columns/rows), context discipline (deduplicate and truncate), caching (raise hit rates), routing (prefer smaller models until confidence drops), or platform choice (Cortex vs Hybrid for a specific route). The metric keeps you calm when pricing is noisy.

13.6 Benchmarks and evaluation will get easier to automate—hold the promotion bar

More teams are wiring evaluation sets into CI, versioning prompt templates alongside code, and gating promotion on stable quality and cost. That trend will continue. The trick isn’t tool choice; it’s the contract: no promotion if grounded‑answer rate, SQL correctness, or cost‑per‑success regresses. If you keep the harness in Snowflake (eval tables, query tags, a correlation ID that stitches retrieval to generation to write‑back), you can adopt new models or Cortex features without losing comparability.
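
The promotion contract can be written down as a small gate that a CI job calls after each evaluation run. The metric keys and the 10% cost tolerance below are assumptions for the sketch; the rule itself (block on any quality regression or on cost per success drifting out of range) follows the text.

```python
def cost_per_success(total_cost, successes):
    """Cost per successful task; infinite when nothing succeeded."""
    if successes == 0:
        return float("inf")
    return total_cost / successes


def can_promote(baseline, candidate, max_cost_increase=0.10):
    """Allow promotion only if quality holds and cost per success stays in range."""
    quality_ok = all(
        candidate[m] >= baseline[m]
        for m in ("grounded_answer_rate", "sql_correctness")
    )
    base_cps = cost_per_success(baseline["total_cost"], baseline["successes"])
    cand_cps = cost_per_success(candidate["total_cost"], candidate["successes"])
    # Assumed tolerance: cost per success may rise by at most max_cost_increase.
    cost_ok = cand_cps <= base_cps * (1 + max_cost_increase)
    return quality_ok and cost_ok
```

Keeping the gate this small is deliberate: when a promotion is blocked, the reason is one of three numbers, and each can be traced back to the evaluation rows that produced it.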

13.7 Platform strategy will stay strategic—avoid duplicate sources of truth

It will remain tempting to mirror raw data into an external platform “temporarily.” Don’t. If Snowflake is your system of record, keep embeddings and retrieval in Snowflake and send only a minimal packet of redacted context to any external model. It’s not ideology; it’s operational clarity: one retrieval stack, one set of relevance knobs, one place to watch drift. If your organization is also weighing Databricks vs Snowflake, treat that as a platform decision in its own right—then apply the same boundary discipline whichever way you go.

13.8 A light quarterly rhythm keeps you honest

Once a quarter, rerun your evaluation set with any model/version you’re considering; check p95 and cache hit rates; and look for silent token creep by inspecting average context size. Confirm resource monitors and project‑level tags are still catching all retrieval jobs and LLM calls. Then pick one lever to tune—retrieval shape, caching, or routing—and leave the rest alone. Incrementalism prevents whiplash and keeps teams shipping.
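
The token-creep check in that review can be as simple as comparing average context size between two periods. In this sketch the inputs are lists of per-request context token counts pulled from your trace logs, and the 10% threshold is an arbitrary placeholder.

```python
from statistics import mean


def token_creep(previous, current, threshold=0.10):
    """Flag growth in average context tokens between two periods."""
    prev_avg = mean(previous)
    cur_avg = mean(current)
    growth = (cur_avg - prev_avg) / prev_avg
    return {
        "previous_avg": prev_avg,
        "current_avg": cur_avg,
        "growth": growth,
        "flag": growth > threshold,
    }
```

A flagged result is a prompt to look at retrieval shape and truncation settings first, since those are the usual sources of gradual context growth.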

13.9 The practical takeaway

You don’t need to predict next quarter’s model to be ready for it. If you hold a few invariants steady—governed retrieval in Snowflake, explicit and logged cross‑boundary calls, a single trace for cost and quality, and disciplined context management—you can adopt new capabilities on your terms. The strategy endures because it’s boring: keep the data where the controls live, keep the bridge to LLMs narrow, and let evidence—not fashion—decide when to add complexity.

14) Conclusion: start where your data and controls live

If Snowflake is already your system of record, the surest way to get dependable value from large language models is to begin where your governance works today. Treat Snowflake as the governed data plane and whichever model you choose—Cortex, a managed API, a cloud ML platform, or a self‑hosted endpoint—as the logic plane. Keep the bridge between them narrow, explicit, and observable. That one framing reduces risk, prevents platform sprawl, and makes every later decision—pricing, performance, model choice—simpler to reason about.

From there, the path is incremental. Start Cortex‑first for internal, governed use cases: summarizing and classifying records, extracting entities into structured columns, grounding answers in curated corpora, or improving analyst throughput with LLM‑assisted SQL. You move fast because you’re not introducing a second production estate to secure and observe. When a real capability gap appears—say, a domain‑specialized model or an interactive surface that measurably benefits from a specific external model—add Hybrid selectively by keeping embeddings and retrieval in Snowflake and sending only a minimal, redacted context to the model. If the application itself is the product and needs agent orchestration, frequent model swaps, or A/B discipline, adopt an externalized platform deliberately, while keeping Snowflake the place where lineage and access never go stale.

Whichever route you take, success has less to do with clever prompts and more to do with habits that scale. Shape retrieval where the data lives so prompts start small on purpose. Enforce masking and row‑level policies at the views that feed prompts so PII protection isn’t a last‑mile script. Cap context length, deduplicate aggressively, and cache only what’s safe to reuse, with TTLs tied to data freshness. Propagate a single correlation ID from retrieval to generation and back so cost, latency, and quality read as one story. And insist on a stable yardstick—cost per successful task tied to clear pass/fail definitions—so architecture follows evidence, not fashion.