It’s no secret that companies building digital platforms have been experimenting with AI-enabled solutions for a while. But now that AI is the ultimate buzzword, everyone is desperate to show how much they’re on board with it. Snowflake is no exception, and their recent Snowflake Copilot offering is proof.

But the ultimate question when evaluating digital platforms shouldn’t be “Does it have AI?” In the near future—if not already—AI will be table stakes, so that question will be meaningless. The question to ask is: How well do these AI-enabled features solve core business problems, and do they actually contribute value to the bottom line?

Just over a year ago, Snowflake announced their Snowflake Copilot feature, an AI-enabled assistant that integrates directly into the cloud data platform. But does Snowflake Copilot add value to the platform’s users? And does it offer any help in optimizing Snowflake cost and performance? Read on for answers to these questions and more.

What is Snowflake Copilot?

Snowflake Copilot is an AI-powered assistant that operates natively within the Snowflake data cloud platform. Its core purpose is to enable natural language interaction with the Snowflake environment, thus simplifying data analysis and expanding the platform’s accessibility.

How does Snowflake Copilot work?

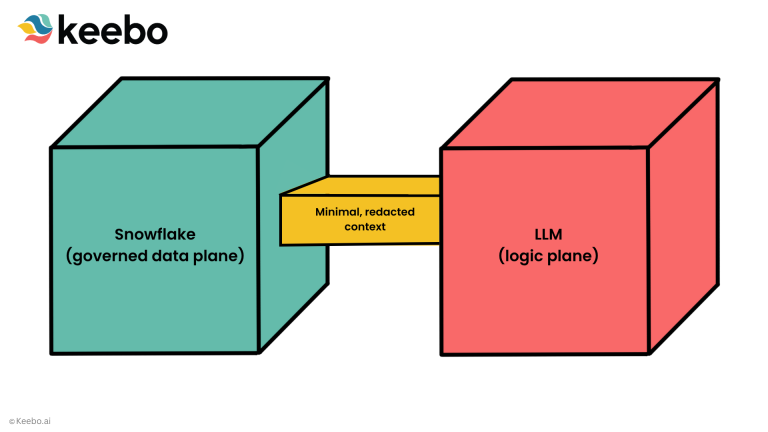

Snowflake Copilot is powered by Snowflake Cortex, the data platform’s managed AI service. It leverages a combination of Snowflake’s proprietary LLMs, augmented in some cases by models from partners like Mistral. These partnerships help to enhance Copilot’s understanding of SQL and the accuracy and efficiency of the queries it generates.

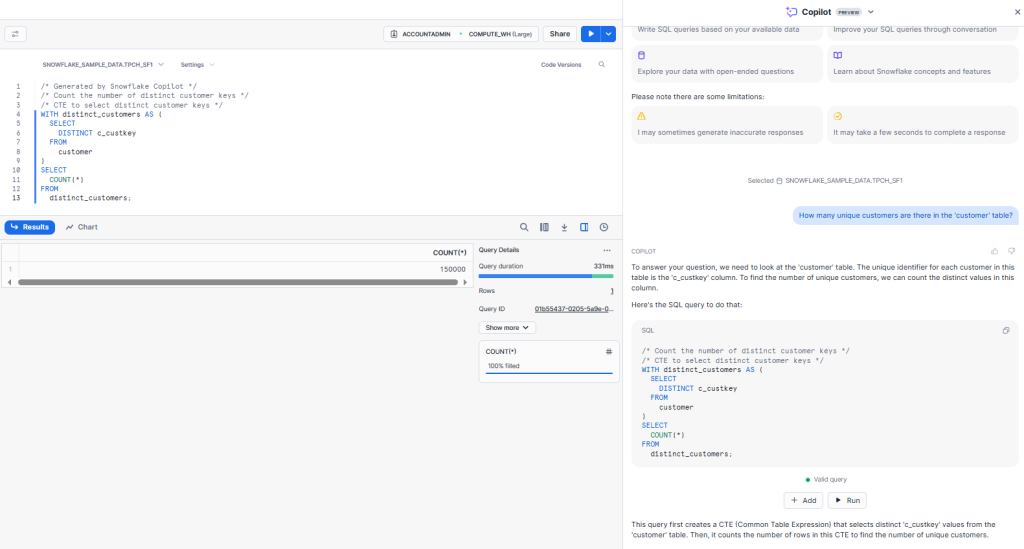

Copilot’s core capability is text-to-SQL conversion. Users enter questions and requests in plain language. Copilot then analyzes and interprets these requests, using the context of Snowflake metadata to better discern the user’s intent. Finally, Copilot generates relevant SQL queries or explains how to achieve the user’s desired result.

Copilot is also built to be conversational and iterative. If you’re dissatisfied with a query it returns, you can ask follow-up questions. This conversational format enables complex, multi-step analysis to create efficient, refined queries.

NOTE: Snowflake Copilot is a new and developing feature. Things may have changed since this article was written, so be sure to consult Snowflake’s official documentation for the latest information on the feature’s capabilities and functionality.

Does Snowflake Copilot present a data risk?

A common concern with any AI-enabled platform is its ability to access your data. Snowflake Copilot users should have no concerns on this front:

- All data processing stays within Snowflake’s environment

- Snowflake Copilot respects existing role-based access control (RBAC), so users only receive suggestions based on the data they have access to

- Snowflake Copilot only analyzes metadata (schema names, table names, descriptions, column names, etc.) to get the information it needs. It does not read the data stored in your tables.
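To make the RBAC point concrete, here is a minimal sketch of ordinary Snowflake grants. The role, database, and schema names (marketing_analyst, analytics, analytics.marketing) are hypothetical and not taken from Snowflake’s Copilot documentation. The assumption is that a user working under this role would only get suggestions about objects in that one schema.

```sql
-- Hypothetical role and object names, for illustration only.
CREATE ROLE IF NOT EXISTS marketing_analyst;

-- The role can see one database and one schema inside it.
GRANT USAGE ON DATABASE analytics TO ROLE marketing_analyst;
GRANT USAGE ON SCHEMA analytics.marketing TO ROLE marketing_analyst;

-- Read-only access to the tables in that schema.
GRANT SELECT ON ALL TABLES IN SCHEMA analytics.marketing TO ROLE marketing_analyst;
```

Nothing here is Copilot-specific. These are the same grants that already govern what the role can query by hand.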

How do you operate Snowflake Copilot?

If you’re new to Snowflake Copilot, here’s a step-by-step instruction guide on how to operate it. When enabled, Copilot is available straight out of the box—no further setup is needed. Just follow these steps:

- Open a SQL worksheet or notebook in Snowsight. Select the Ask Copilot button.

- The Snowflake Copilot panel should open on the right-hand side of your screen. You’ll then need to select the appropriate database and schema to set the context for Copilot’s analysis.

- Enter your question or data request using natural language. Snowflake Copilot will also provide suggestions for how to phrase your prompts. You can also provide custom instructions—formatting, schema focus, etc.—that can help tailor Copilot’s responses to your organization’s best practices.

- Snowflake Copilot will return SQL queries, text explanations, or documentation-based answers. You can either run the suggested queries immediately, or add queries to a worksheet for further review or modification.

- You can keep asking questions within Copilot to refine and optimize queries, as well as troubleshoot problem areas. If you’re unfamiliar with SQL syntax or Snowflake’s features, you can ask Copilot about those too.

Is Snowflake Copilot limited to a specific Edition?

No, Snowflake Copilot is not Edition-specific. Instead, Snowflake Copilot is region-based. If it’s available within your region, then you have access to it, regardless of which Edition you subscribe to. As of today, Snowflake Copilot is available in the following AWS and Azure regions:

- AWS US West 2 (Oregon)

- AWS US East 1 (N. Virginia)

- AWS Europe Central 1 (Frankfurt)

- AWS AP Northeast 1 (Tokyo)

- AWS Europe West 1 (Ireland)

- AWS AP Southeast 2 (Sydney)

- Azure East US 2 (Virginia)

- Azure West Europe (Netherlands)

For the most reliable, up-to-date insights into Snowflake Copilot’s availability, be sure to check out the latest official Snowflake documentation.

Does Snowflake Copilot require additional cost?

Yes and no. On the one hand, Snowflake Copilot doesn’t charge a specific subscription fee for the feature itself and, as mentioned above, it’s not limited to a specific Edition. However, because Copilot queries Snowflake metadata, it does eat into your cloud services costs.

As we’ve mentioned before in our Snowflake pricing guide, Snowflake only charges for cloud services on days when their consumption exceeds 10% of that day’s warehouse compute usage. So if you’re able to stay within that ratio, Snowflake won’t charge you to use Copilot.
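One way to see whether you are being billed for cloud services is to query the account usage views. The sketch below assumes your role can read SNOWFLAKE.ACCOUNT_USAGE.METERING_DAILY_HISTORY. The 30-day window and the column aliases are illustrative choices. Note that the view reports all cloud services usage for the account, not Copilot’s share alone.

```sql
-- Daily cloud services credits: used, adjusted, and actually billed.
-- credits_adjustment_cloud_services is zero or negative; it is the daily
-- adjustment Snowflake applies under the 10% rule.
SELECT
    usage_date,
    SUM(credits_used_cloud_services)       AS cloud_services_used,
    SUM(credits_adjustment_cloud_services) AS cloud_services_adjustment,
    SUM(credits_used_cloud_services + credits_adjustment_cloud_services)
                                           AS cloud_services_billed
FROM snowflake.account_usage.metering_daily_history
WHERE usage_date >= DATEADD(day, -30, CURRENT_DATE())
GROUP BY usage_date
ORDER BY usage_date DESC;
```

Days where cloud_services_billed is greater than zero are the days when cloud services usage went over the threshold.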

How do Snowflake users get the most out of Snowflake Copilot?

No feature is valuable in itself. Its value is determined by how well (or poorly) other people use it. So how are Snowflake users best able to get value out of Snowflake Copilot? Here are some top-of-mind use cases.

Exploring unfamiliar datasets

One of the biggest roadblocks to using Snowflake isn’t the platform itself, but the data contained within it. When you’re dealing with millions—even billions—of rows, it can be challenging and time-consuming to become familiar with those data.

Say you’ve just inherited a table called customer_events with ten distinct date fields, half a dozen JSON columns, and hundreds of unique event types. If you don’t have the appropriate documentation, your first impulse is probably to run a handful of SQL snippets to get your head wrapped around those data. For example:

    -- What date ranges are we dealing with?
    SELECT
        MIN(event_timestamp) AS first_seen,
        MAX(event_timestamp) AS last_seen
    FROM customer_events;

    -- Which event types exist?
    SELECT DISTINCT event_type FROM customer_events;

    -- How many customers per event type?
    SELECT
        event_type,
        COUNT(DISTINCT customer_id) AS unique_customers
    FROM customer_events
    GROUP BY event_type
    ORDER BY unique_customers DESC
    LIMIT 10;

However, writing and rewriting these queries over and over until you become familiar with the data is highly inefficient, both in terms of time and money. Queries burn credits. Plus, if you’re like most engineers or DBAs, you don’t have an excess of hours in the day to waste on exploratory snippets.

Snowflake Copilot can help save both time and money. Rather than writing each SQL snippet yourself, you can simply ask Copilot to profile the table, writing something like the following:

“Provide a summary of customer_events: row count, null rates per column, distinct value counts for key fields, and top five values for any string column.”

Copilot will then automatically generate a single script that will execute the following actions:

- Count rows (data volume)

- Compute null and distinct counts for columns (sparsity or cardinality issues)

- List top values for high-cardinality string fields

- Suggest optimal clustering key based on the places where filtering happens most frequently—which can reduce your future scan costs

In a matter of seconds, you have the baseline context you need to engage in further, deeper analysis. Whether you need to filter, join, or aggregate data, you can do so without wasting cycles on blind SQL or guesswork.
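For a sense of what such a profiling script might contain, here is a hand-written sketch. It is not actual Copilot output. It reuses the customer_events columns from the snippets above (customer_id, event_type) and covers only the volume, null-rate, distinct-count, and top-values steps.

```sql
-- Volume, sparsity, and cardinality in a single pass over the table.
-- NULLIF guards against division by zero if the table is empty.
SELECT
    COUNT(*)                                                AS row_count,
    COUNT_IF(customer_id IS NULL) / NULLIF(COUNT(*), 0)     AS customer_id_null_rate,
    COUNT_IF(event_type IS NULL) / NULLIF(COUNT(*), 0)      AS event_type_null_rate,
    COUNT(DISTINCT customer_id)                             AS distinct_customers,
    COUNT(DISTINCT event_type)                              AS distinct_event_types
FROM customer_events;

-- Top five values for a string column.
SELECT
    event_type,
    COUNT(*) AS occurrences
FROM customer_events
GROUP BY event_type
ORDER BY occurrences DESC
LIMIT 5;
```

A real profile would repeat the null-rate and distinct-count expressions for every column of interest, which is exactly the repetitive part worth delegating.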

Fast query generation

Writing queries by hand is tedious, time-consuming, and opens the door to human error—all of which impede both cost and performance efficiency. While many queries are automatically generated by integrated applications, there are still use cases that require you to write queries yourself:

- One-off deep dives. One of your internal teams may need a custom analysis for a new initiative or campaign. This will require custom WITH clauses, window functions, or conditional logic to surface the correct insights.

- Complex transformations. If you need to clean or normalize nested JSON or semi-structured data, you’ll need a human set of eyes on the query.

- Troubleshooting and error investigation. Identifying the root cause of an error or inefficiency in Snowflake often requires highly specific queries that are unique to that circumstance.

- Performance tuning. Identifying, pruning, and caching slow queries often require manual SQL edits—usually by someone with deep technical knowledge of the data, Snowflake, and SQL.

- ETL orchestration and audit. Building or debugging pipelines often requires custom queries with context-specific logic that an auto-generated query won’t provide.
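As an illustration of the “complex transformations” case, the sketch below unnests a JSON array with Snowflake’s LATERAL FLATTEN. The VARIANT column payload and its items, sku, and qty fields are hypothetical. They stand in for whatever your semi-structured data actually contains.

```sql
-- Assumes a VARIANT column `payload` shaped like:
--   {"items": [{"sku": "A-100", "qty": 2}, ...]}
-- Produces one output row per element of the items array.
SELECT
    e.customer_id,
    e.event_type,
    item.value:sku::STRING AS sku,
    item.value:qty::NUMBER AS quantity
FROM customer_events AS e,
     LATERAL FLATTEN(input => e.payload:items) AS item;
```

Events whose payload has no items array drop out of the result. Whether that is the right behavior is the kind of judgment that still needs a human set of eyes.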

These queries are genuinely needed, but because they demand so much tuning and specificity, they often take a disproportionate amount of time relative to the value they deliver. For example, marketing may need a custom analysis of customer data for one of their campaigns. That’s potentially hours of work for a set of insights that may only provide marginal value.

However, you can’t just use an average GenAI tool to “skip the line” and write queries faster. There are a number of problems with using a tool like, say, ChatGPT. For starters, ChatGPT isn’t built for SQL. It is notorious for introducing errors and discrepancies into even the simplest queries, let alone those intended to execute complex operations.

But there’s also the issue of security and privacy. ChatGPT isn’t confidential, to the point that most experts recommend never giving it your password or other sensitive information. The same goes for your queries. Giving ChatGPT access to table names, column names, and other identifying information is too risky.

You could, of course, go through all the trouble of anonymizing your table data, creating the query through ChatGPT, then going back and recoding the data before you run it through Snowflake. It should seem pretty obvious that this is highly time consuming—perhaps even more than if you just manually wrote the query yourself. Not to mention the risk of a piece of data being missed either when you anonymize or recode the query.

As a result, most organizations simply prohibit the use of ChatGPT or other public GenAI tools in writing SQL queries. So even if you were very careful, that option is probably not available to you.

But because Snowflake Copilot is 100% Snowflake native, it gives you the best of both worlds: it eliminates grunt work, and it does so in a safe, secure way. You don’t have to write every single JOIN or WHERE clause yourself. Instead, simply describe your goal in plain language. For example:

“Show me any columns in orders where more than 5% of values are null, and suggest a remediation script that replaces nulls with the column median.”

Copilot will then produce a first draft of a multi-step SQL script. While the code will often run as written, you should still test it and tune it to fit the use case before relying on it in production. But in that situation, your labor hours are spent in an area of value (improving query efficiency and accuracy) rather than on tedious coding tasks.
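A first draft for a single column might look something like the sketch below. This is a hand-written illustration, not Copilot output. The numeric column discount_amount and the session variable discount_median are hypothetical names.

```sql
-- Step 1: measure the null rate for one candidate column.
SELECT
    COUNT_IF(discount_amount IS NULL) / NULLIF(COUNT(*), 0) AS discount_amount_null_rate
FROM orders;

-- Step 2: if the rate is above 0.05, capture the median of the non-null values
-- in a session variable so it can be inspected before anything is changed.
SET discount_median = (SELECT MEDIAN(discount_amount) FROM orders);

-- Step 3: replace nulls with that median.
UPDATE orders
SET discount_amount = $discount_median
WHERE discount_amount IS NULL;
```

Because the UPDATE modifies data in place, a draft like this is worth running against a clone or a test schema first.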

Plus, because Copilot never reads your data nor transports it from your Snowflake instance, you remain compliant with your organization’s data privacy and security policies.

SQL optimization

Complex SQL scripts that query high volumes of data take a long time to process. This is especially the case if you need to process a large number of joins, CTEs, or conditional statements. As a result, most Snowflake users are attentive to the need to write queries as efficiently as possible.

In addition to generating new queries, Snowflake Copilot can optimize existing queries. You paste the query text into Copilot, and it suggests improvements such as rewriting joins or choosing better clustering keys. Because Copilot uses your schema metadata for context, its suggestions are tailored to your specific tables and use case rather than regurgitating generic recommendations.

Because Copilot is built as a conversational tool, the user interface is naturally geared toward iterative improvement and query refinements. So if you spot an issue in Copilot’s rewritten queries, you can simply ask it to make the change and it will fix it.
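To illustrate the kind of rewrite you might ask for, here is a hand-written before-and-after. It is an assumed example rather than something Copilot is documented to produce. It reuses the customer_events columns from earlier in this article to find each customer’s most recent event.

```sql
-- Before: join the table back to an aggregate of itself.
SELECT e.customer_id, e.event_type, e.event_timestamp
FROM customer_events AS e
JOIN (
    SELECT customer_id, MAX(event_timestamp) AS last_seen
    FROM customer_events
    GROUP BY customer_id
) AS latest
  ON e.customer_id = latest.customer_id
 AND e.event_timestamp = latest.last_seen;

-- After: a window function with QUALIFY, no self-join.
SELECT customer_id, event_type, event_timestamp
FROM customer_events
QUALIFY RANK() OVER (
    PARTITION BY customer_id
    ORDER BY event_timestamp DESC
) = 1;
```

The two forms can differ on edge cases. For instance, rows with a null customer_id are dropped by the join but kept by the window version. Whether the rewrite is actually faster depends on your data, so it is worth comparing both in the query profile before adopting either.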

Search Snowflake Documentation

Snowflake is a complex platform, and sometimes finding a specific answer to a usage question can be daunting. While Snowflake has extensive, detailed documentation, sometimes the answer to your question is buried in thousands of words of text and SQL snippets.

Snowflake Copilot is powered by LLMs and can surface answers to your questions from Snowflake’s documentation. This enables users to get the most out of Snowflake by quickly and easily retrieving information about its capabilities.

Learn about SQL Syntax

To operate Snowflake effectively, you need at least a basic understanding of SQL. But less experienced engineers often need to consult online SQL manuals and YouTube videos to handle more complex functions.

In addition to surfacing insights on Snowflake itself, Snowflake Copilot can also provide on-demand knowledge on specific SQL syntax and provide recommendations on how to write queries more efficiently.

Not only does this use case help to accelerate immediate data needs, but it also onboards and trains new personnel faster. Because Copilot offers immediate, contextual guidance within the platform, there’s no need for a senior engineer to constantly look “over the shoulder” to coach and train new personnel. This can free up their time for other tasks.

Snowflake Copilot: Key features

- Natural language inputs. Users describe tasks in plain language and Copilot generates and refines SQL queries to achieve those desired outcomes.

- Contextual awareness. Copilot is aware of your data’s structure and context, enabling more accurate and relevant suggestions and explanations.

- Complete integration with Snowflake. Copilot integrates fully with SQL Worksheets, Notebooks, and other features in Snowflake. There’s no need to jump to a different platform.

- Data governance. Copilot is 100% Snowflake native, only reads metadata, and respects your RBAC rules.

- Snowflake Documentation search engine. Users can receive guidance on the workings and best practices of various Snowflake features, enabling them to get more value out of the platform.

Optimizing cost and performance with Snowflake Copilot: pros and cons

One of the immediate benefits of using Snowflake Copilot is that it can streamline engineer and DBA workflows, enabling them to work faster and more efficiently. It also (as mentioned above) reduces the need for excessive intervention from more experienced engineers, enabling them to direct their attention to more value-generating tasks.

On the other hand, Snowflake Copilot doesn’t actually reduce your Snowflake bill directly. In fact, if you overuse it, you could end up exceeding your 10% cloud services threshold and burn more credits. And while it does help you optimize your SQL queries to be more efficient, this really only works for manually written queries.

So it’s worth it to take a long hard look at the pros and cons of using Snowflake Copilot in cost and performance optimization. Where does it add value? And, more importantly, where do you need to consider implementing an alternative solution?

Pros

- Query generation and optimization is contextual, making its recommendations specific to your data structure and volume

- Copilot’s LLMs are trained on SQL, enabling them to write queries more efficiently than generic GenAI tools (e.g. ChatGPT, Claude)

- Accelerates data exploration by generating and optimizing one-off queries faster than “by hand”

Cons

- Still requires manual intervention—no autonomous solutions

- Limited to the questions the user is asking—Copilot doesn’t know to surface information if the user doesn’t know to look for it in the first place

- Unable to optimize automatically generated queries

- Limited scope when evaluating large schemas, as Copilot only considers the 10 most relevant tables and top 10 columns when generating responses

- 3-4 hour delay before Copilot recognizes newly created databases, schemas, or tables

- Copilot does not access data, which means it cannot analyze data distribution or query execution statistics

- Beyond query structure, Copilot can’t provide informed cost and performance recommendations

Why Keebo is a perfect companion to Snowflake Copilot

Despite Snowflake Copilot’s shortcomings, the tool is a major step forward for improving the platform’s usability, accelerating skill development, and making data teams operate more efficiently. When paired with an autonomous, AI-powered optimization engine like Keebo, the benefits increase exponentially:

- Improve performance efficiency by accelerating query writing (Copilot) + automatically rightsizing warehouses to ensure efficient resource provisioning (Keebo)

- Optimize poorly written queries (Copilot) + route automatically generated queries to warehouses with enough provisioned resources to handle them (Keebo)

- Gain insight into the efficiency of your query structure (Copilot) + your warehouses, users, data, etc. (Keebo)

Learn more about how Keebo’s autonomous optimization engine works—schedule a demo with our team today.