Of all the ways to optimize Snowflake we have discussed in this series, perhaps the easiest one is optimizing your warehouse settings. However, it is also the area that will most directly affect your Snowflake costs, so it is important to monitor warehouse settings frequently as a mistake here will work against you and even nullify the efforts you have made in other areas.

Introduction

Keebo is the first data learning platform that automatically optimizes cloud data warehouses and analytics. On behalf of our customers, we perform thousands of Snowflake optimizations daily. This is the third of 3 articles where we will examine what you can do right away to optimize Snowflake: queries, schema, and warehouse.

Here are the top 4 warehouse optimizations that will have the most beneficial impact for Snowflake.

Top Snowflake Warehouse Optimizations

Data Ingestion

You can minimize credit usage for data ingestion depending on whether you use a dedicated warehouse for data ingestion or not.

If you use a dedicated warehouse for data ingestion, simply start the warehouse right before the data load process starts and then stop it immediately after it ends. You can automate this by enabling auto-resume and auto-suspend for the warehouse–just be sure that no other applications will hit the warehouse after your data load completes as you will be paying again until the auto-suspend value is reached.

If you are sharing a warehouse with other applications, then you can simply scale-up the warehouse before a data ingest job and then scale it back down after to minimize your credit usage.

Right-sizing the Warehouse

Perhaps the most important decision that directly affects your Snowflake costs is choosing an appropriate warehouse size. Size in this case does not refer to storage costs, but compute costs. The larger the warehouse, the faster it can handle a compute task (query). Snowflake currently offers the following sizes, each costing a different number of credits per hour.

| Warehouse size | Credits per hour |

| X-small | 1 |

| Small | 2 |

| Medium | 4 |

| Large | 8 |

| X-large | 16 |

| 2X-large | 32 |

| 3X-large | 64 |

| 4X-large | 128 |

| 5X-large | 256 |

| 6X-large | 512 |

The basic idea is to pick a warehouse size that will efficiently process most of your queries. Sizing based on your slowest query would mean you are paying too much, since most of the time you will be paying for an underutilized warehouse. On the other hand, if your warehouse is undersized, you will waste valuable time while queries wait their turn to be processed. If your query loads are not predictable, you will need automation, such as what Keebo provides, to dynamically adjust the size of your warehouse based on the current loads.

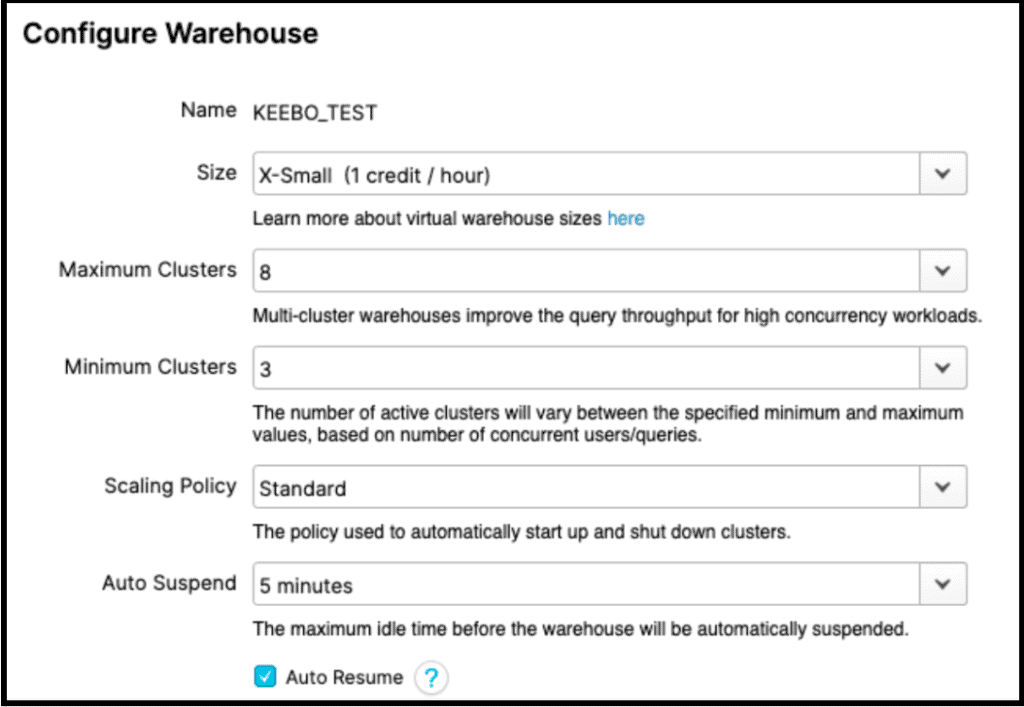

As you increase the size of the warehouse, you are scaling-up. If you have Snowflake Enterprise edition or higher, you also can scale-out using their multi-cluster feature. You can set the defaults for size and number of clusters:

In theory, because of Snowflake’s pricing, the credits required to run an X-large warehouse for an hour is no different than running a large warehouse with 2 clusters for an hour. Both would use 16 credits for that hour. In practice, though, there is a big difference in the effectiveness of scaling-up vs scaling-out for various workloads.

In general, scaling-up helps when you have slow (large) queries and scaling-out helps when you have a lot of concurrent queries. The way most Snowflake customers determine if they have slow queries is to look at TOTAL_ELAPSED_TIME in their QUERY_HISTORY view. But this can be misleading because it only indicates a symptom and not the cause. To find out the root cause of slow queries, you need to look at the following fields in your QUERY_HISTORY view:

| COMPILATION_TIME | If this is high, it typically indicates overly complicated queries, such as nested queries (see part 1 of this series for more information). |

| EXECUTION_TIME | If this is high, it indicates expensive (long-running) queries. You need to either optimize these queries or scale-up to a larger warehouse. |

| QUEUED_PROVISIONING_TIME | Ideally, this number is 0. Many non-zero instances indicate the cluster is being suspended too frequently. Increase your “auto suspend” interval. |

| QUEUED_OVERLOAD_TIME | This is often the key to understanding TOTAL_ELAPSED_TIME. If a lot of fast queries are waiting behind slower queries, you will see considerable overload. To address this, you should ideally scale-out. If not that, then separate the slow queries and fast queries into separate warehouses. |

Combine Applications

Snowflake makes it easy to create separate warehouses that access the same data. There are some good reasons for doing this, such as separating workloads for security reasons, isolating heavy workloads, and even accounting for different budgets. But separating workloads will almost always increase your compute costs. The reason is simple: Snowflake bills you by the number of seconds a warehouse runs multiplied by the credits per second for that warehouse size.

Consider this example. You have a query Q1 arriving at 9:00 am that runs for 60 seconds in a large warehouse. You have a second query Q2 arriving at 9:01 am that runs for 120 seconds in the same warehouse. Even if you set your auto suspend to be 3 minutes, you will be paying for your cluster to run from 9:00 am to 9:06 am. Since a large warehouse costs 8 credits per hour, you will be billed 0.8 credits for these 2 queries: 8 * 6/60 = 0.8.

Now let’s compare this to a situation where you have separated your workload into 2 warehouses: W1 and W2, where Q1 runs on W1 and Q2 runs on W2. Now you will be paying for W1 running from 9:00 to 9:04 (1 minute for the query plus 3 minutes for auto-suspend) and W2 running from 9:01 to 9:05 (2 minutes for the query plus 3 minutes for auto-suspend). This is a total of 9 minutes of a Large warehouse which will be billed for 1.2 credits: 8 * 9/60 = 1.2. You could keep costs comparable by downsizing W1 and W2 but this would result in a performance penalty of roughly doubling the latency of the queries.

Now you could take this to the extreme and run the entire organization on a single warehouse. That is not our recommendation, either. There are good reasons for using multiple warehouses, but these decisions must be made with a full understanding of the financial costs.

Auto-suspend

This Snowflake parameter allows for automatically suspending a warehouse whenever there is no activity for a specific period. It can also automatically resume when a new query arrives. However, if this interval isn’t chosen carefully, it can actually increase costs. This is for a simple reason: whenever a warehouse is suspended, its entire local memory cache is dropped. This means that even if a warehouse can be resumed in 1 or 2 seconds, the subsequent queries might take significantly longer because they now must read data from cold storage rather than local memory. Choosing your auto-suspend interval, therefore, requires a balance between an exceedingly large interval versus an overly aggressive one.

Conclusion

The sheer complexity of analyzing thousands or hundreds of thousands of queries and all the factors that need to be considered means that decisions on these warehouse parameters are never going to be optimal. You are always going to leave some money on the table. However, you can tilt the odds in your favor by automating the warehouse optimizations with machine learning. Keebo gives data teams their time back, boosts performance, and saves money with fully automated data warehouse and analytics optimizations. Keebo doesn’t merely alert you to performance issues, we fix them in real-time.